Big data trends now shape how teams build products, fight fraud, and plan budgets. Data also supports everyday work, from customer support dashboards to supply chain alerts. Yet data stacks keep getting more complex. That complexity creates slow pipelines, rising cloud bills, and trust issues in reports. So teams want simpler platforms, faster analytics, and stronger governance. They also want AI to help, not to add more chaos. This guide breaks down the big data trends that matter most right now, with clear examples and practical takeaways you can use in real projects.

9 Key Big Data Trends For 2026

1. AI-Native Data Pipelines

AI-native pipelines push “automation-first” into DataOps. Teams stop writing endless glue code for every new source. Instead, AI assists with mapping fields, generating tests, and proposing transformations. You still set rules, but the system handles repetitive work. That shift speeds up delivery and reduces human error.

This big data trend also changes how teams work. Data engineers spend less time on brittle scripts. They spend more time on product thinking. They define data contracts. Then they design event schemas and validate business meaning with stakeholders. Those steps raise trust and cut rework.

What Changes in Practice

- AI suggests transformations, but you approve them.

- Lineage becomes a default artifact, not an afterthought.

- Teams use policy checks early, not at release time.

Example You Can Copy

Imagine an e-commerce team that ingests orders, returns, and marketing events. An AI-native pipeline can flag a sudden schema change in “discount_code.” It can then propose a safe migration plan. Next, it can generate unit tests that protect downstream revenue dashboards. That flow keeps reporting stable while the business still moves fast.
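The schema-change check in this example can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the expected schema, every field name except `discount_code`, and the helpers `infer_schema` and `detect_drift` are hypothetical. A real AI-native pipeline would surface these findings to a reviewer rather than apply fixes on its own.

```python
from typing import Any

# Hypothetical expected schema for an "orders" feed: field name -> Python type.
EXPECTED_ORDER_SCHEMA: dict[str, type] = {
    "order_id": str,
    "amount": float,
    "discount_code": str,
}


def infer_schema(records: list[dict[str, Any]]) -> dict[str, set[type]]:
    """Collect the set of observed value types per field across a batch."""
    observed: dict[str, set[type]] = {}
    for record in records:
        for name, value in record.items():
            observed.setdefault(name, set()).add(type(value))
    return observed


def detect_drift(expected: dict[str, type], records: list[dict[str, Any]]) -> list[str]:
    """Return human-readable drift findings for a reviewer to approve or reject."""
    observed = infer_schema(records)
    findings = []
    for name, expected_type in expected.items():
        if name not in observed:
            findings.append(f"missing field: {name}")
        # Nulls are tolerated; any other unexpected type counts as drift.
        elif observed[name] - {expected_type, type(None)}:
            seen = sorted(t.__name__ for t in observed[name])
            findings.append(
                f"type change in {name}: expected {expected_type.__name__}, saw {seen}"
            )
    for name in sorted(observed.keys() - expected.keys()):
        findings.append(f"new field: {name}")
    return findings


batch = [
    {"order_id": "A1", "amount": 20.0, "discount_code": "SPRING"},
    {"order_id": "A2", "amount": 15.5, "discount_code": 10, "channel": "app"},
]
print(detect_drift(EXPECTED_ORDER_SCHEMA, batch))
```

A generated regression test could then assert that `detect_drift` returns an empty list for each new batch, so a breaking change fails loudly before it reaches the revenue dashboards.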

2. Real-Time and Streaming Analytics at Scale

Streaming analytics keeps moving from “nice to have” to “table stakes.” Teams want decisions in seconds, not days. Fraud detection needs immediate signals. Fleet routing needs live telemetry. Personalization needs fresh events. So event streaming becomes the backbone of modern analytics.

Streaming at scale also changes architecture choices. Teams shift from batch ETL to continuous processing. They build pipelines that handle late events and add exactly-once semantics where it matters. They also separate ingestion from consumption, so new use cases do not break old ones.
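Handling late events and duplicates is easier to see in code. The sketch below assumes a hypothetical `WindowedCounter` that counts events in fixed-size tumbling windows keyed by event time, uses a watermark equal to the latest event time minus an allowed lateness, and de-duplicates by event ID. De-duplication gives idempotent, effectively-once counting inside one process; it is not a substitute for the transactional guarantees a stream processor or Kafka itself provides.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class WindowedCounter:
    """Sketch of event-time tumbling windows with a watermark and de-duplication."""

    window_seconds: int
    allowed_lateness: int
    counts: dict[int, int] = field(default_factory=lambda: defaultdict(int))
    # Unbounded here for simplicity; real systems expire IDs as the watermark advances.
    seen_ids: set[str] = field(default_factory=set)
    max_event_time: int = 0
    dropped_late: int = 0

    @property
    def watermark(self) -> int:
        return self.max_event_time - self.allowed_lateness

    def process(self, event_id: str, event_time: int) -> None:
        if event_id in self.seen_ids:
            return  # duplicate delivery (e.g. a producer retry): count it only once
        if event_time < self.watermark:
            # Too late for live results; a real pipeline would route it to a replay path.
            self.dropped_late += 1
            return
        self.seen_ids.add(event_id)
        window_start = event_time - event_time % self.window_seconds
        self.counts[window_start] += 1
        self.max_event_time = max(self.max_event_time, event_time)


counter = WindowedCounter(window_seconds=60, allowed_lateness=30)
for eid, t in [("a", 10), ("b", 70), ("a", 10), ("c", 50), ("d", 130), ("e", 55)]:
    counter.process(eid, t)
print(dict(counter.counts), counter.dropped_late)
```

Here event "c" arrives out of order but within the allowed lateness, so it still lands in the first window, while event "e" arrives after the watermark has moved past it and is set aside.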

Apache Kafka shows how mainstream this has become: according to the Apache Kafka project, more than 80% of all Fortune 100 companies use it.

Practical Design Moves

- Model streams as products with clear ownership.

- Use schemas and compatibility rules to avoid breakage.

- Design “replay” paths so you can reprocess safely.
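Compatibility rules can be checked before a producer ships a new schema version. The function below is a simplified, hypothetical backward-compatibility check, in the sense schema registries use the term: consumers on the new schema must still read data written with the old one. Real registries (for Avro or Protobuf, for example) apply more rules, such as type promotions, so treat this as a sketch.

```python
from typing import Any

# Field name -> {"type": <type name>, optional "default": <value>}
Schema = dict[str, dict[str, Any]]


def backward_compatible(old: Schema, new: Schema) -> list[str]:
    """Return reasons why readers on `new` could fail on data written with `old`.

    Simplified rules:
    - a field added in `new` must declare a default, because old records lack it;
    - a field present in both schemas must keep the same type.
    Removing a field is allowed here: new readers simply ignore it.
    """
    problems = []
    for name, spec in new.items():
        if name not in old:
            if "default" not in spec:
                problems.append(f"added field '{name}' has no default")
        elif old[name]["type"] != spec["type"]:
            problems.append(
                f"field '{name}' changed type {old[name]['type']} -> {spec['type']}"
            )
    return problems


old = {"card_id": {"type": "string"}, "amount": {"type": "double"}}
new = {
    "card_id": {"type": "string"},
    "amount": {"type": "double"},
    "device_id": {"type": "string", "default": ""},
}
print(backward_compatible(old, new))  # an empty list means the change is safe
```

Running a check like this in CI turns "avoid breakage" from a convention into a gate that blocks incompatible schema changes.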

Concrete Use Case

A bank can stream card swipes, device fingerprints, and location hints. A streaming job can score risk and trigger step-up verification. Next, the system can write clean features into a feature store for model training. That loop improves both real-time decisions and future model accuracy.

3. Lakehouse – Unified Data Platform

Lakehouse design keeps erasing the line between data lakes and warehouses. Teams want one storage layer that supports analytics, BI, and ML. They also want governance and performance. So platforms blend open table formats, strong metadata, and warehouse-like query engines.

This unification reduces duplication. It also reduces “data copy sprawl.” Instead of replicating the same dataset into multiple systems, teams use one set of governed tables. Then they provide different compute paths for different workloads. That approach simplifies security reviews and speeds up onboarding.

Where Lakehouse Helps Most

- Shared KPIs that need one source of truth.

- ML training that needs large historical datasets.

- Ad-hoc exploration that must stay cost-controlled.

Example Pattern

A retailer can store clickstream, inventory, and pricing in unified tables. Analysts can run BI queries on the same data that powers demand forecasting. Engineers can also expose curated marts for specific teams. Everyone reads from consistent definitions, so debates shift from “which table is right” to “what action should we take.”

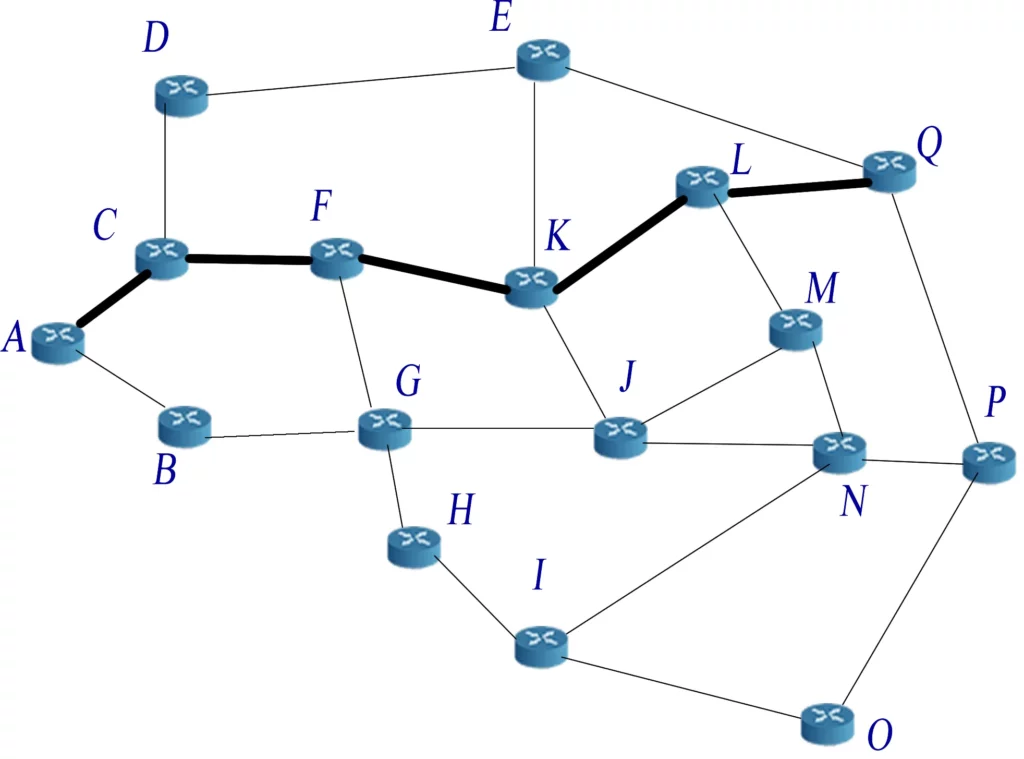

4. Data Fabric and Data Mesh

Data fabric and data mesh both attack fragmentation. They just do it in different ways. Data fabric connects distributed sources through metadata, catalogs, and policy. Data mesh shifts ownership to domain teams, so each domain publishes “data products” with clear contracts.

These approaches often work best together. A mesh can define ownership and accountability. A fabric can provide common tooling for discovery, lineage, and access control. That mix helps large organizations scale without forcing every decision through a central team.

Signals That You Need This

- Teams rebuild the same datasets in parallel.

- IT becomes a bottleneck for every new report.

- Data access tickets grow faster than delivery capacity.

Example Pattern

A marketplace can treat “Payments” and “Logistics” as separate domains. Payments publishes “settlement events” with strict schema rules. Logistics publishes “delivery milestones” with agreed definitions. A shared fabric layer makes both discoverable and governed. Then BI and ML teams can combine them without guesswork.
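A data contract like the Payments domain's "settlement events" can be enforced as an executable check at publish time. The sketch below assumes a hypothetical contract: the field names, currencies, and statuses are illustrative, not taken from any real system. It returns every violation at once so the producer sees all problems in a single run.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class FieldRule:
    type_: type
    required: bool = True
    allowed: Optional[frozenset] = None  # optional enumeration of valid values


# Hypothetical contract the Payments domain might publish for "settlement events".
SETTLEMENT_CONTRACT: dict[str, FieldRule] = {
    "settlement_id": FieldRule(str),
    "merchant_id": FieldRule(str),
    "amount_minor": FieldRule(int),  # amount in minor units, e.g. cents
    "currency": FieldRule(str, allowed=frozenset({"USD", "EUR", "VND"})),
    "status": FieldRule(str, allowed=frozenset({"PENDING", "SETTLED", "FAILED"})),
    "note": FieldRule(str, required=False),
}


def violations(record: dict[str, Any], contract: dict[str, FieldRule]) -> list[str]:
    """List every way a record breaks the contract (empty list means valid)."""
    errors = []
    for name, rule in contract.items():
        if name not in record:
            if rule.required:
                errors.append(f"{name}: missing")
            continue
        value = record[name]
        if not isinstance(value, rule.type_):
            errors.append(f"{name}: expected {rule.type_.__name__}")
        elif rule.allowed is not None and value not in rule.allowed:
            errors.append(f"{name}: {value!r} not allowed")
    # Undeclared fields are rejected so the contract stays the single definition.
    for name in sorted(record.keys() - contract.keys()):
        errors.append(f"{name}: not in contract")
    return errors
```

Because consumers in BI and ML can rely on these rules, they can join settlement events with other domains' products without re-validating the data themselves.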

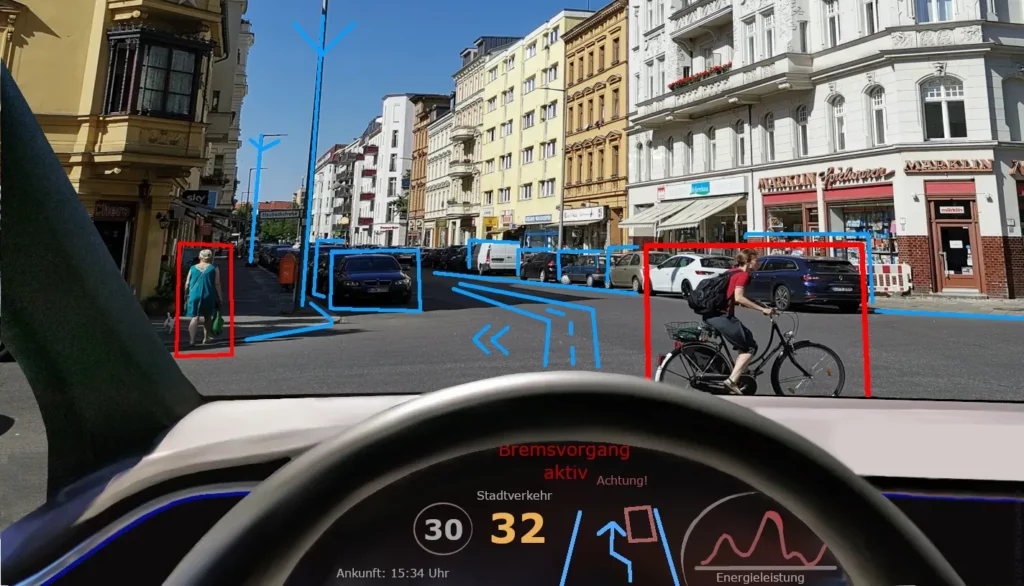

5. Edge Analytics and Physical AI

Edge analytics pushes compute closer to where data is created. That matters when latency costs money or compromises safety. Factories need instant anomaly detection. Vehicles need rapid perception and control loops. Stores need fast video analytics for shrink prevention. So teams process data on gateways, devices, and local clusters, then sync summaries to the cloud.

Spending trends support this shift. IDC research, as covered by Computer Weekly, projects that global spending on edge computing services will reach $380bn by 2028.

How to Build It Safely

- Filter and compress at the edge to cut bandwidth waste.

- Send only “decision-grade” signals to the cloud.

- Design offline-first logic for unstable networks.
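The three rules above can be combined into one small edge component. This is a hypothetical sketch: `EdgeSummarizer`, the alert threshold, and the message shapes are assumptions, and `send` stands in for whatever uplink your gateway uses. It forwards alerts and periodic summaries instead of raw readings, and it keeps an ordered, bounded outbox so messages wait while the network is down.

```python
from collections import deque
from statistics import mean
from typing import Callable


class EdgeSummarizer:
    """Hypothetical edge-side helper: summarize locally, forward only compact signals."""

    def __init__(self, batch_size: int, alert_threshold: float, max_queue: int = 1000):
        self.batch_size = batch_size
        self.alert_threshold = alert_threshold
        self.batch: list[float] = []
        # Bounded outbox: during a long outage the oldest messages are dropped first.
        self.outbox: deque = deque(maxlen=max_queue)

    def ingest(self, reading: float) -> None:
        if reading > self.alert_threshold:
            # Decision-grade signal: queue an alert right away instead of raw data.
            self.outbox.append({"kind": "alert", "value": reading})
        self.batch.append(reading)
        if len(self.batch) == self.batch_size:
            # Replace a full batch of raw readings with one compact summary.
            self.outbox.append({
                "kind": "summary",
                "count": len(self.batch),
                "min": min(self.batch),
                "max": max(self.batch),
                "mean": round(mean(self.batch), 3),
            })
            self.batch.clear()

    def flush(self, send: Callable[[dict], bool]) -> int:
        """Send queued messages in order; stop at the first failure and retry later."""
        sent = 0
        while self.outbox:
            if not send(self.outbox[0]):
                break  # still offline: keep the message for the next attempt
            self.outbox.popleft()
            sent += 1
        return sent
```

Calling `flush` on a timer means the component behaves the same whether the uplink is up or down; only the delivery time changes.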

Example in Smart Manufacturing

A plant can run vision models on an edge box near the assembly line. The box can detect defects in near real time. It can also log feature vectors and alerts. Later, a central team can retrain models with that labeled feedback. This loop improves yield while keeping latency low.

6. Data Observability

Data observability turns data reliability into an engineering discipline. Teams track freshness, volume shifts, schema drift, and lineage breaks. They also alert on anomalies before dashboards fail. This matters more as AI systems consume more data. Models can amplify errors fast. So teams need early warning signals.

Quality issues also cost real money. Gartner research estimates that poor data quality costs organizations an average of $12.9 million a year.

What Observability Platforms Watch

- Freshness checks for critical datasets.

- Schema change detection with impact analysis.

- Distribution drift for key metrics and features.

Example Workflow

A subscription business can monitor its “active_user_events” stream. If event volume drops, the system can trace lineage to the last deployment. It can then identify a broken client SDK release. The team fixes it before revenue and retention reports go wrong. That saves time and avoids bad decisions.
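The freshness and volume checks in this workflow reduce to simple rules. The sketch below assumes a hypothetical history of daily event counts and flags a drop with a z-score against that history. The threshold of 3 is an illustrative default, and tracing the cause through lineage is left to your catalog or observability tool.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def is_stale(last_loaded_at: datetime, max_age: timedelta, now: datetime) -> bool:
    """Freshness check: has the dataset gone longer than max_age without new data?"""
    return now - last_loaded_at > max_age


def volume_anomaly(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's count if it sits more than z_threshold sample std devs from history."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # perfectly flat history: any change is unusual
    return abs(today - mu) / sigma > z_threshold


history = [1000, 1020, 980, 1010, 990]
print(volume_anomaly(history, today=400))  # a sharp drop, e.g. after a broken SDK release
now = datetime(2025, 1, 1, 12, tzinfo=timezone.utc)
print(is_stale(now - timedelta(hours=3), max_age=timedelta(hours=1), now=now))
```

A z-score is only one choice; seasonal data (weekday versus weekend traffic, for instance) usually needs a baseline that compares like with like.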

7. Geopatriation and Sovereign Data

Geopatriation moves workloads and datasets to reduce geopolitical and legal risk. Sovereign data strategies focus on residency, control, and auditable access. This trend grows because laws expand and enforcement tightens. Cross-border transfers also create operational friction. So many firms choose regional clouds, sovereign cloud offerings, or local infrastructure for sensitive workloads.

Global policy pressure keeps rising. A World Bank post notes that 167 countries now have data protection legislation.

Data localization also shapes architecture choices. OECD research reports that by early 2023, 100 data localization measures were in place across 40 countries.

What This Means for Architecture

- Design for region-aware storage and processing.

- Use policy-driven access controls with strong audit trails.

- Plan for egress limits and cross-region replication rules.

Example for Regulated Industries

A healthcare provider can keep identifiable patient data in a local region. It can then send de-identified aggregates to a global analytics layer. A federated query layer can still answer cross-region questions. This setup supports innovation while keeping compliance manageable.
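The healthcare pattern above can be sketched as two steps: route identifiable records to their home region, then export only aggregated counts. The region names and residency map are hypothetical. The small-cell suppression shown here (dropping groups below a minimum size) is one common safeguard, not a complete de-identification method or a compliance guarantee.

```python
from collections import Counter

# Hypothetical residency map: where identifiable records for each country must live.
HOME_REGION = {"VN": "ap-southeast", "DE": "eu-central", "FR": "eu-central"}


def storage_region(country: str) -> str:
    """Route identifiable records to their home region; fail closed for unknown countries."""
    if country not in HOME_REGION:
        raise ValueError(f"no residency rule for {country!r}")
    return HOME_REGION[country]


def deidentified_counts(records: list[dict], group_by: str, min_cell: int = 10) -> dict:
    """Aggregate inside the region and suppress small groups before export.

    Only counts leave the region; identifiers never do. Groups smaller than
    min_cell are dropped because tiny counts can help re-identify individuals.
    """
    counts = Counter(r[group_by] for r in records)
    return {key: n for key, n in counts.items() if n >= min_cell}


records = [{"patient_id": f"p{i}", "diagnosis": "flu"} for i in range(12)]
records += [{"patient_id": "p98", "diagnosis": "rare"}, {"patient_id": "p99", "diagnosis": "rare"}]
print(deidentified_counts(records, "diagnosis"))  # the two-person "rare" group is withheld
```

Failing closed on an unknown country is a deliberate choice: a missing residency rule should stop the write rather than silently default to a global region.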

8. Generative Business Intelligence (GenBI)

GenBI changes how people ask questions of data. Users stop relying on complex SQL for every insight. They type questions in natural language. The system then generates queries, builds charts, and explains results. This boosts access for non-technical teams. It also speeds up analysis for experts.

Adoption momentum comes from broader AI usage. McKinsey reports that 65 percent of respondents say their organizations use generative AI.

What Good GenBI Looks Like

- It cites the dataset and definition behind each metric.

- It shows the generated query for transparency.

- It blocks unsafe data access by policy, not by trust.
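The three properties above can be enforced by checking a model's proposed query before it runs. This sketch assumes the GenBI model returns a structured `QueryPlan` (a hypothetical shape) and that access rules live in a role-to-columns policy. Anything not explicitly allowed is denied, and approved answers carry their source and generated SQL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryPlan:
    """Hypothetical structured output from a GenBI model, produced before any SQL runs."""

    dataset: str
    metric: str
    columns: tuple[str, ...]
    sql: str


# Hypothetical policy: columns each (role, dataset) pair may read.
POLICY: dict[tuple[str, str], set[str]] = {
    ("sales", "accounts"): {"segment", "arr", "renewal_date", "seats_used"},
}


def authorize(role: str, plan: QueryPlan) -> dict:
    """Deny by default; approved answers cite their source and expose the query."""
    allowed = POLICY.get((role, plan.dataset), set())
    blocked = sorted(set(plan.columns) - allowed)
    if blocked:
        return {"status": "denied", "blocked_columns": blocked}
    return {
        "status": "ok",
        "source": f"{plan.dataset}.{plan.metric}",  # cite dataset and metric definition
        "sql": plan.sql,  # show the generated query for transparency
    }


plan = QueryPlan(
    dataset="accounts",
    metric="net_expansion",
    columns=("segment", "arr"),
    sql="SELECT segment, SUM(arr) FROM accounts GROUP BY segment",
)
print(authorize("sales", plan))
```

Checking a structured plan rather than free-form SQL text keeps the policy check simple; if your tool only emits SQL, you would need a proper SQL parser to extract the referenced columns.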

Example for Revenue Teams

A sales leader can ask, “Which segment shows the strongest expansion signals?” GenBI can combine usage events, renewal history, and product telemetry. It can then surface a ranked list with explanations. Next, the leader can drill into drivers and share a narrative with the team. That flow turns data into action faster.

9. Confidential Computing in Big Data

Confidential computing protects data while it is in use. It relies on hardware-based trusted execution environments (TEEs). Data is decrypted only inside the isolated TEE, so the host operating system, hypervisor, and cloud operator cannot read it even during processing. This helps when you must analyze sensitive data on shared infrastructure. It also helps with cross-company collaboration, where partners do not want to expose raw records.

Momentum keeps building. Gartner predicts more than 75% of operations processed in untrusted infrastructure will be secured in-use by confidential computing by 2029.

Where It Adds the Most Value

- Joint analytics across competitors with strict boundaries.

- Cloud analytics for regulated identifiers and secrets.

- Secure ML training on sensitive customer attributes.

Example Collaboration Model

Several banks can collaborate on fraud pattern detection. Each bank contributes signals in a protected runtime. The computation produces shared risk indicators. No bank sees another bank’s raw customer data. This approach improves fraud defenses while protecting confidentiality.

FAQs about Big Data Trends

1. How Is AI Influencing Big Data Trends?

AI changes big data trends by reshaping the full lifecycle. It helps teams ingest data faster. It also improves data quality checks with smarter anomaly detection. Next, it speeds up analysis with natural language interfaces. Then it pushes new governance needs, because AI can generate and remix data at scale.

AI also forces better metadata discipline. Teams need trusted definitions. They need lineage they can explain. They need access policies that reflect risk. So AI does not replace good data engineering. Instead, it raises the bar for it.

Finally, AI changes what “value” means. A dashboard often describes the past. AI systems can recommend actions. That shift makes reliability and observability non-negotiable. It also makes real-time signals more important than ever.

2. Are Big Data Platforms Becoming More Expensive?

Costs can rise, but waste causes many surprises. Teams often pay for duplicated datasets, idle compute, and ungoverned experimentation. They also pay for emergency fixes when pipelines break. So platform cost reflects both technology and process.

Cloud spending signals the scale of the market. Gartner forecasts worldwide public cloud end-user spending at $723.4 billion in 2025.

Teams can control costs with a few habits: set data retention rules, tier storage, schedule jobs around demand, and adopt FinOps practices for data workloads. Most importantly, they can standardize metrics and tables so teams stop rebuilding the same thing.
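Retention and storage tiering can be expressed as one age-based rule applied to each table partition. The thresholds and tier names below are hypothetical; the right values depend on how often each dataset is queried and what your retention obligations are.

```python
from datetime import date

# Hypothetical thresholds; tune them to access patterns and compliance needs.
HOT_DAYS = 30
WARM_DAYS = 180
RETENTION_DAYS = 730


def storage_action(partition_date: date, today: date) -> str:
    """Decide where a daily partition should live, or whether it should be deleted."""
    age = (today - partition_date).days
    if age > RETENTION_DAYS:
        return "delete"  # past the retention window
    if age > WARM_DAYS:
        return "cold"  # rarely queried: cheapest storage class
    if age > HOT_DAYS:
        return "warm"
    return "hot"  # recent data that dashboards query constantly


today = date(2025, 6, 30)
for d in [date(2025, 6, 20), date(2025, 3, 1), date(2024, 6, 1), date(2022, 1, 1)]:
    print(d, storage_action(d, today))
```

Running this rule on a schedule, rather than relying on manual cleanup, is what keeps idle historical data from quietly dominating the storage bill.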

Big data trends now favor speed, simplicity, and trust. AI-native pipelines reduce busywork. Streaming analytics unlocks instant decisions. Lakehouse platforms cut duplication. Data fabric and data mesh reduce fragmentation. Edge analytics brings intelligence closer to where data is created. Data observability protects decision quality. Sovereign data strategies reduce geopolitical risk. GenBI makes insights easier to reach. Confidential computing strengthens collaboration without exposing secrets. Choose the trends that match your risk level and your business goals, then build step by step with clear ownership and strong governance.

Conclusion

Big data trends now force teams to rebuild pipelines, governance, and analytics at the same time. So smart leaders treat data work like product work. They set clear owners, standardize tools, and ship improvements in small steps.

As a leading software development company founded in early 2013 in Ho Chi Minh City, Vietnam, Designveloper turns those shifts into practical roadmaps. We help you build modern data stacks that fit your reality: we map your sources and design the lakehouse layer. We implement streaming where it matters. Then we add data quality, lineage, and access controls so teams can trust what they see.

Results matter, so we measure delivery, not hype. We have logged more than 200,000 working hours, and we keep quality high through repeatable engineering practices. Clients also rate us highly, including 4.9 (9) on Clutch, which reflects consistent delivery across real projects.

Our portfolio proves we can ship large, data-heavy products end to end. We built HANOI ON, launched on July 10, 2024, as a unified digital ecosystem that supports modern content discovery across channels. We also deliver platforms like Lumin, Joyn’it, and Swell & Switchboard, where performance, reliability, and user experience must work together. That same discipline helps when you roll out AI-native pipelines, GenBI, or confidential analytics that cannot leak sensitive data.

If you want a data platform that stays fast, trusted, and compliant, we can help. Tell us your goals and constraints. We will propose an architecture, an execution plan, and a realistic timeline. Then we will build, test, and iterate with your team until your data products drive decisions with confidence.