This blog explores key ethical issues in AI and their potential impact on your business. We highlight best practices to avoid these issues in AI development, such as ensuring fairness, protecting privacy, and maintaining transparency. Additionally, we discuss global AI regulations, including the EU AI Act, and how following these guidelines can help you create ethical AI systems that build trust and meet global standards.

ChatGPT had 1 million users within the first five days of its launch.

The massive success of ChatGPT has brought enormous attention to AI technology worldwide and changed the way we look at it. ChatGPT has not only set trends for artificial intelligence but also highlighted the transformative potential of AI in shaping businesses, as seen in the latest AI and machine learning trends.

As the world has recognized the potential of artificial intelligence, every business wants to integrate AI technology into its operations. This trend makes addressing ethical challenges in AI essential to ensure responsible adoption.

According to Forbes, businesses worldwide believe that artificial intelligence will help increase their overall productivity, and 72% of businesses worldwide have adopted AI for at least one business operation.

This growing confidence in AI highlights the importance of ensuring AI accountability in communications, particularly in critical sectors like telecommunications. Ensuring AI ethics in VoIP systems is crucial to responsibly maximizing AI benefits.

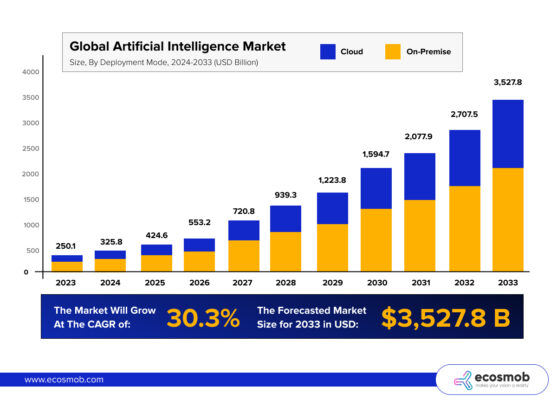

Furthermore, the size of the Artificial Intelligence market was estimated at USD 177 billion in 2023, with a projected compound annual growth rate (CAGR) of 36.8%.

AI helps businesses reduce human error in daily operations, analyze data, make faster data-driven decisions, automate repetitive tasks, and increase productivity.

But as the saying goes, every coin has two sides. While AI offers numerous benefits, what are its potential downsides?

Although integrating AI into business operations can give you a competitive advantage, mistakes can be highly costly in terms of business reputation, revenue growth, or even people’s lives. This wide adoption of AI also highlights the need to address problems with artificial intelligence, such as biases and accountability.

In this article, we will discuss AI ethics, the key ethical issues in AI, best practices for implementing AI ethics, and how following global AI regulations can help avoid these issues.

What Is AI Ethics?

AI ethics are like a guidebook for avoiding ethical issues with AI and ensuring responsible implementation of the technology. They tell developers or organizations how to approach the development and use of artificial intelligence technology responsibly while addressing important AI issues along the way.

They contain a set of principles that will help you implement AI technology safely and ethically in your business, mitigating AI ethical challenges in the process.

One notable example of AI ethics put into practice is the EU Artificial Intelligence Act (EU AI Act), introduced by the European Union. The EU AI Act contains guidelines for developing AI systems that meet safety standards.

Following the AI ethics principles in the EU AI Act can help you avoid AI ethical issues related to bias, transparency, and data privacy.

Let’s go deep into ethical issues in AI, including considerations for AI ethics in VoIP systems, and understand their impact on your business.

What Are the Ethical Issues of Artificial Intelligence?

Following AI ethics will help your business remain in good standing from a regulatory and reputational viewpoint while effectively addressing ethical problems with AI. Here are essential AI issues and facts that you should be aware of:

1) Bias in AI

Do you know how AI works? AI models are trained on data using Machine Learning algorithms. ML algorithms help AI recognize patterns in data, analyze them, and learn from them. This is how AI models build intelligence.

But what happens if the data is inaccurate or biased?

In 2018, it was reported that tech giant Amazon had developed an AI tool for hiring. The goal was to reduce the effort hiring managers spent sifting through applicant data and to help them make faster, more efficient hiring decisions.

However, because the data used to train it was biased, the tool was biased against women. When tested, it was found to favor male applicants over female candidates in its ranking scores. The case highlighted significant ethical challenges for AI, such as gender discrimination in automated decision-making.

2) Privacy in AI

AI models are trained on data to learn and build intelligence, but where does this data come from?

AI companies use both internal and external sources to build training data. Internal sources include data gathered from customers’ searches, histories, and purchases. External data is often bought from other businesses that collect it from their users, which is legal.

Reddit is one of the most famous examples of companies that sell user data.

Are you on Reddit? If so, your data might be sold and used to train tools like ChatGPT.

This can lead to significant problems with artificial intelligence, creating trust issues and growing concerns about data privacy.

3) Transparency in AI

Transparency in AI refers to being clear and open about how your AI model works, including the machine learning development process, the algorithms used, and the data sources used to train the algorithms.

AI is no longer only about chatbots where the use case is restricted to mere assistance. It’s being deployed across industries like healthcare, finance, and telecommunications, where AI accountability in communications plays a critical role in ensuring ethical practices.

However, as we have discussed, an AI system’s results depend on the data on which it was trained.

Can you blindly trust AI results in an industry like healthcare, where a small mistake can impact someone’s life? No.

Therefore, maintaining transparency in AI development is crucial to building trust and ensuring accountability throughout the development process.

4) AI and Jobs

When AI tools like ChatGPT were introduced to the world, many people perceived them as adversaries poised to replace humans by performing tasks with greater precision and at a lower cost.

According to a report by the World Economic Forum, AI is projected to displace 85 million jobs globally. This alarming statistic underscores the growing ethical concerns about artificial intelligence’s impact on employment.

The concern is valid in some sense, but history shows that many innovations eliminated specific job types while creating new ones.

Take an example: before the automobile revolution, people rode horses or hired horse-drawn carriages to travel. As automobiles spread, those jobs became largely irrelevant. At the same time, the innovation pushed people to adopt vehicles for taxi services, creating new jobs such as drivers.

With AI, yes, some operations will be automated, removing the need for human labor in those tasks. However, industries like telecommunications that explore Responsible AI in VoIP aim to ensure that automation creates opportunities rather than eliminating the need for human intervention. This also opens new opportunities for AI engineers, AI developers, and many other roles in the AI industry.

5) Security in AI

The rapid adoption of AI and ML worldwide has created a massive demand for data to train AI models. As the market responds to this surge in demand, data becomes increasingly valuable and expensive.

This data can include customers’ names, emails, purchase histories, card details, and bank details, which raises grave ethical concerns about data privacy and misuse.

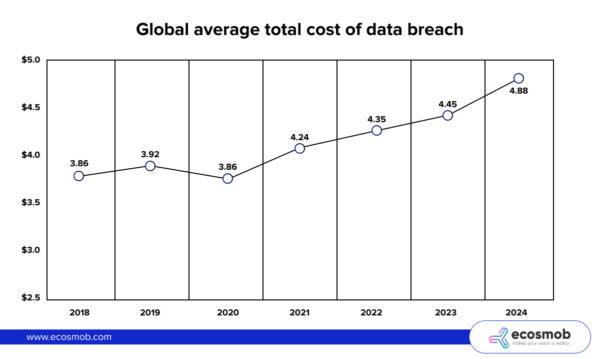

The global average cost of a data breach reached $4.88 million over the past year, and 74% of IT security professionals report that their organizations suffer significant impacts from AI-powered threats (Darktrace). This matters especially for sectors like telecommunications, where applying responsible AI in VoIP can help reduce these risks.

In 1999, Scott McNealy, then CEO of Sun Microsystems, famously told reporters, “You have zero privacy anyway. Get over it.”

So, how safe is your AI System? How can you keep your customers’ online privacy secure?

Let’s discuss it at length in the next section and understand the best practices you can implement in your AI development process to keep your customers’ online privacy safe and secure.

Top 5 Best Practices for Avoiding Ethical Issues in AI

The adoption of AI in business operations shows significant benefits for businesses. But at its worst, AI can harm your business branding and customer relationships. Here are some best practices to avoid ethical issues in AI.

1) Mitigating Bias in AI

Bias is one of the most common ethical issues seen in AI systems. To avoid it, you should monitor the data collection process and validate the training dataset before using it for AI model training.

To validate data, you can conduct regular audits of the training dataset to identify and correct any biased information. This is especially helpful in preventing AI-driven VoIP challenges related to communication biases in telecommunication systems. Implementing this practice will help you avoid bias issues in your AI systems.
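As a rough illustration of what a training-data audit might check, the sketch below reports how much of the dataset each group makes up and how often each group carries the positive label. The field names (`gender`, `hired`), the sample rows, and the 0.2 gap threshold are all hypothetical; a real audit would use your own schema and a threshold agreed with your compliance team.

```python
from collections import Counter, defaultdict


def audit_training_data(rows, group_key, label_key):
    """Return each group's share of the dataset and its positive-label rate."""
    counts = Counter()
    positives = defaultdict(int)
    for row in rows:
        group = row[group_key]
        counts[group] += 1
        if row[label_key] == 1:
            positives[group] += 1

    total = sum(counts.values())
    return {
        group: {"share": n / total, "positive_rate": positives[group] / n}
        for group, n in counts.items()
    }


def flag_imbalance(report, max_rate_gap=0.2):
    """Flag the dataset when positive-label rates differ by more than the gap."""
    rates = [stats["positive_rate"] for stats in report.values()]
    if not rates:
        return False
    return (max(rates) - min(rates)) > max_rate_gap


# Hypothetical hiring records, for illustration only.
sample_rows = [
    {"gender": "female", "hired": 0},
    {"gender": "female", "hired": 1},
    {"gender": "male", "hired": 1},
    {"gender": "male", "hired": 1},
    {"gender": "male", "hired": 1},
    {"gender": "male", "hired": 0},
]

report = audit_training_data(sample_rows, "gender", "hired")
needs_review = flag_imbalance(report)
```

A flagged dataset is a prompt for human review, not proof of bias; the gap may have a legitimate explanation that the audit cannot see.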

2) Protecting Privacy in AI

Be transparent about what data will be collected and how it will be used to train AI models. You should always obtain consent from the users before collecting and using their data to build a training dataset for AI model training.

This helps maintain your users’ privacy and builds their trust in your business.
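One simple way to honor consent in practice is to filter records before they ever reach the training pipeline. The sketch below assumes a hypothetical `UserRecord` type with a `consented_to_training` flag; your own data model and consent storage will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserRecord:
    """Hypothetical record shape; the consent flag is captured at collection time."""
    user_id: str
    text: str
    consented_to_training: bool


def select_consented(records):
    """Keep only records whose owners agreed to training use."""
    return [record for record in records if record.consented_to_training]


records = [
    UserRecord("u1", "call transcript A", True),
    UserRecord("u2", "call transcript B", False),
    UserRecord("u3", "call transcript C", True),
]

training_set = select_consented(records)
```

Filtering at the start of the pipeline, rather than later, keeps data without consent out of every downstream artifact.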

3) Ensuring Transparency in AI

To ensure transparency, you should maintain clear documentation of your AI systems. Explain how your AI systems work, which ML algorithms are being used, and what your training data sources are.

Implementing this practice in your AI development makes your work transparent. It also helps address AI-driven VoIP challenges, where transparency is crucial for maintaining trust in business communication.
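Documentation is easier to keep current when it lives next to the model as structured data. The following is a minimal sketch of a "model card" style record; the `ModelCard` class, its fields, and the example values are assumptions made for illustration, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical documentation record stored alongside a trained model."""
    model_name: str
    purpose: str
    algorithm: str
    training_data_sources: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card so it can be published or versioned."""
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="call-routing-classifier",
    purpose="Route inbound support calls to the right team",
    algorithm="gradient-boosted trees",
    training_data_sources=["internal call logs (with user consent)"],
    known_limitations=["Not evaluated on non-English calls"],
)

card_json = card.to_json()
```

Because the card is plain JSON, it can be reviewed in version control whenever the model or its data sources change.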

4) Addressing AI’s Impact on Jobs

Rather than focusing on job replacement by AI, you should invest in training your team for new roles needed for AI development. Build AI systems to enhance the capabilities of humans rather than replace them.

5) Strengthening Security in AI

To ensure the security of your AI systems, prioritize strong cybersecurity practices to protect them from data breaches and malicious attacks.

You should conduct regular security audits to identify and fix vulnerabilities in your AI systems. Encrypt sensitive data used in AI development and follow established security protocols.
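Encryption itself is best left to a vetted cryptography library. As a complementary sketch that uses only Python's standard library, the example below pseudonymizes direct identifiers with a keyed hash (HMAC-SHA256) before records are used for training. This is pseudonymization, not encryption: the original values cannot be recovered from the output. The function names, field names, and sample record are hypothetical.

```python
import hashlib
import hmac
import secrets


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with a keyed SHA-256 digest (stable for the same key)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, key: bytes, sensitive_fields=("name", "email")) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    cleaned = dict(record)
    for field_name in sensitive_fields:
        if field_name in cleaned:
            cleaned[field_name] = pseudonymize(str(cleaned[field_name]), key)
    return cleaned


# In practice the key would come from a secrets manager, not be generated inline.
key = secrets.token_bytes(32)

raw = {"name": "Jane Doe", "email": "jane@example.com", "purchase_total": 42.5}
safe = scrub_record(raw, key)
```

Using a keyed hash rather than a plain hash means someone without the key cannot rebuild the mapping by hashing guessed names or emails.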

Global AI Regulations and Laws You Must Know

Violating AI regulations can result in heavy financial losses for your company and harm your business reputation. In industries like telecommunications, AI-driven VoIP challenges require specific regulatory compliance. Therefore, in this AI era, understanding global AI regulations and laws is not just a competitive advantage but a legal requirement.

Let’s explore some crucial regulations to keep your AI development ethical and compliant globally.

1. AI regulation in Europe

a) The EU AI Act

The European Commission proposed the EU AI Act in April 2021 to regulate the development and use of AI systems in Europe in line with AI ethics principles. It is one of the world’s earliest comprehensive AI regulations. It follows a risk-based approach, classifying AI systems into tiers that range from minimal risk to unacceptable risk, with high-risk systems facing the strictest obligations.

The EU AI Act requires AI development to maintain transparency and accountability. By following the European Union Artificial Intelligence Act (EU AI Act), you can build ethical AI systems that meet global standards.

b) The General Data Protection Regulation (GDPR) Law

To protect the privacy of people’s data, the European Union introduced the General Data Protection Regulation (GDPR). It is essential for telecom companies to adopt Responsible AI in VoIP practices to ensure customer data protection and compliance.

This law governs how personal data is collected and processed, including for AI model training. It applies to businesses that collect and process the personal information of people in the European Union.

If you need more information, read this article: What is GDPR? Compliance and conditions explained.

2. AI regulation in the United States

a) The AI Bill of Rights

The United States government proposed a non-binding framework, the Blueprint for an AI Bill of Rights, to guide the responsible development of AI systems. It consists of principles for developing and using AI systems while protecting individual rights and privacy, addressing key ethical concerns with artificial intelligence like bias, accountability, and data misuse.

This framework describes safeguards that should be in place for any AI system that can significantly affect individuals.

b) The Algorithmic Accountability Act

US lawmakers introduced the Algorithmic Accountability Act in 2022. The bill aimed to increase transparency around the algorithms and automated decision systems that companies use, and to hold companies accountable for their AI systems’ output.

c) The Automated Employment Decision Tools Law

This law, enacted in New York City, states that if your company uses AI-based hiring tools, you must conduct regular audits of those tools to check whether the results are biased for or against specific groups.

The Automated Employment Decision Tools (AEDT) law also requires that these bias audits be carried out yearly and that a summary of the results be published publicly.
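Bias audits of hiring tools commonly compare selection rates across groups using an impact ratio: each group's selection rate divided by the rate of the most-selected group. The sketch below shows that arithmetic under stated assumptions. The group names and counts are invented, and the 0.8 threshold is the widely cited four-fifths rule of thumb, used here only as an illustrative default rather than a requirement of any specific law.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (selected, total); returns group -> selection rate."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {group: 0.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def groups_below_threshold(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the chosen threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit counts: (candidates selected, candidates scored).
outcomes = {"group_a": (50, 100), "group_b": (30, 100)}

ratios = impact_ratios(outcomes)
flagged = groups_below_threshold(ratios)
```

Here `group_b` is selected at 30% against `group_a` at 50%, giving an impact ratio of 0.6, so it would be flagged for closer review.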

3. AI regulation in Asia

a) China

China’s Data Security Law took effect in September 2021 to standardize data handling activities, including collecting, storing, and transferring data, by companies operating in China. It was followed in November 2021 by the Personal Information Protection Law, China’s first national-level law dedicated to protecting personal information.

b) Japan

At the time this blog was written, Japan had no dedicated, binding AI regulation; instead, it regulates AI indirectly through existing laws.

In 2019, the Japanese government released the Social Principles of Human-Centric AI as guidelines for AI integration in society. These principles promote human-centric values and a commitment to accountability in AI development.

c) Singapore

In January 2019, the Singapore government launched the Model AI Governance Framework to guide organizations in Singapore. This voluntary framework helps businesses use and develop AI systems ethically and recommends responsible AI practices.

Breaking these laws and regulations may result in high fines, with penalties of up to 3% of your annual turnover or a fixed amount set by the applicable regulation, whichever is higher.

However, this is just the tip of the iceberg. Regulatory bodies can amend existing laws and introduce new regulations for AI technology, so the rules you must follow may change in the future.

In conclusion, artificial intelligence has incredible potential to revolutionize businesses and boost productivity, but its rapid growth also brings serious ethical challenges. Issues like bias, lack of transparency, data privacy concerns, and job impacts are ethical concerns with artificial intelligence that can’t be ignored if we want AI to truly benefit society.

Businesses can build trust and use AI responsibly by adopting ethical practices, such as following frameworks like the EU AI Act or creating their own guidelines. Addressing these challenges allows us to utilize AI’s power for good, ensuring it becomes a tool that drives progress and makes life better for everyone.

Want to ensure your AI is both powerful and ethical?

At Ecosmob, we provide AI/ML solutions that align with global standards.

Contact us today to learn how we can help you implement ethical AI with confidence.

FAQs

What are the key ethical issues in AI?

The key ethical issues in AI include bias, transparency, data privacy, and job displacement. These issues can impact your business branding and reputation if not handled carefully.

How can businesses avoid bias in AI systems?

You can avoid bias in your AI systems by regularly auditing and validating training data to identify and correct bias issues.

What is the EU AI Act?

The EU AI Act provides guidelines for the responsible development and use of AI systems, ensuring they are created and deployed ethically and safely. It applies to businesses involved in AI development to maintain transparency and global standards.

What is AI transparency and why is it important?

AI transparency refers to being open about how your AI systems work and the data used in AI model training. It’s crucial for building trust, especially in industries like healthcare and finance.

What are the best practices for developing ethical AI?

To develop ethical AI, you should audit data to reduce bias, ensure transparency in the training algorithms, and prioritize user consent for privacy. Also, clear documentation of AI systems and strong security measures are essential.