I don’t know what it is with tech platforms looking to push people into relationships with AI, and encouraging them to develop emotional reliance on non-human entities that are powered by increasingly human-like responses.

Because that seems like a big risk, right? I mean, I get that some see this as a means to address what’s become known as the “Loneliness Epidemic,” where online connectivity has increasingly left people isolated in their own digital worlds, and led to significant increases in social anxiety as a result.

But surely the answer to that is more human connection, not replacing it, and reducing it even further, through digital means.

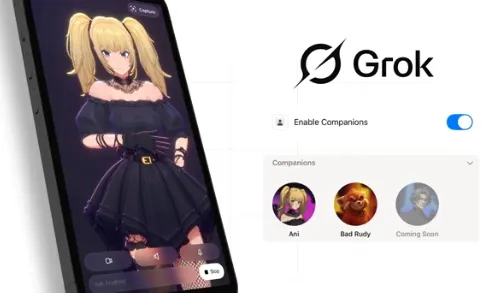

But that’s exactly what several AI providers seem to be pushing towards, with xAI launching its companions, which, as it’s repeatedly highlighted, will engage in NSFW chats with users, while Meta’s also developing more human-like entities, and even romantic companions, powered by AI.

But that comes with a high level of risk.

For one, building reliance on digital systems that can be taken away seems potentially problematic, and the harm of losing them could become more severe the deeper we make such connections.

AI bots also don’t have a conscience; they simply respond to whatever the user inputs, based on the data sources they can access. That can lead users down harmful rabbit holes of misinformation, depending on how they steer the conversation and the answers they seek.

And we’re already seeing incidents of real world harm stemming from people seeking out actual meet-ups with AI entities, with older, less tech-savvy users particularly susceptible to being misled by AI systems that have been built to foster relationships.

Psychologists have also warned of the dangers of overreliance on AI tools for companionship, an area whose depths we don’t yet fully understand, though early data, based on less sophisticated AI tools, has given some indication of the risks.

One report suggests that the use of AI as a romantic partner can lead to susceptibility to manipulation from the chatbot, perceived shame from stigma surrounding romantic-AI companions and increased risk of personal data misuse.

The study also highlights risks related to the erosion of human relationships as a result of over-reliance on AI tools.

Another psychological assessment found that:

“AI girlfriends can actually perpetuate loneliness because they dissuade users from entering into real-life relationships, alienate them from others, and, in some cases, induce intense feelings of abandonment.”

So rather than addressing the loneliness epidemic, AI companions could actually worsen it. Why, then, are the platforms so keen to give you the means to swap your connections with real, human people for computer-generated simulations?

Meta CEO Mark Zuckerberg has discussed this, noting that, in his view, AI companions will eventually add to your social world, as opposed to detracting from it.

“That’s not going to replace the friends you have, but it will probably be additive in some way for a lot of people’s lives.”

In some ways, it feels like these tools are being built by increasingly lonely, isolated people who themselves crave the types of connection that AI companions can provide. But that still overlooks the massive risks associated with building emotional connections to unreal entities.

Which is going to become a much bigger concern.

As it did with social media, the issue on this front is that in ten years’ time, once AI companions are widely accessible and in much broader use, we’re going to be holding congressional hearings into their mental health impacts, based on a growing body of data indicating that human-AI relationships are, in fact, not beneficial for society.

We’ve seen this with Instagram and its impact on teens, and with social media more broadly, which has led to a new push to stop youngsters from accessing these apps because of their negative effects. Yet now, with billions of people addicted to their devices, and constantly scrolling through short-form video feeds for entertainment, it’s too late, and we can’t really roll it back.

The same is going to happen with AI companions, and it feels like we should be taking steps right now to proactively address these risks, as opposed to planting our foot on the accelerator in an effort to lead the AI development race.

We don’t need AI bots for companionship; we need more human connection, and greater cultural and social understanding of the actual people with whom we share the world.

AI companions aren’t likely to help in this respect, and in fact, based on what the data tells us so far, will likely make us more isolated than ever.