You may have heard of FAISS, but do you know what it is mainly used for and how it works? In this article, we’ll offer you all the fundamentals to learn about this library. Our guide will help you answer the big question: What is FAISS, and how can you use the library for effective similarity search? Keep reading and exploring!

What is FAISS (Facebook AI Similarity Search)?

FAISS stands for Facebook AI Similarity Search. It’s an open-source library developed by the Meta AI (formerly Facebook) team to support similarity searches within large datasets, which can contain millions or billions of vectors. FAISS offers a wide range of indexing choices (e.g., flat or hierarchical), supports ANN (Approximate Nearest Neighbor) search methods, and can use GPUs to accelerate indexing and search. Together, these features make similarity search faster and easier to run at scale.

Contrary to common thought, FAISS is not a full vector database. You can say it’s a super-fast indexing and searching engine that focuses only on finding embeddings most similar to a query vector. For this reason, it doesn’t handle other database tasks, like managing users or storing data persistently.

As a programming library, FAISS allows you to call its functions directly using supported languages like Python or C++. Besides, it operates on one computer (CPU or GPU) by default. So if your data is larger than what a machine can process, FAISS can’t automatically spread data and search work across various machines. In this case, we advise you to create distributed systems on top of this library to handle your massive data volume efficiently.

Key Features of FAISS

FAISS is a popular library for high-speed and memory-efficient searching, although its market share now is quite modest (0.01%). It proves powerful with the core capabilities as follows:

- Speed: FAISS mostly focuses on ANN search instead of checking every vector. Further, it uses a variety of indexing techniques (“data structures”) and optimized algorithms to accelerate similarity search, regardless of your data size.

- Accuracy: Compared with brute-force (exhaustive) search, which compares the query against every stored vector, the ANN methods of FAISS can miss some of the true nearest neighbors. However, FAISS lets you balance accuracy, speed, and memory usage through its diverse indexing methods. For instance, Flat indexing returns exact results but works best for small datasets. Other index types, like IVF or PQ, can be tuned toward faster, less precise results or slower, more accurate ones, based on your requirements.

- Versatility: FAISS offers different distance metrics, like Euclidean or inner-product, to customize the search process to your ultimate demands. Further, its high-speed and memory-efficient capabilities make it applicable in various use cases, like information retrieval, image and video search, anomaly detection, data deduplication, and more.
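The accuracy trade-off described above is usually measured as recall@k: the share of true nearest neighbors (from an exact search) that the approximate search also returns. Below is a minimal sketch in plain Python. The function name `recall_at_k` and the result IDs are made up for illustration and are not part of FAISS.

```python
def recall_at_k(exact_ids, approx_ids):
    """Fraction of true nearest neighbors that the approximate search also returned."""
    hits = 0
    total = 0
    for exact, approx in zip(exact_ids, approx_ids):
        hits += len(set(exact) & set(approx))   # order within the top-k is ignored
        total += len(exact)
    return hits / total if total else 0.0

# Hypothetical result IDs for two queries with k = 4.
exact_ids = [[7, 2, 9, 4], [1, 3, 5, 8]]    # e.g. what a Flat index would return
approx_ids = [[7, 9, 2, 6], [1, 3, 5, 8]]   # e.g. what an IVF or HNSW index might return

recall = recall_at_k(exact_ids, approx_ids)
print(f"recall@4 = {recall:.3f}")
```

Comparing an approximate index against a Flat index on a sample of queries in this way is a simple check before committing to index parameters.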

What is an Index in FAISS?

Before diving into the inner mechanism behind FAISS, we want to mention a very important concept in FAISS – that’s an index.

In FAISS, an index is a specialized data structure that stores embeddings and defines how the vectors are searched. Think of it as an organization method in a library to help a librarian look for books he wants more quickly and efficiently.

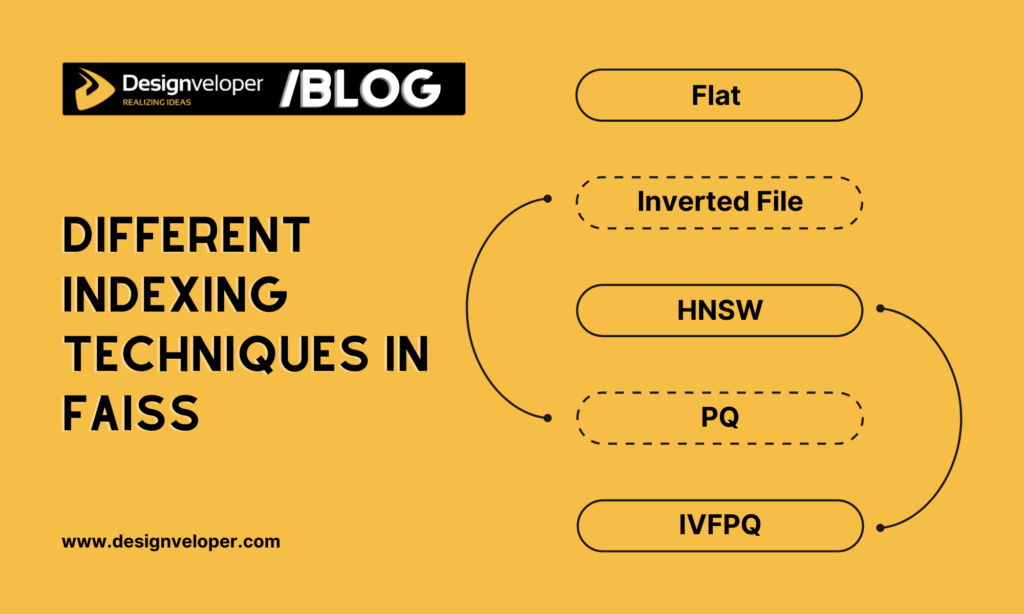

FAISS supports a wide range of indexing structures that we will explain further in the next section. They include Flat, Inverted File, Product Quantization, and Hierarchical Navigable Small World (HNSW).

You can build a FAISS index with appropriate parameters (e.g., vector dimensionality or number of clusters). After you add dense vectors (which represent the meaning and relationships of your data) to the index, use `index.search(query, k)` to look for the `k` nearest neighbors and their distances.

Different Indexing Techniques of FAISS

Below are several common indexing structures FAISS supports. Each comes with different features and use cases. Keep reading to see what they are, how to build one in FAISS, and when to use them.

Flat Index

FAISS is mainly used for ANN search, where the library looks through a promising subset of vectors instead of checking every one. It still supports Flat indexes (brute-force search) for finding exact nearest neighbors when needed. This search method gives the highest accuracy and is most practical for small datasets.

Flat indexes store every embedding as-is, without compression. They use linear search, calculating the distance between a query vector and each stored embedding to find the nearest neighbors. There are two popular flat indexing structures in FAISS. `IndexFlatL2` ranks vectors by Euclidean (straight-line) distance between two points. Meanwhile, `IndexFlatIP` uses the inner product, which for normalized vectors measures how much two embeddings point in the same direction.

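As Flat indexes check all the vectors, they return exact results but, unsurprisingly, perform more slowly than ANN searches. So, Flat indexes are best suited to small datasets. Around 100K vectors is a reasonable rule of thumb, though the practical limit depends on your hardware, vector dimensionality, and latency budget.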
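To make the brute-force idea concrete, here is a minimal plain-Python sketch of what a flat search does conceptually. It is not FAISS code; `flat_search` and the sample vectors are illustrative. It uses squared Euclidean distance, which gives the same ranking as the plain distance.

```python
def squared_l2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def inner_product(a, b):
    return sum(x * y for x, y in zip(a, b))

def flat_search(vectors, query, k, metric="l2"):
    """Score every stored vector against the query and keep the k best."""
    if metric == "l2":
        scored = sorted((squared_l2(v, query), i) for i, v in enumerate(vectors))
    else:
        # Inner product: larger means more similar, so sort descending.
        scored = sorted(((inner_product(v, query), i) for i, v in enumerate(vectors)),
                        reverse=True)
    return scored[:k]

vectors = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0]]
query = [1.0, 0.0]

l2_hits = flat_search(vectors, query, k=2, metric="l2")
ip_hits = flat_search(vectors, query, k=2, metric="ip")
print("L2:", l2_hits)
print("IP:", ip_hits)
```

Note how the two metrics disagree here: the inner product favors the long vector `[3.0, 3.0]`, while L2 favors the exact match. If you want inner product to behave like cosine similarity, normalize the vectors first.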

Inverted File Index

In FAISS, Inverted File (IVF) indexes group vectors into clusters or buckets so that a search scans only the few clusters closest to a query vector instead of the whole dataset. This reduces the number of comparisons significantly and accelerates similarity search.

IVF uses different algorithms for clustering, indexing, and searching.

- Quantization: Use K-means clustering to split the entire vector space into different clusters (also called “Voronoi cells”). Each cluster has a representative point (“centroid”) and the vectors are stored in a bucket whose centroid they’re closest to.

- Inverted File Structure: FAISS stores a list of vectors inside each cluster.

- Search Process: When a user sends a query, FAISS looks for the centroids most similar to the query vector (the `nprobe` parameter controls how many). Then, the library searches only inside the chosen cells and uses the index’s metric (e.g., Euclidean or inner product) to compute similarity.

IVF is ideal when you want to search large-scale datasets with less computation per query. To also save memory, combine it with a compression method such as PQ, as IVFPQ does.
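The three stages above can be sketched in plain Python as follows. This is a toy illustration of the idea, not FAISS's implementation: the tiny k-means, the helper names, and the random 2-D data are all assumptions made for the example.

```python
import random

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_centroids(centroids, v, n):
    """IDs of the n centroids closest to v, closest first."""
    return sorted(range(len(centroids)), key=lambda c: sq_dist(centroids[c], v))[:n]

def train_centroids(vectors, nlist, iters=10, seed=0):
    """Tiny k-means that returns nlist centroids."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, nlist)]
    for _ in range(iters):
        buckets = [[] for _ in range(nlist)]
        for v in vectors:
            buckets[nearest_centroids(centroids, v, 1)[0]].append(v)
        for c, members in enumerate(buckets):
            if members:  # keep the old centroid if a cluster goes empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def build_inverted_lists(vectors, centroids):
    """For each centroid, the IDs of the vectors assigned to it."""
    lists = [[] for _ in centroids]
    for i, v in enumerate(vectors):
        lists[nearest_centroids(centroids, v, 1)[0]].append(i)
    return lists

def ivf_search(vectors, centroids, lists, query, k, nprobe):
    """Scan only the nprobe closest clusters, then rank their members."""
    candidates = []
    for c in nearest_centroids(centroids, query, nprobe):
        candidates.extend(lists[c])
    return sorted(candidates, key=lambda i: sq_dist(vectors[i], query))[:k]

rng = random.Random(42)
vectors = [[rng.random(), rng.random()] for _ in range(200)]
centroids = train_centroids(vectors, nlist=8)
lists = build_inverted_lists(vectors, centroids)

query = [0.5, 0.5]
approx = ivf_search(vectors, centroids, lists, query, k=5, nprobe=2)
exact = ivf_search(vectors, centroids, lists, query, k=5, nprobe=8)  # probing all lists is exhaustive
print("nprobe=2:", approx)
print("nprobe=8:", exact)
```

Raising `nprobe` scans more clusters, which costs more time but makes it less likely that a true neighbor sitting just across a cluster boundary is missed.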

HNSW Index

Technically, HNSW (Hierarchical Navigable Small World) indexes create a multi-layer graph with connections and follow these links to search for the nearest neighbors faster.

In other words, these indices treat stored embeddings as “nodes” and connect those that are close to each other in the vector space. The top layer of the graph contains only a few nodes, so its links span embeddings that sit far from each other. Each lower layer contains more nodes with shorter links, and the bottom layer holds every embedding, connected to its near neighbors.

HNSW performs ANN search by starting from an entry node on the top layer and moving to whichever connected node is closer to the query vector. It then travels down through the lower layers, repeating this step, until it reaches the nearest neighbors on the bottom layer.

HNSW proves quite precise and scales smoothly for massive datasets. Therefore, it’s best for large-scale applications and high-dimensional data that require rapid retrieval.
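The navigation idea can be illustrated with a heavily simplified two-layer sketch in plain Python. This is not the real HNSW algorithm: the real one assigns layers randomly, builds links incrementally, and tracks a list of candidates rather than a single node. The function names, layer sizes, and data below are assumptions for illustration only.

```python
import random

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def knn_graph(points, ids, m):
    """Link each node in ids to its m closest nodes in ids (brute force, fine for a demo)."""
    graph = {i: set() for i in ids}
    for i in ids:
        closest = sorted((j for j in ids if j != i),
                         key=lambda j: sq_dist(points[i], points[j]))[:m]
        for j in closest:
            graph[i].add(j)
            graph[j].add(i)  # keep links bidirectional
    return graph

def greedy_walk(points, graph, start, query):
    """Hop to whichever neighbor is closer to the query until none is."""
    current = start
    while True:
        best = min(graph[current], key=lambda j: sq_dist(points[j], query), default=current)
        if sq_dist(points[best], query) >= sq_dist(points[current], query):
            return current
        current = best

rng = random.Random(0)
points = [[rng.random(), rng.random()] for _ in range(300)]
all_ids = list(range(len(points)))
top_ids = all_ids[::20]                          # sparse upper layer: every 20th node
top_layer = knn_graph(points, top_ids, m=3)
bottom_layer = knn_graph(points, all_ids, m=6)   # dense bottom layer: every node

query = [0.3, 0.7]
entry = greedy_walk(points, top_layer, top_ids[0], query)   # coarse hops across the space
found = greedy_walk(points, bottom_layer, entry, query)     # refine among close neighbors
true_nn = min(all_ids, key=lambda i: sq_dist(points[i], query))
print("found:", found, "true nearest:", true_nn)
```

A greedy walk can stop at a node that is close to, but not exactly, the true nearest neighbor. That is the approximate part of ANN search, and it is why graph indexes expose parameters that trade search effort for accuracy.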

Product Quantization

Product Quantization (PQ) is a common data compression method to store vectors efficiently in FAISS. It aims to reduce vector size for less space usage and quicker distance measures. Therefore, it works best if you have huge amounts of vectors, and storing all the embeddings in full precision consumes a lot of memory.

With PQ, FAISS divides each vector into chunks and uses k-means clustering to build a codebook of typical patterns (“centroids”) for each chunk position. PQ indices just record the ID of the centroid that each chunk is nearest to, rather than keeping every dimension of the original vector. For example, suppose each vector has 128 dimensions. Instead of recording all 128 numbers, PQ splits the vector into 8 small chunks of 16 dimensions each, labels each chunk with a representative code, and keeps just those 8 codes per vector. If each codebook holds 256 centroids, every code fits in one byte, so a vector stored as 128 32-bit floats shrinks from 512 bytes to 8 bytes.

When a user query arrives, PQ also cuts it in the same way and searches for the nearest codes in each codebook. This approach not only saves memory but also enables fast lookups, making it effective for sifting through very large datasets with limited memory. However, PQ can return less accurate results as it only focuses on the closest centroids instead of looking for the exact original embeddings. Therefore, you should adjust the number of chunks or centroids to balance accuracy, speed, and memory.
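The encoding step can be sketched in plain Python as below. This is a toy version under stated assumptions: small dimensions, a minimal k-means, and helper names (`train_pq`, `encode`, `decode`) invented for the example. FAISS's own implementation is far more optimized.

```python
import random

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=5, seed=0):
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[min(range(k), key=lambda c: sq_dist(centroids[c], p))].append(p)
        for c, members in enumerate(groups):
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def split(vector, m):
    size = len(vector) // m
    return [vector[i * size:(i + 1) * size] for i in range(m)]

def train_pq(vectors, m, ksub):
    """One codebook of ksub centroids per chunk position."""
    return [kmeans([split(v, m)[j] for v in vectors], ksub, seed=j) for j in range(m)]

def encode(vector, codebooks):
    """Replace each chunk with the ID of its nearest centroid."""
    return [min(range(len(cb)), key=lambda c: sq_dist(cb[c], chunk))
            for chunk, cb in zip(split(vector, len(codebooks)), codebooks)]

def decode(codes, codebooks):
    """Approximate reconstruction: concatenate the chosen centroids."""
    return [x for code, cb in zip(codes, codebooks) for x in cb[code]]

d, m, ksub = 16, 4, 8                     # toy sizes, chosen to run quickly
rng = random.Random(1)
vectors = [[rng.random() for _ in range(d)] for _ in range(200)]
codebooks = train_pq(vectors, m, ksub)

codes = encode(vectors[0], codebooks)
approx = decode(codes, codebooks)
print("codes:", codes)
print("reconstruction error:", round(sq_dist(vectors[0], approx), 4))

# Memory arithmetic for the 128-dimensional example in the text.
full_bytes = 128 * 4    # 128 float32 values
pq_bytes = 8 * 1        # 8 codes, one byte each when every codebook has 256 centroids
print(full_bytes, "bytes ->", pq_bytes, "bytes per vector")
```

The reconstruction error is the price of compression: more chunks or more centroids per codebook lower it, at the cost of more memory and longer training.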

IVFPQ

Inverted File with Product Quantization (IVFPQ) combines the two indexing structures we mentioned above.

It works by first dividing the dataset into clusters using a clustering algorithm like k-means. It then splits each embedding in each bucket into smaller chunks and assigns each chunk to the closest centroid in that chunk’s PQ codebook. The resulting IVFPQ index stores the cluster centroids and the quantized PQ codes for each embedding.

When a query comes, its vector will be divided and quantized in the same way. The system will compare the query to the cluster centroids to identify the most similar buckets and then measure the distance between the query’s compressed codes and the quantized vectors only in those chosen clusters to find the nearest neighbors.

How Does FAISS Work?

As we mentioned, FAISS is a high-speed search engine. Therefore, its workflow mainly revolves around indexing and finding vectors.

The first step to work with FAISS is to build an index, a specialized data structure that defines how to store and look for vectors. Choosing a suitable index type (like `IndexFlatL2` or `IndexIVFFlat`) depends largely on your dataset size, memory limits, and speed and accuracy requirements. For example, for small datasets, you can use `IndexFlatL2` to keep all vectors in memory and check every vector for exact results. For large datasets, an IVF index such as `IndexIVFFlat` is a better option. This indexing structure clusters vectors into subsets and lets FAISS search only the clusters most relevant to a query.

Once you’ve built the index, add vectors to it using `index.add(vectors)`. Note that FAISS is not a full vector database and does not include embedding models. Therefore, when building a complete system with FAISS as the core search engine, you generate the high-dimensional vectors yourself with an embedding model suited to your data type (for example, CLIP for images). When FAISS receives the numerical vectors, it organizes them according to the index type you chose (e.g., Flat, IVF, or HNSW). At query time, it uses that structure to find the stored vectors closest to the query vector, which is how semantically similar items are retrieved.

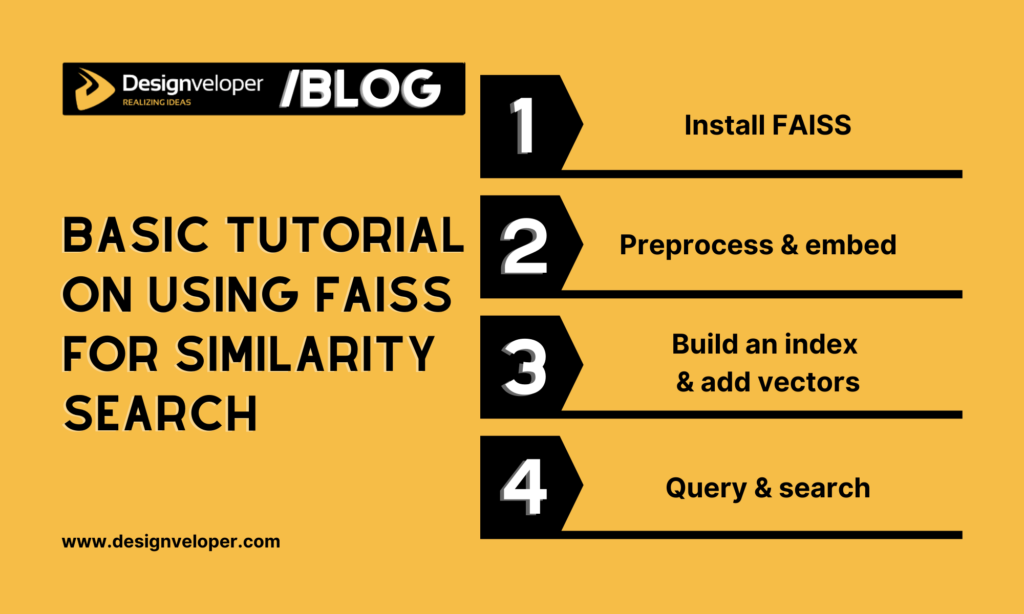

Basic Tutorial on Using FAISS for Similarity Search

So, how can you leverage FAISS for similarity search? The steps below offer you a basic guide to start with this open-source library:

Step 1: Install FAISS

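First, install FAISS with one of the following commands, depending on whether you’re using CUDA-capable GPU hardware or CPU only. Package availability on PyPI varies by platform, Python version, and CUDA version, particularly for the GPU build, so check the FAISS installation guide (which also covers conda packages) if a command fails: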

# Install FAISS for GPU

pip install faiss-gpu

# Install FAISS for CPU

pip install faiss-cpu

Step 2: Preprocess & Embed

Before using FAISS, you have to transform the raw data into embeddings. FAISS doesn’t have built-in embedding models, so you need to choose a pre-trained model, such as an OpenAI embedding model, CLIP, or Sentence Transformers, depending on your data type (e.g., text, images, or audio). Then, pass your data through the embedding model to generate vectors.

Step 3: Build an FAISS index and add vectors

Now that you have vector embeddings, build a FAISS index and load the vectors into it. Note the number of vectors (N) and the dimension of each vector (d), and choose your preferred type of index. If you’re new to FAISS, we advise you to start with a Flat index and perform an exact nearest-neighbor search on a small dataset. For example:

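import numpy as np

import faiss

# `embeddings` is the list of vectors produced in Step 2

vectors = np.array(embeddings, dtype=np.float32)

dim = vectors.shape[1]

# Inner-product index; scores equal cosine similarity only if the vectors are L2-normalized

faiss_index = faiss.IndexFlatIP(dim)

faiss_index.add(vectors)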

Step 4: Query & perform search

When a user query arrives, the system embeds it with the same model used for the raw data. To search for the vectors most relevant to the query, run `faiss_index.search(query_vector, k)`. This call returns the similarity scores and the indices of the matched vectors. These indices are then mapped back to the original documents to identify which content is most relevant.

For example:

# `model` is the same embedding model used in Step 2

query = model.encode(["sample query text"])[0]

k = 5

distances, indices = faiss_index.search(np.array([query], dtype=np.float32), k)
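The last part of this step, mapping the returned indices back to the original documents, might look like the sketch below. The `collect_hits` helper, the sample documents, and the score values are hypothetical. The only FAISS behavior relied on is that a search returns one row of results per query and uses `-1` as the index when fewer than `k` results are available.

```python
def collect_hits(documents, distances, indices):
    """Pair each returned index with its document and score, skipping empty slots."""
    hits = []
    for score, idx in zip(distances[0], indices[0]):   # row 0 holds the first (only) query
        if idx == -1:                                   # empty slot: fewer than k results
            continue
        hits.append((documents[int(idx)], float(score)))
    return hits

# Hypothetical data shaped like the output of a search with one query and k = 3.
documents = ["refund policy", "shipping times", "warranty terms"]
distances = [[0.91, 0.47, 0.0]]
indices = [[2, 0, -1]]

hits = collect_hits(documents, distances, indices)
for text, score in hits:
    print(f"{score:.2f}  {text}")
```

This works because FAISS numbers vectors in the order they were added, so position `i` in the index corresponds to position `i` in your document list as long as the two are kept in sync.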

What are The Common Use Cases for FAISS?

With all these capabilities, FAISS is widely applied in use cases where rapid searches and low latency are paramount, like natural language processing, information retrieval, image search, and recommendation systems.

Information Retrieval

You can integrate FAISS into RAG (retrieval-augmented generation) pipelines to look for the essential information for knowledge-intensive tasks, like answering specialized questions. The library itself helps index text embeddings that were already converted by embedding models like BERT. When receiving a query vector, FAISS will quickly compare it with the embeddings of the stored chunks and identify which vectors have the most similar meaning in a vector space. For this reason, FAISS helps LLM-powered applications search for internal policies for employees, answer customer support questions, and more.

Image Search

FAISS supports image searches, especially in e-commerce. You can leverage the library to implement multimodal search beyond text, as long as you offer it the right embeddings. Accordingly, the library can look for product images that are embedded as vectors by embedding models like CLIP. This allows for instant visual searches.

Recommendation Systems

When a user’s preferences or browsing behavior are converted into embeddings, FAISS will store these vectors and implement similarity search within large datasets to find content (e.g., movies, music, or products) most similar and relevant to the user’s interests. This capability makes FAISS useful in building recommendation systems.

Deduplication

FAISS is useful for removing duplicates in large datasets. The library compares vectors and finds near-duplicates, items whose embeddings are extremely close because they have nearly the same meaning or content. This helps data analysts find and eliminate duplicates faster.
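The underlying check can be sketched in plain Python: normalize the vectors, compare them by cosine similarity, and flag pairs above a threshold. The pairwise loop here is a brute-force stand-in for the nearest-neighbor search FAISS would perform at scale. The `0.98` threshold and the sample vectors are arbitrary assumptions.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def near_duplicates(vectors, threshold=0.98):
    """Return index pairs whose cosine similarity is at least the threshold."""
    unit = [normalize(v) for v in vectors]
    pairs = []
    for i in range(len(unit)):
        for j in range(i + 1, len(unit)):
            cosine = sum(a * b for a, b in zip(unit[i], unit[j]))
            if cosine >= threshold:
                pairs.append((i, j))
    return pairs

vectors = [
    [1.0, 0.0, 0.0],
    [0.99, 0.01, 0.0],   # almost the same direction as vector 0
    [0.0, 1.0, 0.0],     # unrelated
    [2.0, 0.0, 0.0],     # same direction as vector 0, different length
]
pairs = near_duplicates(vectors)
print(pairs)
```

The right threshold depends on the embedding model and on how strict you want deduplication to be, so it is worth reviewing a sample of flagged pairs before deleting anything.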

Developing AI-Powered Applications with Designveloper

Designveloper is one of the leading software development and IT consulting companies in Vietnam. We’re an excellent team of 100+ skilled developers, designers, AI specialists, and other professionals. During more than 10 years of operations, we’ve implemented 200+ successful projects in different industries, from finance and healthcare to education and construction.

We master 50+ technologies, including LangChain, CrewAI, and AutoGen, to build both simple chatbots and complex agentic systems. Further, we don’t work toward a specific tech stack, but focus on a combination of the right technologies, from vector databases and embedding models to LLMs, to create complete, smart systems that streamline our clientele’s workflows and enhance user experiences. One of our notable projects is a product catalog-based advisory chatbot that uses Function Calling to automatically send confirmation emails to users, offer product information, and recommend the right products for user demands.

Contact us now and discuss your idea further, whether you want to build systems for information retrieval, product recommendations, or multimodal search.

FAQs:

Why is FAISS so fast?

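FAISS is fast mainly because it’s designed for ANN (Approximate Nearest Neighbors) search: instead of comparing the query vector with every stored embedding, it narrows the search to a small, promising subset. Optimized algorithms and optional GPU acceleration speed things up further.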

What algorithm does FAISS use?

FAISS provides various algorithms for nearest-neighbor search. In particular, it uses brute-force flat search (IndexFlat) with distance metrics like Euclidean or inner product. Further, it offers algorithms like IVF (Inverted File Index), Product Quantization, or HNSW (Hierarchical Navigable Small World) to perform approximate search.

Does FAISS run locally?

Yes, FAISS is a Python/C++ library that operates locally on your device or server. It does not require cloud platforms and can support operations on both GPUs and CPUs. What you need to do is to download, install, and run it within your local environment or on-premise infrastructure.

Is FAISS in memory?

Yes, FAISS is designed to be an in-memory library that stores the indexes in RAM/VRAM. In other words, when you build or load an FAISS index, all embeddings and their data structures stay in your machine’s GPU or CPU memory. Searches take place directly in RAM/VRAM as well for high performance. Note that FAISS itself doesn’t function as a database. So, if you want to store the index for later use, you must explicitly save it to disk (serialize) and then load it back (deserialize).

Does FAISS support scalability?

Yes. But we want to come back to a few realities of FAISS to explain its scalability more clearly.

For cross-machine scalability

FAISS can process millions or even billions of vectors efficiently, but only on a single machine. This means that if your large data exceeds what a single server can handle, FAISS lacks the ability to automatically distribute data or search tasks to other servers for continuous processing. This is because FAISS is a library, not a full vector database.

The library supports diverse index types, vector-quantization methods, multi-threading, and GPU acceleration. Therefore, the library is an excellent option if:

- Your single machine has enough RAM/GPU memory and fast storage

- You want to search for the nearest neighbors fast and control the deployment environment

For this reason, FAISS alone is not sufficient if you want to build a production system that scales beyond a single machine. This system requires extra layers for effective deployments at scale, like:

- Your own code or external storage (for data persistent storage and recovery)

- Vector databases (for horizontal scaling and load balancing)

- Your own distributed architecture or API server for client access.

Accordingly, FAISS can be a component of the multi-machine, scalable system. To build a production-grade system with numerous nodes, you can use FAISS as the core search tool in each node. Then, link it with a vector database (e.g., Milvus, Pinecone, or Weaviate) or wrap it with your own distributed architecture/API server.
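One piece of such a distributed layer is merging the results that each node returns. Here is a minimal sketch in plain Python, assuming every node runs its own FAISS index and reports its local top-k as `(distance, global_id)` pairs. The function name and the sample results are made up for illustration.

```python
import heapq

def merge_shard_results(shard_results, k):
    """Combine per-shard (distance, global_id) lists into one global top-k, smallest distance first."""
    return heapq.nsmallest(k, (hit for hits in shard_results for hit in hits))

# Hypothetical local top-3 results from two nodes for the same query.
shard_a = [(0.12, 101), (0.40, 117), (0.55, 130)]
shard_b = [(0.08, 2044), (0.47, 2001), (0.90, 2310)]

top = merge_shard_results([shard_a, shard_b], k=3)
print(top)
```

This assumes a distance metric where smaller is better, such as L2. For inner-product scores you would keep the largest values instead, for example with `heapq.nlargest`.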

For scalability within a single machine

FAISS enables scalability as it offers different indexing techniques to sift through the most relevant information from small, medium-sized, and even huge datasets.

Suppose you start with Flat indexes for small datasets. When data volumes grow, you need to switch to more advanced indexing structures like IVF, PQ, or HNSW to handle massive datasets with high speed and less memory usage. But this means training a new index and re-indexing your data, which costs time and compute.

Therefore, your company should consider scalability before building a FAISS index and adopt hybrid strategies like using a flat index for “hot” (frequently searched) data and IVF/PQ for the rest.