When working with large language models (LLMs), many people simply type a request and wait for the model’s response. But sometimes, the response doesn’t meet their expectations. Why? The answer often lies in a lack of effective prompt engineering.

Don’t let the technical-sounding term “engineering” scare you off. You don’t need to be a software engineer, a data scientist, or anyone with a technical background to write effective prompts.

By understanding how LLMs work and learning a few prompting techniques, you can guide AI to return the responses you want.

So, how can you prompt AI effectively? That’s what today’s blog post is about. Here, we share the best tips and key techniques to help you become a prompting expert.

But what is prompt engineering, exactly? Before diving into the techniques, let’s cover the basics.

What Is Prompt Engineering?

When you ask Google Gemini, “What is the capital of France?”, that’s a prompt. Put simply, a prompt is an instruction that tells a generative AI (GenAI) model what to do so it can produce the desired response.

A prompt can be a simple question or a complex request (e.g., “Please summarize the key points in the attached financial report.”).

Prompt engineering, in turn, is the practice of creating and refining high-quality prompts that guide GenAI models to generate accurate and relevant outputs.

For effective prompt engineering, you first need to understand how GenAI models work. At their core, they are prediction engines: they generate output by repeatedly predicting the most likely next token (a word or part of a word) based on the input and the patterns they learned during training.

Therefore, prompt engineering can be understood as the iterative process of steering GenAI models toward predicting the right sequence of tokens. This involves optimizing prompt length, matching a model’s preferred format, adjusting the prompt’s tone, and so on, until you find the prompt that works best.
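To make the “prediction engine” idea concrete, here is a deliberately tiny sketch. It assumes a made-up three-sentence corpus and predicts the next word from simple word-pair (bigram) counts. Real LLMs use large neural networks over subword tokens, so treat this only as an illustration of next-token prediction, not of how any actual model is built.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: real models learn from vastly more text.
CORPUS = (
    "the capital of france is paris . "
    "the capital of italy is rome . "
    "the capital of france is paris ."
).split()


def build_bigram_counts(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Greedy prediction: return the most frequent follower of `token`, or None."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, prompt, max_new_tokens=5):
    """Extend the prompt one predicted token at a time."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, tokens[-1])
        if nxt is None:
            break
        tokens.append(nxt)
    return " ".join(tokens)


counts = build_bigram_counts(CORPUS)
print(generate(counts, "capital of france", max_new_tokens=2))  # capital of france is paris
```

Because this toy model only looks at the last word, it would just as happily continue “capital of italy” with “is paris”: a small reminder that prediction follows patterns in the data rather than understanding.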

Why Is Prompt Engineering Important in the Age of Generative AI?

In recent years, we’ve seen the non-stop rise of generative AI models, most notably ChatGPT and Google Gemini (formerly Bard). These models bring various benefits, but they have also drawn plenty of criticism for inaccurate, insecure, or irrelevant responses.

However, we cannot blame the models for all these troubles.

If you don’t understand how LLMs work and don’t write effective prompts, you’re unlikely to get the outputs you want. That’s why prompt engineering matters more than ever.

By writing a prompt carefully, you can:

- Improve AI performance. A well-crafted prompt includes your intent, context, and preferred tone. This helps AI generate accurate, relevant responses aligned with a given task, whether for translating, summarizing, or writing new content.

- Save time and resources. When you prompt (instruct) AI models carefully, their responses are more precise and meaningful. This reduces the time, costs, and human resources needed to revise and refine the outputs.

- Keep outputs high-quality and consistent. Want outputs that match your brand voice? A well-structured prompt helps you meet professional standards. Just state your preferred tone (e.g., humorous or professional) in the prompt.

- Control outputs better. Beyond brand voice, prompt engineering helps you control other aspects of the output, including length, format (e.g., bullet points or paragraphs), relevant facts, and citations. You can also instruct the AI not to include confidential or offensive information, making outputs safer and more useful.

You need detailed instructions to work with any tool effectively, and GenAI models are no exception. With prompt engineering, you can collaborate better with these smart tools, make full use of their capabilities, and perform tasks more effectively.

The Core Prompt Principles

GenAI models are increasingly adopted across fields. Their adopters, whether individuals or organizations, want responses that are as meaningful and accurate as possible. This drives demand for prompt engineering, a market estimated to reach $671.38 million by 2026.

There’s no universal formula for creating effective prompts. Yet, a well-crafted prompt usually follows these principles:

Your prompts need to be clear and specific enough for AI to understand exactly what you want it to do.

Don’t say something like, “Tell me about climate change.” This prompt is too broad.

Instead, specify the desired length, format, key points, writing style, and even the target audience. For example: “Write a 500-word essay explaining the main causes of climate change. Use an engaging tone.”
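If you write many prompts, it can help to treat these elements as a checklist. The sketch below uses a hypothetical helper, `build_prompt` (our own name, not part of any library), that turns a bare request into a specific one by appending whichever details you supply.

```python
def build_prompt(task, length=None, fmt=None, key_points=None, tone=None, audience=None):
    """Append optional details (length, format, key points, tone, audience) to a task.

    Hypothetical helper for illustration; any detail left as None is skipped.
    """
    parts = [task.strip().rstrip(".") + "."]
    if length:
        parts.append(f"Length: about {length} words.")
    if fmt:
        parts.append(f"Format: {fmt}.")
    if key_points:
        parts.append("Cover these points: " + "; ".join(key_points) + ".")
    if tone:
        parts.append(f"Tone: {tone}.")
    if audience:
        parts.append(f"Audience: {audience}.")
    return " ".join(parts)


prompt = build_prompt(
    "Write an essay explaining the main causes of climate change",
    length=500,
    key_points=["greenhouse gases", "deforestation"],
    tone="engaging",
    audience="high school students",
)
print(prompt)
```

Leaving every option out returns the bare request unchanged, so the helper makes it obvious when a prompt is still as vague as “Tell me about climate change.”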

Context matters, too. The same request can produce different answers depending on the background information you give the AI.

Do you want to educate high school students about the impact of climate change or present this problem in front of NGO leaders? Do you want to build an online store for a tech startup or an organic food chain?

Giving context keeps AI-generated responses relevant and prevents off-topic answers.

Tell GenAI models what role to play. This helps them adopt the perspective, tone, and expertise appropriate to a given task.

For example: “You’re a maths teacher. Explain what a vertex is in simple terms for high school students.”

Prompt engineering is not a one-off process; it’s iterative.

You won’t always write the perfect prompt on the first try. So create, test, and refine your prompts until you get the desired outputs.

This refinement process may involve adding examples, attaching relevant documents, providing facts, and rephrasing parts of the prompt.

Common Types of Prompt Techniques

To prompt GenAI models effectively, experts suggest various prompting techniques, each suited to different scenarios. Let’s look at what they are, some example prompts, and when to use each one:

Zero-shot & Few-shot prompting

Zero-shot is the simplest prompting technique.

With it, you ask the AI to perform a task in a single, simple prompt, without providing any examples of the desired output.

Zero-shot works best when the task is clear enough without any further explanation. Some typical use cases include explaining definitions, generating creative ideas, summarizing reports, and translating documents.

Example: “What is a hurricane?”

Example: “Translate the following text from Finnish to English: Kävele sinne päin ja käänny ekasta risteyksestä oikeelle Puistotielle. Jatka sit vielä noin 100 metriä eteenpäin, ja meiän talo on se iso harmaa talo vasemmalla puolella.”

By contrast, few-shot prompting means providing a few input-output pairs as examples so that the AI can see how it should respond. This technique is ideal when your task requires a consistent or specific format and style.

Example:

Input: “Hurricane” – Output: “Large, rotating storms, accompanied by heavy rains and thunderstorms.”

Input: “Flood” – Output: “Overflow of water onto dry land, mostly caused by heavy rains or coastal storms.”

Prompt: “Earthquake” – Response: “Sudden shaking of Earth’s surface, mainly caused by the movement of Earth’s outermost layers.”

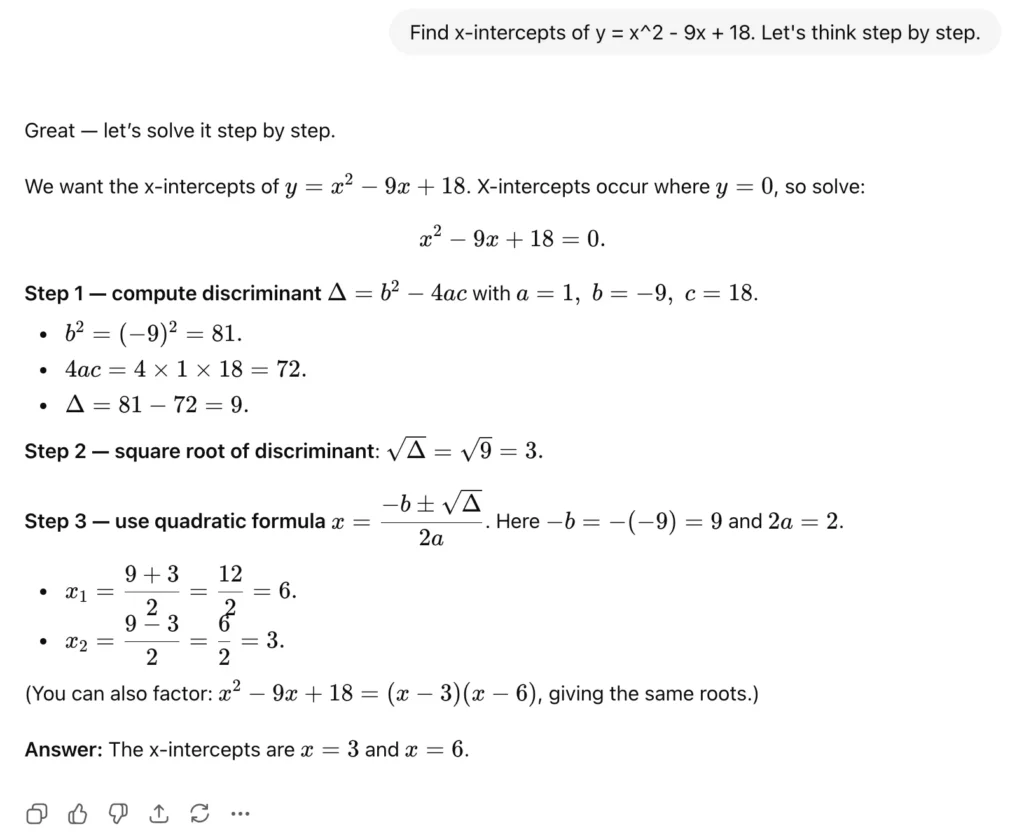
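The difference between the two techniques shows up clearly when the prompt is assembled in code. This is a minimal sketch, assuming a plain-text model that simply continues the prompt; `zero_shot` and `few_shot` are illustrative helper names, and the example pairs are the ones above.

```python
def zero_shot(task, query):
    """Zero-shot: the instruction and the query only, with no examples."""
    return f"{task}\n\nInput: {query}\nOutput:"


def few_shot(task, examples, query):
    """Few-shot: prepend input-output pairs so the model can follow their pattern."""
    lines = [task, ""]
    for inp, out in examples:
        lines.append(f"Input: {inp}")
        lines.append(f"Output: {out}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


TASK = "Define each natural disaster in one sentence."
EXAMPLES = [
    ("Hurricane", "Large, rotating storms, accompanied by heavy rains and thunderstorms."),
    ("Flood", "Overflow of water onto dry land, mostly caused by heavy rains or coastal storms."),
]

print(zero_shot(TASK, "Earthquake"))
print()
print(few_shot(TASK, EXAMPLES, "Earthquake"))
```

Ending the prompt with `Output:` leaves the model to predict what comes next, and the preceding pairs make a one-sentence answer in the same style the most likely continuation.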

Chain-of-Thought prompting

As the name suggests, chain-of-thought (CoT) prompting asks the AI to reason through a problem step by step before giving its solution, instead of answering directly.

With CoT, you can improve the reasoning of generative AI models and often get more accurate answers. In addition, seeing each step makes it easier to spot where the reasoning went wrong and what needs improving.

However, reasoning can take longer and consume more tokens, increasing overhead costs.

Therefore, don’t use CoT prompting for simple problems like “What is 9×10?”. Reserve this technique for tasks that require logical breakdowns or multi-step solutions, such as complex maths problems.

So, how can you use CoT prompting? The simplest way is to add the cue “Let’s think step by step” to your prompt, which typically leads the AI to work through the problem before answering.

Example:

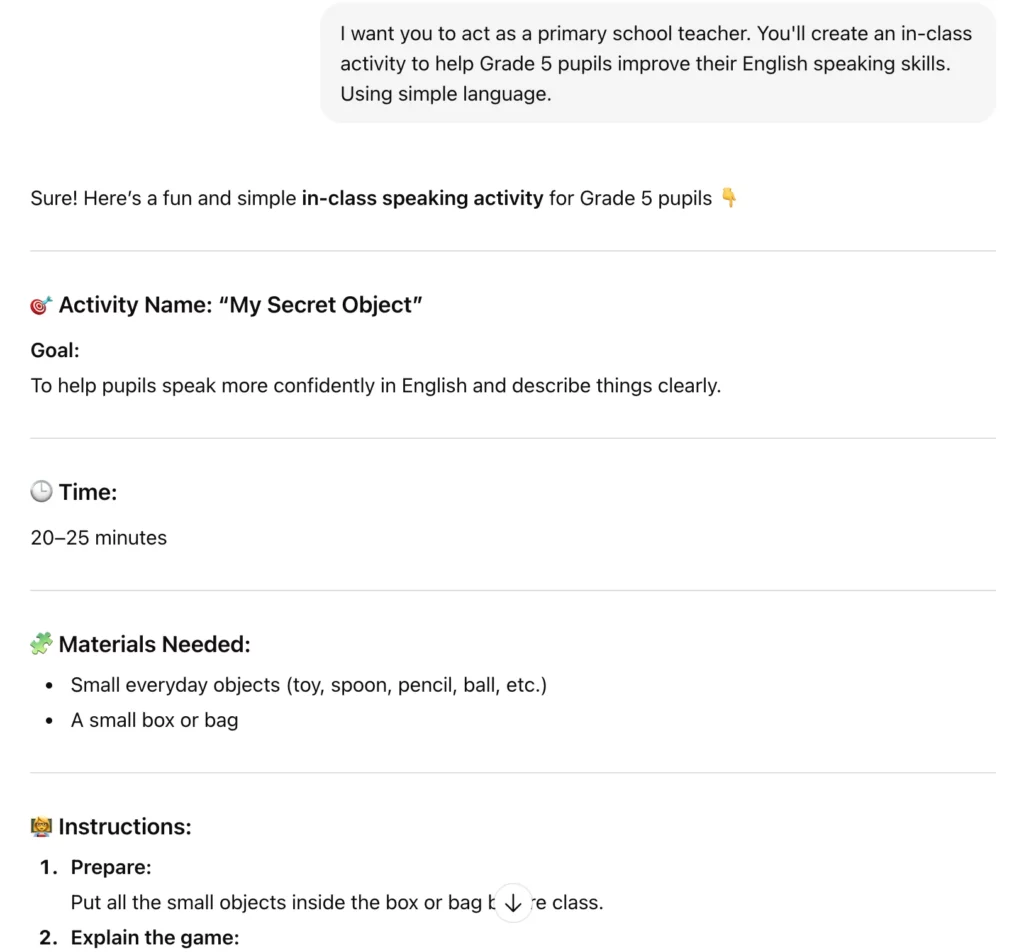
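In code, the cue is just extra text appended to the prompt. The sketch below assumes a simple Q/A prompt format and a hypothetical `cot_prompt` helper; it adds the cue only when the task genuinely needs multi-step reasoning, in line with the cost trade-off described above.

```python
COT_CUE = "Let's think step by step."


def cot_prompt(question, use_cot):
    """Build a Q/A prompt; append the step-by-step cue only for multi-step problems."""
    base = f"Q: {question.strip()}\nA:"
    return f"{base} {COT_CUE}" if use_cot else base


# A simple lookup: answer directly and save tokens.
print(cot_prompt("What is 9 x 10?", use_cot=False))

# A multi-step word problem: ask the model to show its reasoning.
print(cot_prompt(
    "A shop sells pens in packs of 12. If a school needs 150 pens, "
    "how many packs must it buy?",
    use_cot=True,
))
```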

Role-based prompting

In this prompting technique, you assign a GenAI model a specific role to play while performing a task.

This helps the model generate outputs that match the role’s expertise, style, and behavior. As a result, answers tend to be more relevant and informative rather than generic.

For example, you can tell the AI to act as a primary school teacher, creating an in-class activity to improve the English speaking skills of Grade 5 pupils. Here’s what we got after asking AI to play this role:

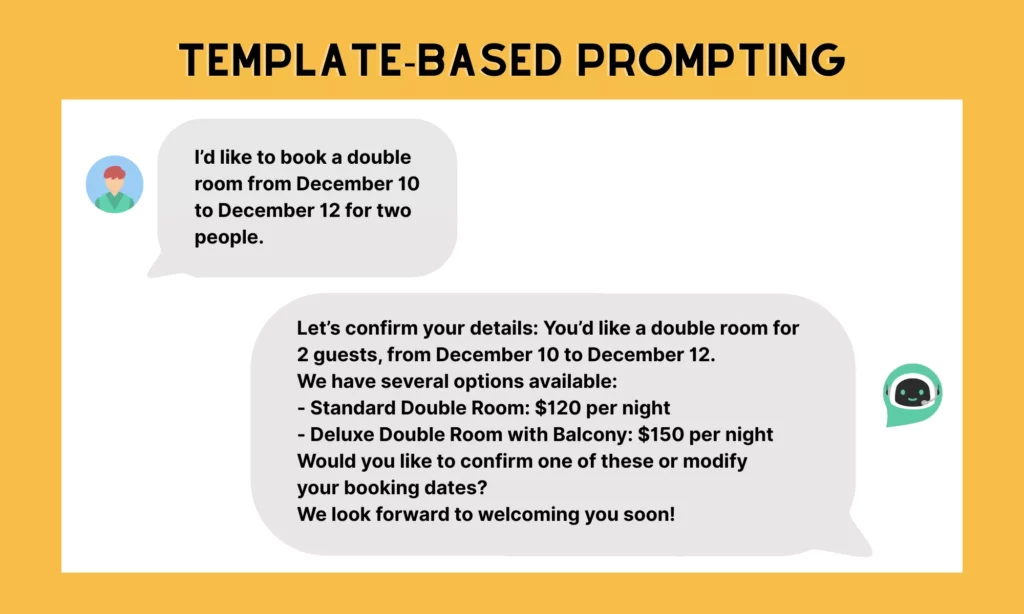
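Many chat-style APIs accept a list of messages, each tagged with a role; OpenAI’s Chat Completions API, for example, uses `system` and `user` roles. The sketch below assumes that message shape (other providers name the fields differently) and places the role in the system message. No API is called here, and `role_messages` and `role_prompt` are hypothetical helper names.

```python
def role_messages(role, task):
    """Chat-style prompt: the role goes in a system message, the task in a user message."""
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": task},
    ]


def role_prompt(role, task):
    """Single-string fallback for tools that only offer one text box."""
    return f"You are {role}. {task}"


messages = role_messages(
    "a primary school teacher",
    "Create an in-class activity to improve the English speaking skills of Grade 5 pupils.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role in a separate system message means you can reuse it across many user requests without repeating it in every prompt.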

Template-based prompting

In template-based prompting, you give the AI a structured framework to fill in. This keeps the model focused on the essential information and helps it avoid irrelevant content.

Using templates to prompt the AI ensures a consistent style and tone in generated outputs. This prompting technique works best when you want to create repetitive or standardized content, like product descriptions, customer responses, FAQs, or reports.

Below is an example template for confirming a guest’s booking in line with the brand voice:

“Let’s confirm your details: You’d like to book a [Room_Type] for [Number_of_Guests] guests, from [Checkin_Date] to [Checkout_Date]. We have several options available: [Options]. Would you like to confirm one of these or modify your booking dates? We look forward to welcoming you soon!”

Let’s see how we used this template to write a confirmation reply with AI’s assistance.
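In code, template-based prompting can be as simple as pasting the template into the prompt together with the details the AI should use. This sketch assumes a hypothetical `template_prompt` helper and made-up booking details; it also checks that every `[Placeholder]` in the template has a value before the prompt is built, so the AI is never asked to invent missing information.

```python
import re

# Adapted from the booking template above; [Name] marks a placeholder.
TEMPLATE = (
    "Let's confirm your details: You'd like to book a [Room_Type] for "
    "[Number_of_Guests] guests, from [Checkin_Date] to [Checkout_Date]. "
    "We have several options available: [Options]. Would you like to confirm "
    "one of these or modify your booking dates? We look forward to welcoming you soon!"
)

PLACEHOLDER = re.compile(r"\[([A-Za-z_]+)\]")


def template_prompt(template, details):
    """Build a prompt asking the model to fill the template with the given details."""
    needed = PLACEHOLDER.findall(template)
    missing = [name for name in needed if name not in details]
    if missing:
        raise KeyError(f"No value for placeholder(s): {missing}")
    lines = [
        "Write a booking confirmation that follows this template exactly,",
        "replacing each [Placeholder] with the matching detail below.",
        "",
        "Template:",
        template,
        "",
        "Details:",
    ]
    lines += [f"- {name}: {details[name]}" for name in needed]
    return "\n".join(lines)


# Hypothetical booking details for illustration.
details = {
    "Room_Type": "deluxe double room",
    "Number_of_Guests": 2,
    "Checkin_Date": "12 May",
    "Checkout_Date": "15 May",
    "Options": "city view or garden view",
}
print(template_prompt(TEMPLATE, details))
```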

Prompt Engineering Use Cases and Examples

Whether you use AI to generate creative ideas, create images, or translate a document, prompt engineering is always important. Below are several cases, plus examples, where you can craft and fine-tune prompts to obtain meaningful, relevant, and accurate outcomes from AI:

You can craft prompts for different types of questions, depending on your intent and style. These include:

- Yes/No questions: “Is ChatGPT an AI model?”

- Open-ended questions: “What do you think is the main cause of the above-normal Atlantic hurricane season in 2025?”

- Factual questions: “What is the highest mountain in the world?”

- Multiple choice questions: “In which part of the ear were hair cells regenerated? a) organ of Corti, b) auditory nerve, c) Eustachian tube, d) semicircular canal”

- Hypothetical questions: “What would happen if we could interact with aliens?”

Text generation is one of the most common use cases of generative AI models. Depending on your goals, you can specify the genre, key points, preferred style or tone, plus context and examples. This helps AI perform the task the way you expect:

- Creative writing: “Write a short story about a boy rescuing an injured cat. The story aims to educate preschoolers about values like kindness or friendship.”

- Summarization: “Summarize the key points of the following news article on a healthy lifestyle.”

- Translation: “Translate the following text from French to English: ‘La situation devenait difficile, non pour le condamné, mais pour les juges.’”

Beyond these common applications, you can craft prompts that guide AI-powered chatbots to respond to customers conversationally, keep the conversation flowing, and improve the customer experience.

Example: “You are a friendly in-app bot that helps customers solve shipping problems. Respond to a customer’s question: ‘My order is late.’”

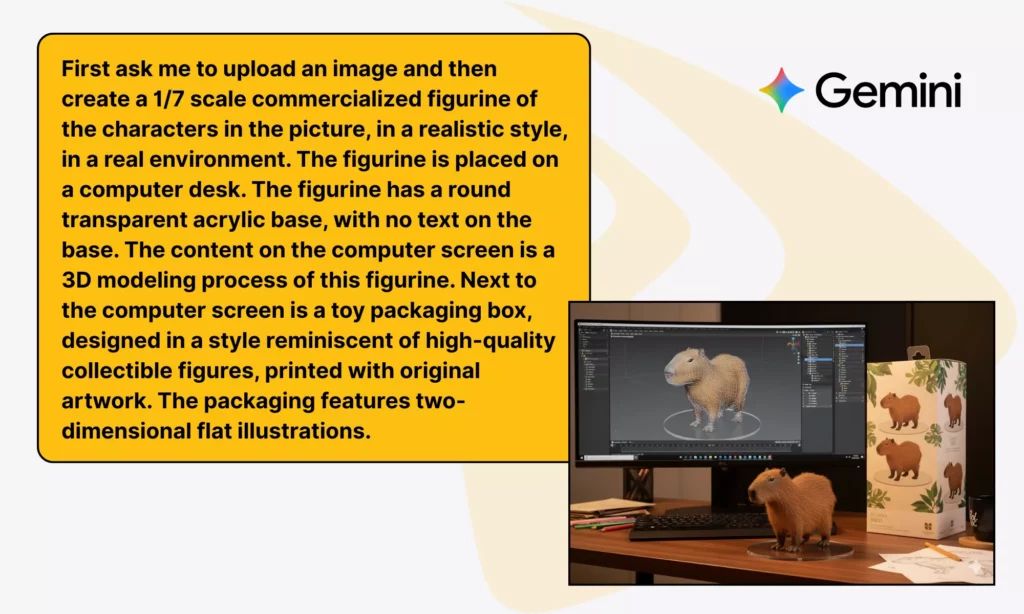

Design prompts that describe your desired image’s main subject, surrounding objects, scenery, lighting, style, and more. This helps you create photorealistic, artistic, or abstract images exactly the way you want. You can also ask AI to edit images, for example by changing backgrounds or removing unwanted objects.

Some GenAI tools, like Google Gemini, offer ready-made prompt suggestions that you can refine and adjust to fit your requirements.

Example:
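One way to keep such prompts consistent is to assemble them from the elements listed above. This is a minimal sketch assuming a text-to-image model that accepts a single free-text description; `image_prompt` is a hypothetical helper, and the comma-separated style is a common convention rather than a requirement of any particular model.

```python
def image_prompt(subject, surroundings=None, scenery=None, lighting=None, style=None):
    """Join the visual elements of an image prompt into one comma-separated description.

    Hypothetical helper; elements left as None are skipped.
    """
    optional = (surroundings, scenery, lighting, style)
    parts = [subject] + [part for part in optional if part]
    return ", ".join(parts)


print(image_prompt(
    "a red fox sitting on a mossy rock",
    surroundings="ferns and fallen leaves",
    scenery="misty pine forest",
    lighting="soft early-morning light",
    style="photorealistic",
))
```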

Provide AI with detailed prompts to suggest, translate, optimize, and debug code. Here are some examples:

| Action | Example |
|---|---|
| Code completion | You are an experienced Python developer. Complete the following function that calculates the factorial of a number using recursion. `def factorial(n): return 1` |
| Code translation | Translate this Python function into JavaScript. Python code: `def greet(name):` |
| Code optimization | Optimize the following Python code for better performance and readability, and explain what changes you made. Code: `numbers = [i for i in range(1000000)]` |
| Code debugging | Find and fix the bug in this Python code. Then explain what caused the error. Code: `def divide(a, b):` |
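It also helps to know what a good answer looks like before you judge the AI’s response. For the two rows whose code is complete (completion and optimization), a reasonable result might resemble the sketch below. It is one possible answer, not the only correct one, and the negative-input check is our own addition.

```python
def factorial(n):
    """Return n! using recursion."""
    if n < 0:
        # Our addition: factorial is not defined for negative integers.
        raise ValueError("factorial is undefined for negative numbers")
    if n in (0, 1):
        return 1  # base case, matching the original stub's `return 1`
    return n * factorial(n - 1)


# Optimization row: list(range(...)) builds the same list without a Python-level
# loop, so it is typically faster and easier to read than the comprehension.
# If you only need to iterate, keep the range object and skip building a list.
numbers = list(range(1000000))

print(factorial(5))
```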

The Future of Prompt Engineering

Generative AI models provide humans across industries with various benefits, especially in data-intensive tasks.

However, LLMs don’t work like human brains. They learn patterns from their training data and predict what is most likely to come next in a text sequence.

This leads to various limitations, such as fabricating answers (often called hallucination) and including sensitive information in responses.

Unlike humans, AI cannot be held accountable for its wrongdoings. That’s why careful prompt engineering is needed to reduce these problems.

As GenAI models thrive, prompt engineering grows with them.

Beyond prompt engineering guides, many tools have been built to support prompt crafting. Typical examples include Prompt Builder (Salesforce, Inc.), Amazon Bedrock (AWS), Lilypad, and Mirascope.

In the future, we predict prompt engineering will become a necessary skill for any AI user.

It doesn’t demand a technical background, but it does require you to identify what you want to achieve with AI models.

Knowing your goal helps you clarify your writing style, the essential information to include (e.g., facts), and more, which enables AI to generate more relevant and precise outputs for a given task.

Further, companies, each with its own working style, can develop their own prompting guidelines on top of general prompt engineering principles. This helps them take full advantage of AI models and perform tasks at their best.

Conclusion

We hope this blog post has helped you better understand the importance of prompt engineering in the generative AI era.

As long as you ‘collaborate’ with GenAI models to streamline your work, designing effective prompts is essential.

However, prompts may not be perfect the first time. That’s why refining and adjusting them iteratively helps generate better-quality outputs. Good prompts can also help you reduce AI’s inherent problems, like hallucination.

Do you have any prompt engineering experience to share? Tell us your stories on Facebook, X, and LinkedIn! And subscribe to our blog to get more tips about prompt engineering!