Unlike traditional AI models that respond to single prompts (like ChatGPT’s basic Q&A mode), AI agents can plan, reason, and execute multi-step tasks by interacting with tools, data sources, APIs, or even other agents.

Sounds abstract? That’s because it is. While most might agree with this definition or expectation for what agentic AI can do, it is so theoretical that many AI agents available today wouldn’t make the grade.

As my colleague Sean Falconer noted recently, AI agents are in a “pre-standardization phase.” While we might broadly agree on what they should or could do, today’s AI agents lack the interoperability they’ll need to not just do something, but actually do work that matters.

Think about how many data systems you or your applications need to access on a daily basis, such as Salesforce or other CRMs, or internal wiki pages. If those systems aren’t currently integrated or they lack compatible data models, you’ve just added more work to your schedule (or lost time waiting). Without standardized communication for AI agents, we’re just building a new type of data silo.

No matter how the industry changes, having the expertise to turn the potential of AI research into production systems and business results will set you apart. I’ll break down three open protocols that are emerging in the agent ecosystem and explain how they could help you build useful AI agents—i.e., agents that are viable, sustainable solutions for complex, real-world problems.

The current state of AI agent development

Before we get into AI protocols, let’s review a practical example. Imagine we’re interested in learning more about business revenue. We could ask the agent a simple question by using this prompt:

Give me a prediction for Q3 revenue for our cloud product.

From a software engineering perspective, the agentic program uses its AI models to interpret this input and autonomously build a plan of execution toward the desired goal. How it accomplishes that goal depends entirely on the list of tools it has access to.

When our agent awakens, it first searches for the tools under its /tools directory. This directory holds guiding files that describe what falls within the agent’s capabilities. For example:

```
/tools/list
/Planner
/GenSQL
/ExecSQL
/Judge
```

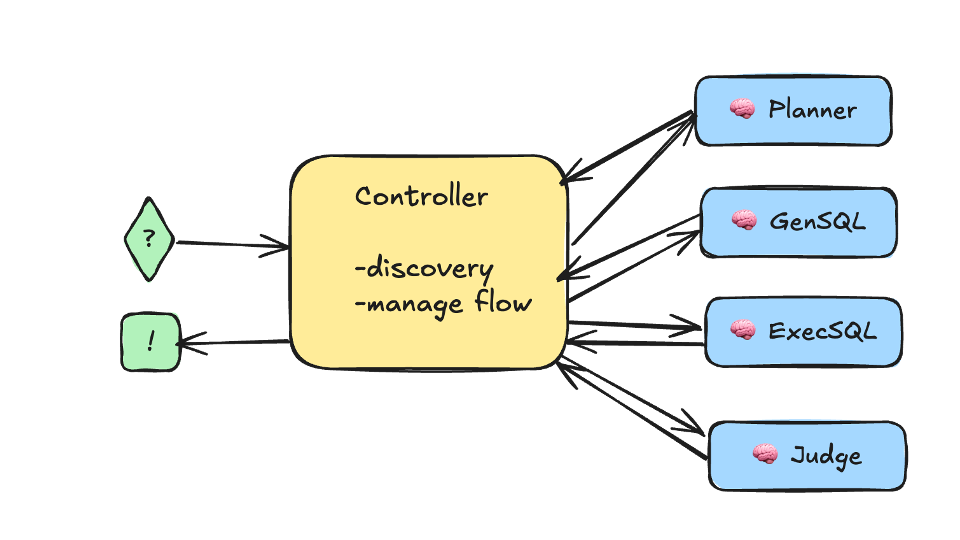

You can also look at it based on this diagram:

Confluent

The main agent receiving the prompt acts as a controller. The controller has discovery and management capabilities and is responsible for communicating directly with its tools and other agents. This works in five fundamental steps:

- The controller calls on the planning agent.

- The planning agent returns an execution plan.

- The judge reviews the execution plan.

- The controller leverages GenSQL and ExecSQL to execute the plan.

- The judge reviews the final plan and provides feedback to determine if the plan needs to be revised and rerun.
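The five steps above could be sketched as a controller loop like the one below. This is a hypothetical illustration rather than code from the article: the `Planner`, `Judge`, and `SQLTool` interfaces, the `maxRounds` retry limit, and the stub implementations are assumptions, and a real agent would back each interface with model or database calls.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Plan is an ordered list of steps produced by the planning agent.
type Plan struct{ Steps []string }

// Planner turns the prompt (plus feedback from a rejected round) into a plan.
type Planner interface {
	Plan(prompt, feedback string) (Plan, error)
}

// Judge approves or rejects a plan or a result and explains why.
type Judge interface {
	Review(subject string) (ok bool, feedback string)
}

// SQLTool stands in for the GenSQL and ExecSQL tools.
type SQLTool interface {
	Gen(step string) (string, error)
	Exec(query string) (string, error)
}

// runController walks through the five steps, retrying up to maxRounds times.
func runController(prompt string, p Planner, j Judge, sql SQLTool, maxRounds int) (string, error) {
	feedback := ""
	for round := 0; round < maxRounds; round++ {
		plan, err := p.Plan(prompt, feedback) // steps 1-2: request and receive a plan
		if err != nil {
			return "", err
		}
		ok, fb := j.Review(strings.Join(plan.Steps, "; ")) // step 3: judge the plan
		if !ok {
			feedback = fb
			continue
		}
		var results []string
		for _, step := range plan.Steps { // step 4: generate and run SQL per step
			query, err := sql.Gen(step)
			if err != nil {
				return "", err
			}
			out, err := sql.Exec(query)
			if err != nil {
				return "", err
			}
			results = append(results, out)
		}
		result := strings.Join(results, "\n")
		ok, fb = j.Review(result) // step 5: judge the outcome
		if ok {
			return result, nil
		}
		feedback = fb // revise and rerun with the judge's feedback
	}
	return "", errors.New("no approved result within the round limit")
}

// Stub implementations so the sketch runs without any model behind it.
type stubPlanner struct{}

func (stubPlanner) Plan(prompt, feedback string) (Plan, error) {
	return Plan{Steps: []string{"load past cloud revenue", "project Q3"}}, nil
}

type stubJudge struct{ approve bool }

func (s stubJudge) Review(string) (bool, string) {
	if s.approve {
		return true, ""
	}
	return false, "needs more history"
}

type stubSQL struct{}

func (stubSQL) Gen(step string) (string, error)   { return "-- SQL for: " + step, nil }
func (stubSQL) Exec(query string) (string, error) { return "rows for " + query, nil }

func main() {
	result, err := runController("Give me a prediction for Q3 revenue for our cloud product.",
		stubPlanner{}, stubJudge{approve: true}, stubSQL{}, 3)
	fmt.Println(result, err)
}
```

Keeping each agent behind an interface is what would let the controller swap a local stub for a remote agent, and every such call becomes one of the messages described next.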

As you can imagine, there are multiple events and messages between the controller and the rest of the agents. This is what we will refer to as AI agent communication.

Budding protocols for AI agent communication

A battle is raging in the industry over the right way to standardize agent communication. How do we make it easier for AI agents to access tools or data, communicate with other agents, or process human interactions?

Today, we have Model Context Protocol (MCP), Agent2Agent (A2A) protocol, and Agent Communication Protocol (ACP). Let’s take a look at how these AI agent communication protocols work.

Model Context Protocol

Model Context Protocol (MCP), created by Anthropic, was designed to standardize how AI agents and models manage, share, and utilize context across tasks, tools, and multi-step reasoning. Its client-server architecture treats the AI applications as clients that request information from the server, which provides access to external resources.
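To make the client-server exchange concrete: MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request that carries the tool name and its arguments. The Go sketch below builds such a request with only the standard library. The `create_topic` tool and its argument names are hypothetical, and a real client would also perform the protocol's initialization handshake and send messages over a transport such as stdio.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// rpcRequest is a minimal JSON-RPC 2.0 request envelope.
type rpcRequest struct {
	JSONRPC string `json:"jsonrpc"`
	ID      int    `json:"id"`
	Method  string `json:"method"`
	Params  any    `json:"params,omitempty"`
}

// toolCallParams carries the tool name and its arguments for tools/call.
type toolCallParams struct {
	Name      string         `json:"name"`
	Arguments map[string]any `json:"arguments,omitempty"`
}

// newToolCall builds the request a client would send to invoke a server tool.
func newToolCall(id int, tool string, args map[string]any) ([]byte, error) {
	return json.Marshal(rpcRequest{
		JSONRPC: "2.0",
		ID:      id,
		Method:  "tools/call",
		Params:  toolCallParams{Name: tool, Arguments: args},
	})
}

func main() {
	msg, err := newToolCall(1, "create_topic", map[string]any{"topic": "countries", "partitions": 3})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(msg))
}
```

Before calling a tool, a client typically discovers what is available with a `tools/list` request; the server's reply lists each tool's name, description, and input schema.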

Let’s assume all the data is stored in Apache Kafka topics. We can build a dedicated Kafka MCP server, and Claude, Anthropic’s AI model, can act as our MCP client.

In this example on GitHub, authored by Athavan Kanapuli, Akan asks Claude to connect to his Kafka broker and list all the topics it contains. With MCP, Akan’s client application doesn’t need to know how to access the Kafka broker. Behind the scenes, his client sends the request to the server, which takes care of translating the request and running the relevant Kafka function.

In Akan’s case, there are no topics yet, so the client asks whether Akan would like to create one with a specified number of partitions and replication factor. Just like with Akan’s first request, the client doesn’t need to know how to create or configure Kafka topics and partitions. From there, Akan asks the agent to create a “countries” topic and then to describe it.

For this to work, you need to define what the server can do. In Athavan Kanapuli’s Akan project, that code lives in the handler.go file, which holds the functions the server can handle and execute. Here is the CreateTopic example:

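```go
// Imports assumed for this excerpt; mcp_golang is taken to be
// github.com/metoro-io/mcp-golang. KafkaHandler and Request are
// defined elsewhere in the project.
import (
	"context"
	"fmt"

	mcp_golang "github.com/metoro-io/mcp-golang"
)

// CreateTopic creates a new Kafka topic.
// Optional parameters that can be passed via FuncArgs are:
//   - NumPartitions: number of partitions for the topic
//   - ReplicationFactor: replication factor for the topic
func (k *KafkaHandler) CreateTopic(ctx context.Context, req Request) (*mcp_golang.ToolResponse, error) {
	// Stop early if the caller has already cancelled the request.
	if err := ctx.Err(); err != nil {
		return nil, err
	}
	// Delegate to the Kafka client wrapped by the handler.
	if err := k.Client.CreateTopic(req.Topic, req.NumPartitions, req.ReplicationFactor); err != nil {
		return nil, err
	}
	// Report success back to the MCP client as text content.
	return mcp_golang.NewToolResponse(mcp_golang.NewTextContent(fmt.Sprintf("Topic %s is created", req.Topic))), nil
}
```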

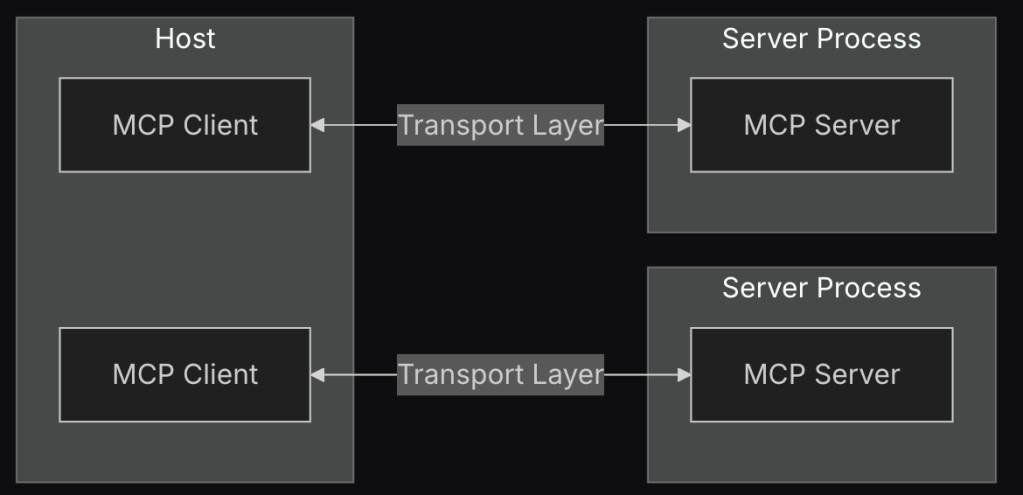

While this example uses Apache Kafka, a widely adopted open-source technology, Anthropic generalizes the method and defines hosts. Hosts are the large language model (LLM) applications that initiate connections. Every host can have multiple clients, as described in Anthropic’s MCP architecture diagram:

Anthropic
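In MCP’s architecture, each client holds a one-to-one connection with a single server, and the host coordinates several clients. The sketch below models that relationship in Go. The `Server` interface, the routing-by-tool-name logic in `Host.Route`, and the toy `staticServer` are assumptions made for illustration and are not part of any MCP SDK.

```go
package main

import (
	"errors"
	"fmt"
)

// Server is whatever a client connects to, such as a Kafka or database MCP server.
type Server interface {
	Tools() []string
	Call(tool string, args map[string]string) (string, error)
}

// Client holds a one-to-one connection to a single server.
type Client struct {
	name   string
	server Server
}

// Host is the LLM application; it owns several clients.
type Host struct {
	clients []*Client
}

// Route finds the first client whose server exposes tool and forwards the call.
func (h *Host) Route(tool string, args map[string]string) (string, error) {
	for _, c := range h.clients {
		for _, t := range c.server.Tools() {
			if t == tool {
				return c.server.Call(tool, args)
			}
		}
	}
	return "", errors.New("no connected server offers " + tool)
}

// staticServer is a toy server that echoes the calls it receives.
type staticServer struct {
	prefix string
	tools  []string
}

func (s staticServer) Tools() []string { return s.tools }

func (s staticServer) Call(tool string, args map[string]string) (string, error) {
	return fmt.Sprintf("%s.%s(%v)", s.prefix, tool, args), nil
}

func main() {
	h := &Host{clients: []*Client{
		{name: "kafka", server: staticServer{prefix: "kafka", tools: []string{"list_topics", "create_topic"}}},
		{name: "db", server: staticServer{prefix: "db", tools: []string{"run_query"}}},
	}}
	out, err := h.Route("run_query", map[string]string{"sql": "SELECT 1"})
	fmt.Println(out, err)
}
```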

An MCP server for a database will expose all of the database’s functionality through a similar handler. If you want to become more sophisticated, you can also define prompt templates dedicated to your service.

For example, in a healthcare database, you could have dedicated functions for patient health data. This simplifies the experience and provides prompt guardrails to protect sensitive and private patient information while ensuring accurate results. There is much more to learn, and you can dive deeper into MCP here.

Agent2Agent protocol

The Agent2Agent (A2A) protocol, invented by Google, allows AI agents to communicate, collaborate, and coordinate directly with each other to solve complex tasks, regardless of the framework they were built with and without vendor lock-in. A2A is related to Google’s Agent Development Kit (ADK) but is a distinct component and not part of the ADK package.

A2A enables opaque communication between agentic applications. That means interacting agents don’t have to expose or coordinate their internal architecture or logic to exchange information. This gives different teams and organizations the freedom to build and connect agents without adding new constraints.

In practice, A2A requires that agents be described by metadata in identity files known as agent cards. A2A clients send requests as structured messages for A2A servers to process, and servers can provide real-time updates for long-running tasks. You can explore the core concepts in Google’s A2A GitHub repo.

One useful example of A2A is this healthcare use case, where a provider’s agents use the A2A protocol to communicate with another provider in a different region. The agents must ensure data encryption, authorization (OAuth/JWT), and asynchronous transfer of structured health data with Kafka.
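Two of those requirements, encryption and asynchronous transfer, can be sketched with Go's standard library: the sending agent seals a structured record with AES-GCM and drops it on a buffered channel that stands in for a Kafka topic, and the receiving agent opens it. The `HealthRecord` type and the channel-as-topic are assumptions for illustration; OAuth/JWT authorization, real Kafka clients, and key management are deliberately left out.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"encoding/json"
	"errors"
	"fmt"
)

// HealthRecord is a hypothetical structured payload exchanged between providers.
type HealthRecord struct {
	PatientRef string `json:"patientRef"`
	Code       string `json:"code"`
}

// seal JSON-encodes the record and encrypts it with AES-GCM, prefixing a random nonce.
func seal(key []byte, rec HealthRecord) ([]byte, error) {
	plain, err := json.Marshal(rec)
	if err != nil {
		return nil, err
	}
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	return gcm.Seal(nonce, nonce, plain, nil), nil
}

// open reverses seal and fails if the ciphertext was tampered with.
func open(key, msg []byte) (HealthRecord, error) {
	var rec HealthRecord
	block, err := aes.NewCipher(key)
	if err != nil {
		return rec, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return rec, err
	}
	if len(msg) < gcm.NonceSize() {
		return rec, errors.New("message too short")
	}
	nonce, ct := msg[:gcm.NonceSize()], msg[gcm.NonceSize():]
	plain, err := gcm.Open(nil, nonce, ct, nil)
	if err != nil {
		return rec, err
	}
	err = json.Unmarshal(plain, &rec)
	return rec, err
}

func main() {
	key := make([]byte, 32) // AES-256 key; load from a secrets manager in practice
	if _, err := rand.Read(key); err != nil {
		panic(err)
	}
	topic := make(chan []byte, 1) // stand-in for an asynchronous Kafka topic

	msg, err := seal(key, HealthRecord{PatientRef: "p-123", Code: "E11.9"})
	if err != nil {
		panic(err)
	}
	topic <- msg // the producer does not wait for the consumer

	rec, err := open(key, <-topic)
	fmt.Println(rec, err)
}
```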

Again, check out the A2A GitHub repo if you’d like to learn more.

Agent Communication Protocol

The Agent Communication Protocol (ACP), invented by IBM, is an open protocol for communication between AI agents, applications, and humans. According to IBM:

In ACP, an agent is a software service that communicates through multimodal messages, primarily driven by natural language. The protocol is agnostic to how agents function internally, specifying only the minimum assumptions necessary for smooth interoperability.

If you take a look at the core concepts defined in the ACP GitHub repo, you’ll notice that ACP and A2A are similar. Both have been created to eliminate agent vendor lock-in, speed up development, and use metadata to make it easy to discover community-built agents regardless of the implementation details. There is one crucial difference: ACP enables communication for agents by leveraging IBM’s BeeAI open-source framework, while A2A helps agents from different frameworks communicate.

Let’s take a closer look at the BeeAI project to understand ACP’s dependencies. As of now, the BeeAI project has three core components:

- BeeAI platform – To discover, run, and compose AI agents;

- BeeAI framework – For building agents in Python or TypeScript;

- Agent Communication Protocol – For agent-to-agent communication.

What’s next in agentic AI?

At a high level, each of these communication protocols tackles a slightly different challenge for building autonomous AI agents:

- MCP from Anthropic connects agents to tools and data.

- A2A from Google standardizes agent-to-agent collaboration.

- ACP from IBM focuses on BeeAI agent collaboration.

If you’re interested in seeing MCP in action, check out this demo on querying Kafka topics with natural language. Both Google and IBM released their agent communication protocols only recently in response to Anthropic’s successful MCP project. I’m eager to continue this learning journey with you and see how their adoption and evolution progress.

As the world of agentic AI continues to expand, I recommend that you prioritize learning and adopting protocols, tools, and approaches that save you time and effort. The more adaptable and sustainable your AI agents are, the more you can focus on refining them to solve problems with real-world impact.

Adi Polak is director of advocacy and developer experience engineering at Confluent.

—

Generative AI Insights provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss the challenges and opportunities of generative artificial intelligence. The selection is wide-ranging, from technology deep dives to case studies to expert opinion, but also subjective, based on our judgment of which topics and treatments will best serve InfoWorld’s technically sophisticated audience. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Contact doug_dineley@foundryco.com.