RAG (Retrieval-Augmented Generation) is one of the most effective approaches to improve LLM-generated answers. By connecting the model to external sources (e.g., databases, manuals, or PDFs), RAG grounds the LLM in factual, up-to-date information, making its answers more reliable and accurate. A simple RAG system works simply by retrieving the most relevant documents based on a query and using them to produce context-aware answers. However, due to its inherent limitations, the simple system isn’t applicable in practice. That’s why various companies have shifted their focus to advanced RAG. So, what is advanced RAG, exactly? How can advanced RAG help your business overcome the challenges of traditional systems? Keep reading and explore every must-know aspect of this approach with Designveloper!

What is Advanced RAG?

An advanced RAG system often goes beyond the basic setup, which primarily covers simple document retrieval and response generation. It adds sophisticated components and techniques to the pipeline, aiming to make retrieval and generation smarter, more useful, and more reliable in real-world use cases.

Below are some notable aspects of advanced RAG you often see in practice:

- Rewrites or expands a user’s query to make it clearer for the retriever to interpret and search for the stored documents closer to the user’s intent.

- Adds more retrieval layers to fine-tune its searches.

- Fetches information from different sources at once and synthesizes results before delivering them to the LLM.

- Handles and fuses different types of data, including structured, unstructured, and even multimodal data.

- Reranks and filters the most relevant documents to prioritize those closest to the user’s query, instead of only using the top N search results.

- Integrates past interactions and user profiles (e.g., personal information and preferences) for tailored, context-aware retrieval.

- Adds evaluation techniques, like LLM Guardrail and LLM Judge, to improve the reliability of responses and reduce hallucinations.

Why Advanced RAG Matters?

Advanced RAG matters since it handles the natural limitations of simple or naive RAG.

Limitations of Naive RAG

As we already know, a simple RAG system receives a user’s query, performs similarity searches in a vector store, retrieves the top-N documents once, and generates context-aware answers. Sounds simple, right?

But several real-world challenges make this simple approach impossible and useless in various use cases. In reality, user queries may be too vague or unclear. For example, when a user says, “Show me paid annual leave,” the system may not understand whether he wants to know about the company’s paid annual leave policy or how much leave he has left.

Besides, a company’s data in various forms is often scattered across different sources, instead of being gathered in a unified place. However, a simple RAG system lacks the capabilities to retrieve data from multiple sources at once.

Another problem of naive RAG is its inability to retrieve session history and user profiles to produce personalized answers. Additionally, nothing ensures that one-time retrieval enables the system to pull out all the most pertinent information (without being blended with irrelevant data). These limitations make simple RAG fail to solve many cases, especially business-critical tasks (e.g., medical diagnosis or legal advisory). That’s why advanced RAG comes in.

The power of advanced RAG

Advanced RAG helps the system process complex, ambiguous queries, interpret nuanced context, and work with different data types. By adopting advanced techniques (like query transformation, re-ranking, or fast-checking) throughout the RAG pipeline, you can:

- Pull the truly relevant documents through multi-layered retrieval to generate more precise and trustworthy responses.

- Handle diverse domains, data types, and retrieval sources at scale, which is essential when a company’s search demand or databases increase.

- Minimize the likelihood of fabricating answers through guardrails, cross-validation, and other optimization techniques.

- Make answers closer to end users by fusing session history, user profiles, and other context into the LLM’s responses.

- Ensure compliance and avoid legal or reputational risks by enabling hybrid searches (keyword matches + semantic searches), applying guardrails (e.g., redacting sensitive data before retrieval), and avoiding generic responses.

Besides, different advanced RAG architectures bring diverse benefits. Two common RAG advancements now include multimodal RAG and Knowledge Graph.

- Multimodal RAG allows enterprises to incorporate diverse sources, like images, audio, and structured data, to enrich contextual grounding, improve generation quality, and enhance reasoning.

- Knowledge Graph RAG, or GraphRAG, integrates a knowledge graph into retrieval, hence improving the LLM’s capability of creating more accurate and contextual responses. GraphRAG requires 26% to 97% fewer tokens, hence enabling greater efficiency and reducing compute power. It also recorded higher accuracy and lower response times (with an exceptional 86.31% on the RobustQA benchmark) than traditional RAG approaches.

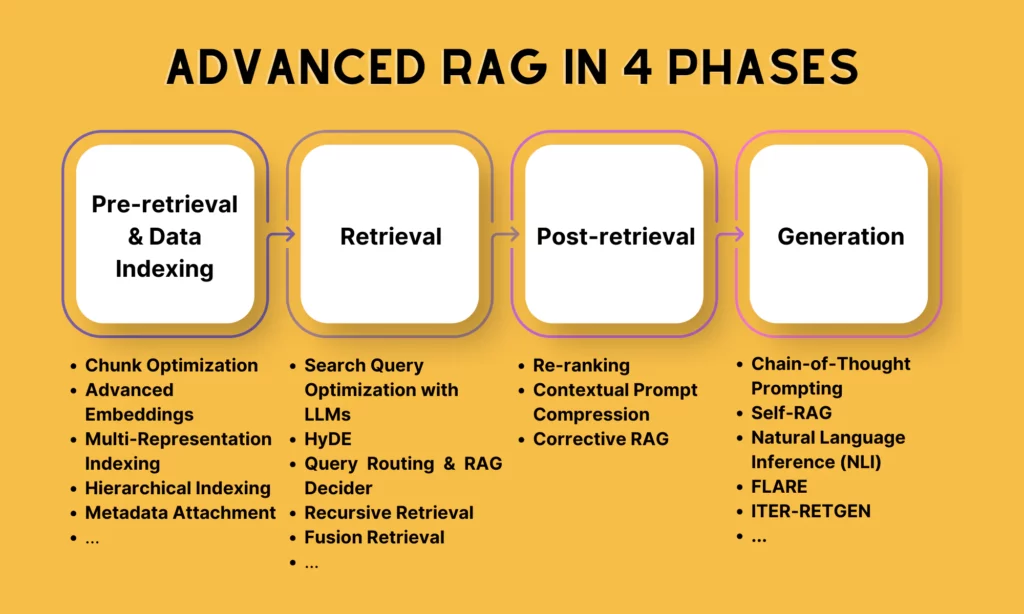

Classifying Advanced Techniques in RAG

Advanced RAG techniques are fed into each processing stage (data indexing, retrieval, post-retrieval, and generation) to improve the accuracy and efficiency of the whole system. Now, we’ll dive deep into each phase to see how advanced techniques are adopted to optimize the entire RAG process:

Pre-Retrieval and Data-Indexing Techniques

These techniques aim to prepare and pre-process data so that later retrieval can work faster, more accurately, and in alignment with a user’s true intent. They focus on cleaning, organizing, and improving data before it’s stored in a vector database.

- Chunk Optimization – Modify chunk sizes and overlaps to balance context and the LLM’s token limits.

- Advanced Embeddings – Leverage strong embedding models (e.g., E5 or BGE-large) to encode sentence-level or document-level meaning effectively. These models also work well across languages and can be fine-tuned for your specific domain, hence improving retrieval accuracy.

- Multi-Representation Indexing – Create and store alternative versions of documents within the retrieval system for faster extraction, especially when your app often handles complex queries, accesses diverse document formats, and requires deeper semantic understanding.

- Hierarchical Indexing – Structure data in layers (summaries/overviews → details). This technique allows your system to search for relevant sections from high-level summaries first and then navigate down to more detailed chunks, leading to better context understanding and more accurate outputs.

- Metadata Attachment – Add tags (e.g., author, date, or type) to improve filtering accuracy.

- Information Density with LLMs – Clean raw data by using LLMs to summarize and label the information without losing its original meaning. This reduces noise and keeps only the crucial information, hence enhancing the accuracy of downstream tasks.

- Deduplication & Cleaning – Remove redundant data and outdated information to minimize noise and improve data granularity.

- Multi-indexing Strategies – Use strategies like sentence-window retrieval or auto-merging retrieval to fetch more relevant context.

Retrieval Techniques

These advanced RAG techniques focus on processing queries to make them clearer for the system to interpret and improving retrieval to find the most relevant information. They include:

- Search Query Optimization with LLMs – Increase the quality of a user’s query to enhance retrieval quality. Several common methods include rephrasing or expanding queries, creating sub-queries for complex issues, and understanding the query’s context deeply.

- HyDE (Hypothetical Document Embeddings) – Create a hypothetical answer or document for a query, embed the generated document instead of the original query, and use the embedding to retrieve the most relevant documents. This handles query-document asymmetry.

- Query Routing & RAG Decider – Route queries to the right database and identify when to extract or let the LLM answer independently. These techniques, therefore, increase retrieval efficiency and preserve resources from unnecessary retrieval.

- Router Retrieval – Dynamically choose the best data source or search method.

- Recursive Retrieval – Perform follow-up queries iteratively for deeper data discovery.

- Auto Retrieval – Automatically create metadata filters or queries by using LLMs.

- Fusion Retrieval – Use algorithms like RRF (Reciprocal Rank Fusion) to break the original query into different sub-queries, perform separate searches for each, and combine various extracted results.

- Auto Merging Retrieval – Build a hierarchical structure of “parent” (unified versions of related chunks) and “child” (smaller chunks) nodes, giving the LLM a larger, consistent context.

- Fine-tuned embeddings – Tailor embeddings for domain-specific retrieval.

- Dynamic embeddings – Modify embeddings based on query context to offer more nuanced context understanding.

- Hybrid Search – Combine semantic search and keyword matches.

Post-Retrieval Techniques

When documents are retrieved, nothing ensures that they’re the most relevant to a user’s query. That’s why post-retrieval techniques help filter, re-rank, and compress these documents before sending them to the LLM. This helps the model identify the most useful context.

- Re-ranking – Reorganize initially extracted documents based on their relevance to a query.

- Contextual Prompt Compression – Filter and compress retrieved data to remove unnecessary or excess content, allowing the LLM to focus on the most crucial details before fusing them into the final prompt.

- Corrective RAG – Use a self-correction mechanism that enables the LLM to self-assess its own outputs and improve them iteratively.

Generation Techniques

These techniques in the generation phase help the LLM to produce a context-aware, factually grounded answer based on the retrieved information. They aim to make outputs more precise, convincing, and even self-correcting.

- Chain-of-Thought Prompting – Guide the LLM to reason through irrelevant and noisy context, enhancing response accuracy.

- Self-RAG – Use a critique mechanism to assess the factual accuracy, relevance, and usability of generated outputs.

- LLM Fine-Tuning – Train the LLM to ignore irrelevant data.

- Natural Language Inference (NLI) – Cross-check extracted data against generated responses to avoid hallucinations.

- FLARE (Flexible Retrieval Triggering) – Allow the LLM to proactively decide whether it needs extra retrieval mid-generation.

- Tree of Clarifications (ToC) – Handle multi-faceted or ambiguous questions by finding various possible meanings of a query, arranging them in a tree structure, and extracting supporting evidence before answering.

- ITER-RETGEN (Iterative Retrieval-Generation) – Refine retrieval and generation iteratively to enhance response accuracy and relevance.

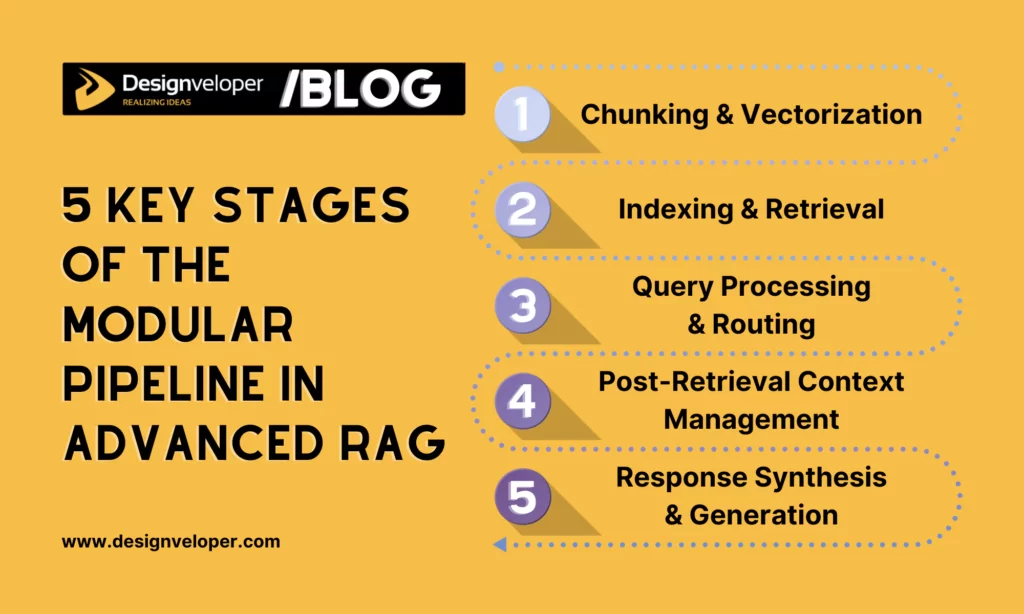

5 Key Stages of the Modular Pipeline in Advanced RAG Explained

You’ve understood the importance of advanced RAG and its common techniques to improve the system’s retrieval and generation. It’s time to see how you can build an advanced RAG system using these sophisticated components. This modular pipeline includes five key phases, from chunking and vectorizing documents to merging results and generating suitable responses.

Stage 1. Chunking & Vectorization

The first stage involves pre-processing external data sources from which LLMs or transformer models (e.g., BERT or OpenAI ada) will absorb and retrieve.

First, long documents need chunking. As the models have a maximum input length (called context window and measured in tokens), they can’t handle too long documents. That’s why splitting them into smaller and semantically coherent chunks is essential. However, the chunk size is a big concern. A small chunk will create a numerical vector that closely reflects its meaning, but possibly lose broader context. Meanwhile, a too-large chunk makes an embedding generic, dilutes meaning, and slows down retrieval.

The sweet spot here may depend on different factors, like an embedding model’s token capacity, document types, and retrieval strategies. But you can adopt advanced techniques to improve chunking, like semantic chunking, sliding window, and overlapping. These techniques keep important context across chunks and mitigate information fragmentation.

Further, you should enrich chunks with metadata (like author, date, topic tags, or source). These enrichments help the system filter out irrelevant, outdated information and improve accuracy.

Next, every chunk is converted into a vector representation using embedding models. By selecting the right search optimized model (e.g., OpenAI, Cohere, or BGE, depending on your specific use cases), you can improve the system’s ability to capture semantic similarity.

Stage 2. Indexing & Retrieval

Next, you’ll adopt advanced techniques to improve the capabilities of search indices (“vector stores” or “vector databases”) and retrieval. This allows the system to fetch relevant documents and chunks efficiently.

Vector Stores & Hierarchical Indices

In naive RAG, the system will search for the documents whose vectors are closest to the query’s embeddings. This approach works well for small datasets, but doesn’t scale with the increasing search demands. Therefore, in large-scale RAG projects, you should combine vector stores with ANN (Approximate Nearest Neighbor) algorithms like FAISS, HNSW, Annoy, or NMSLIB to search through millions of chunk vectors efficiently.

Further, consider employing managed vector databases like Chroma, Pinecone, or Weaviate. These stores enable ANN search, metadata filtering, and scalability, hence making retrieval scalable and fast.

Additionally, using multi-level (hierarchical) retrieval also increases accuracy while saving compute. This technique allows the system to find a summary index and continue searching only inside the retrieved chunks.

Hypothetical Questions (HyDE) & Synthetic Queries

A user query is often short, while a document chunk is often long. In naive RAG implementation, when you compare the vectors of the short query and the long chunk, they don’t always fit well semantically. This leads to the “asymmetry” phenomenon. That’s why HyDE comes in to handle this problem. Instead of directly embedding the query, you ask the LLM to create a hypothetical document to the query (“synthetic query”). Then, embed this generated document and use the vector to extract relevant information.

Hybrid Search & Fusion Retrieval

Hybrid search is an ideal option for complex tasks that require exact keyword matches and semantic understanding. But the challenge is that keyword-based methods (BM25) and vector search create results with different scoring systems, making it hard to rank the results fairly.

A common solution is using the RRF (Reciprocal Rank Fusion) algorithm to merge results from both methods into one coherent list. Tools like LangChain’s Ensemble Retriever and LlamaIndex’s hybrid retrievers support ranking strategies like RRF to implement hybrid search efficiently and improve ranking quality.

Stage 3. Query Processing & Routing

Sometimes, the reason for poor-quality responses doesn’t come from the quality of external documents and retrieval accuracy, but from the query itself. A user’s query can be vague or too complex. That’s why query optimization is essential for the system to better understand the user’s true intent.

Query Expansion & Transformation Techniques

The RAG pipeline uses an LLM to rewrite, expand, or transform the query before searching. Several common techniques include:

- Query Rewriting: Reformulates the query in a clearer way. For example, the query “LangChain popularity GitHub stars” can be rewritten to “How many GitHub stars does LangChain currently have?”

- Sub-Query Decomposition: Split a complex query into smaller ones. Retrieval is accordingly run on each sub-query, and the system then merges all the retrieved results. Tools like LangChain’s MultiQueryRetriever or LlamaIndex’s Sub-Question Query Engine help you handle this task.

- Step-Back Prompting: Expand the query to capture more useful context.

- Reference Citations: Ensure responses can link back to their sources.

Query Routing Across Multiple Retrievers/Indices

Different queries contain different intents. By employing query routers, the RAG system may decide what to do next and where to send a query. For instance, if a user wants to summarize customer feedback on shipping delays, the system will route the query to the right index (e.g., a vector store), pull out relevant information, and summarize results. Several RAG frameworks now support query routing, like LangChain’s Multi-Query Retriever or LlamaIndex’s QueryRouter.

Use of Agents in Retrieval Paths

Integrating agents into the retrieval process makes your RAG more flexible and adaptable. In particular, these LLM-powered agents can dynamically decide how to process a user query (e.g., decomposing), what to retrieve, from which indices, and how to merge results. Today’s common RAG frameworks (e.g., LangChain or LlamaIndex) assist with creating agents to chain different actions within the pipeline or even combine multi-step reasoning across retrieval systems.

Stage 4. Post-Retrieval Context Management

Raw retrieved chunks can contain repeated or irrelevant information. Further, as LLMs have max tokens, you can’t throw everything the system found in the prompt. Therefore, after retrieval, you need a refinement step to ensure the context is clean, concise, and useful. Below are several techniques you can adopt in this phase:

Auto-merging, Deduplication & Noise Filtering

Your raw list of retrieved chunks may have some problems. For example, one chunk may end mid-sentence, and another starts with the same sentence. So, before fusing them into a prompt, the system can remove duplicates and even text (e.g., headers or disclaimers) that doesn’t offer much value before automatically merging them smoothly. Some RAG frameworks support deduplication, noise filtering, and auto-merging through tools, like LlamaIndex’s NodePostprocessor classes (e.g., SimilarityPostprocessor or DuplicateRemoverPostprocessor).

Context Window Management & Late Chunking

The language models have max tokens, and if you extract more text than that context window, the system will dynamically decide what to keep or remove and re-break down the chunks (“late-junking”) if needed to capture context efficiently. You can leverage some tools like LlamaIndex’s SentenceWindowRetriever and ContextWindowPostprocessor to manage window packing.

Contextual Reranking & Critique-Driven Filtering

Once you’ve had a raw list of retrieved documents, you can rerank them to surface the most relevant ones. This is done with different tools like LlamaIndex’s Postprocessors or LangChain’s rerankers (e.g., CohereRerank or cross-encoders from Hugging Face).

Stage 5. Response Synthesis & Generation

Finally, the LLM receives the refined context and puts it, along with the original query, into the prompt to generate the final response.

Prompt Engineering for Structured Outputs

Without guidance, the language models can create free-flowing answers, which don’t meet your expectations. Therefore, you need to carefully design good prompts that require the model to return summaries, bullet points, formatted outputs, or any form you want. Besides, these prompts can help the LLM control the response’s length and guide it to say “I don’t know” or “Sorry, I can’t help you with this problem” if there’s not enough evidence to back up. Some RAG frameworks, like LangChain or LlamaIndex, provide customizable prompt templates and also allow you to create your own.

In-Context Learning Strategies for LLMs

You can guide the LLM to reason by using examples inside the prompt. Some advanced techniques you can adopt for in-context learning include:

- Few-Shot Prompting – Provide examples of questions and good answers.

- Chain-of-Thought Prompting – Enable the LLM to simulate structured reasoning by teaching it reason step by step.

- Scaffolded Reasoning – Instruct the LLM to think through intermediate reasoning steps. This reduces hallucinations, ensures coverage of all important details, and enables easier debugging, especially when the system has to handle complex queries.

Tools like LangChain’s chains (map_reduce) or FewShotPromptTemplate help the system with reasoning.

Response Synthesizer Modules (Multi-Stage LLMs)

Your RAG system can go through various stages of LLMs to fine-tune retrieved context and produce better answers.

1. Send multiple chunks of retrieved context to the model to iteratively fine-tune the response.

2. Summarize the retrieved context to fit the prompt.

3. Produce various answers for different context chunks and summarize or merge them.

In LangChain, you can build an orchestration of chains or agents that perform multi-LLM flows. Haystack also supports chaining nodes through the Pipeline abstraction.

Key Use Cases of Advanced RAG in the Enterprise

Advanced RAG helps enterprises handle various complex Q&A tasks, domain-specific queries, scattered data sources, and personalized recommendations.

Complex Q&A Tasks

If responses require more than simple retrieval, from filtering and re-ranking to query routing and agent reasoning, Advanced RAG is a better option. This is because these sophisticated techniques can help fine-tune search results, remove irrelevant information, and focus on only the most accurate content.

They also enable the system to go through multiple reasoning steps, get in-depth insights from various documents, and offer responses that reflect both context and intent. This helps enterprises produce more accurate, reliable, and context-aware answers for employees, customers, and decision-makers.

For example, advanced RAG enables executives and managers to sift through not only structured data but also unstructured documents (e.g., reports or emails) to gain context-aware insights for decision-making.

Domain-Specific Queries

Advanced RAG is especially useful in strictly regulated industries, like healthcare, law, and finance, where precision and compliance matter.

For example, traditionally, legal or compliance teams must manually review contracts, policies, and transactions against evolving compliance rules. This process is slow and error-prone.

But advanced RAG enables domain-specific retrieval to scan across legal documents, internal policies, and regulations to filter and re-rank content based on compliance requirements, hence identifying only risky causes and surfacing the most relevant content. This helps organizations reduce compliance risks, lower legal review costs, and prepare for audits more efficiently.

Scattered Data Sources

Many companies often store knowledge in scattered sources, like internal databases, wikis, emails, or PDFs. Searching through these sources manually is a waste of time, and results may not be complete or accurate.

Advanced RAG accelerates this process by retrieving data from different sources and adopting sophisticated techniques (e.g., chunk optimization, advanced embeddings, hybrid retrieval, query routing, and reranking). By doing so, employees can access precise knowledge instantly, avoiding duplicated work and decision delays.

Personalized Recommendations

Advanced RAG combines external knowledge, past interactions, and user profiles to pull content that matches a user’s true intent. This allows the system to offer personalized responses to users, making advanced RAG helpful in areas like customer support in e-commerce or content platforms.

Limitations and Risks of Using Advanced RAG Systems

Enterprises can gain a lot from advanced RAG. However, they also have to face unexpected challenges from these sophisticated techniques.

Complexity & Maintenance Overhead

A simple RAG system is already considered complex due to its various components. In advanced RAG implementation, this complexity even increases exponentially depending on which components (e.g., multiple retrieval methods, query routers, agents, or postprocessors). This makes it hard to debug and maintain the RAG system. If any failure occurs in any layer (e.g., retrieval or routing), it’s hard to track.

Data Security & Compliance

The complexity of advanced RAG systems can make them vulnerable to security risks if not handled securely. Especially if you rely on agent-based RAG rather than modular RAG, data security risks may become worse, as this approach allows agents to dynamically perform certain tasks that involve sensitive data handling. This may put your company at risk, raising privacy and compliance concerns.

Latency

Combining additional steps (e.g., hybrid search, reranking, or agent orchestration) into a single RAG pipeline can slow down response time. Additionally, this also increases API and infrastructure costs and compute power.

How Designveloper Helps Your Business Integrate RAG Effectively

Designveloper is Vietnam’s leading company in web, software, and AI development. Our excellent team of 100+ developers, designers, AI specialists, and other professionals has 12 years of experience in building optimal solutions for clients across industries.

We have extensive technical expertise across 50+ modern technologies, covering emerging innovations, like LangChain, CrewAI, or AutoGen, to build RAG-powered systems that connect LLMs seamlessly with enterprise databases. These products integrate advanced features, from memory for multi-turn conversations to API connectivity for live data retrieval, to increase user experiences and bring financial impact for our clients.

With our proven Agile approaches (SCRUM, Kanban) and dedication to excellence, we’ve completed 200+ successful projects, typically LuminPDF, ODC, and Chloe Ting Hub. Our work has received high appreciation and an impressive 4.9 rating on Clutch. Whether you need an AI chatbot or enterprise-grade automation software, Designveloper has the right expertise and tools to deliver. Contact us now and discuss your idea further!

FAQs:

What advanced RAG algorithms should I know?

There are various advanced RAG algorithms to consider, depending on your specific use case. Some of them include:

- Re-ranking RAG: After receiving the retrieved documents through vector similarity, a secondary model (often a cross-encoder like CohereReranker or sentence-transformer) reorders results to prioritize the most relevant content.

- Fusion-in-Decoder (FiD)/Late Fusion: All the retrieved chunks are not fused into one prompt. Instead, each extracted chunk is encoded separately for the decoder to examine during generation. This prevents context overflow and avoids diluting answers.

- Query Decomposition & Multi-hop Retrieval: Complex queries are split into sub-queries (query decomposition). Each sub-query goes through a retrieval step, and the results are merged together (multi-hop reasoning).

- Contextual Query Routing: Uses an agentic router to decide which indices to query and what to do next (e.g., summarizing or performing multi-step retrieval).

- Feedback-Augmented RAG/Self-RAG: The model self-evaluates its retrieved context and requires extra retrieval if needed.

- Memory-Augmented RAG: Add a long-term memory layer to improve retrieval.

Is advanced RAG more secure than traditional RAG?

No, advanced RAG is not inherently safer than traditional RAG. The security of a certain RAG system depends greatly on specific security measures your team applies, not just whether it’s simple or advanced. Further, advanced techniques are added to the RAG pipeline just to enhance the accuracy and relevance of LLM-generated responses, rather than improving security. Due to the complex components added, an advanced RAG system may be more vulnerable than a traditional one if not properly protected.

Therefore, whether you plan to develop a simple or advanced RAG solution, pay more attention to security best practices and measures to prevent risks, like prompt hijacking, LLM log leaks, or RAG poisoning.

How do modular and agent-based RAG compare?

Modular and agent-based RAG are two different architectural approaches that retrieve information and reason through context differently.

In particular, modular RAG is a pipeline-based framework that includes a fixed sequence of multiple changeable components (e.g., data sources, retrievers, embedding models, or vector stores). This architecture is predictable and deterministic, which means the system generates the output based on the given input. Because of this, developers can easily track errors and adjust the system’s performance stage by stage.

Meanwhile, agent-based RAG, or agentic RAG, is a flexible approach in which the LLM acts as an independent agent. The LLM (often via tools like LangChain agents or function calling) identifies when, how, and from where to fetch information. Further, it decides which retrievers, APIs, or reasoning methods to dynamically use during generation. For instance, it can reformulate a query or call a finance-specific retriever. For this reason, agent-based RAG is considered non-deterministic and unpredictable, making debugging and optimization harder.

How can context coherence be maintained in large-scale RAG?

When your RAG system handles multiple documents and retrievers at once, it might risk losing consistency in context. In other words, the generated responses can be contradictory, off-topic, and fragmented. To maintain context coherence in large-scale RAG, you should:

- Use indexing & chunking strategies (e.g., contextual chunking, metadata & overlapping)

- Adopt advanced retrieval techniques (e.g., multi-pass search, dynamic thresholds)

- Improve the prompt with context and examples

- Dynamically update context in multi-turn conversations.

What are the trade-offs between late chunking and contextual retrieval?

Contextual retrieval and late chunking are two common approaches in advanced RAG. The former uses an LLM to enrich each chunk with extra information, hence offering nuanced context but increasing compute power and efficiency. Meanwhile, the latter prioritizes computational efficiency and simplicity by converting the entire document into embeddings first and then breaking down the vectors. Consider the desired balance between performance, precision, and cost to choose the right approach.

Your ability to distill complex concepts into digestible nuggets of wisdom is truly remarkable. I always come away from your blog feeling enlightened and inspired. Keep up the phenomenal work!

Your blog is a treasure trove of valuable insights and thought-provoking commentary. Your dedication to your craft is evident in every word you write. Keep up the fantastic work!