Chatbots have long served as capable assistants for customer and employee support. As technology advances, especially in AI, demand for smarter chatbots has grown. People are no longer satisfied with digital assistants that give instant answers but forget everything when the chat ends. They’re looking for AI-powered chatbots that can hold multi-turn conversations without making users repeat every detail of past interactions. This need gave rise to the AI chatbot with memory. So, what is this type of chatbot, exactly? How can you develop an intelligent assistant with long-term memory? Keep reading to find the answers in today’s article!

What Is an AI Chatbot with Memory?

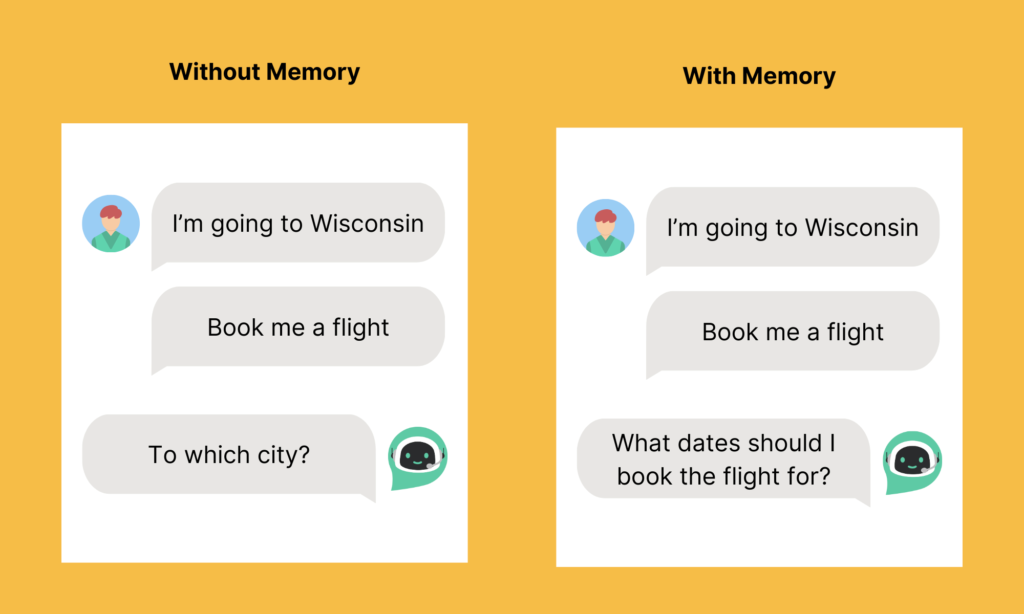

An AI chatbot with memory is an advanced version of a traditional AI bot. It not only understands your natural language queries and offers instant responses but also stores and recalls key information (e.g., names, preferences, or previous questions) from past interactions, so users don’t have to repeat every detail.

Why Does Memory Matter in Conversational AI?

What if you come back to a cafe a second time and the staff still remembers your favorite drink? That leaves a positive impression and makes you want to come back again and again. The same applies when conversational AI chatbots can recall and use previous user inputs.

AI chatbots are on the rise, with a compound annual growth rate of 31.1%. However, we predict that memory-powered bots will become much more popular. Here’s why:

- Perform multi-turn conversations. By recalling past messages, AI chatbots can maintain context and flow across exchanges. This is especially useful when the conversational AI handles follow-up questions or guides you through multiple steps toward a goal. For example, You: “What is the earliest flight from Chicago to Madison on August 27?” Flight Bot: “The earliest flight departs at 8:12 am. Would you like the flight details?” You: “No. How about the next day?” Without memory, the flight chatbot in this example could hardly tell what your follow-up question is about.

- Offer personalized experiences. Storing and recalling past interactions allows AI chatbots to tailor their responses to your query, profile, and preferences. For example, an eCommerce chatbot can remember which products customers cared about in previous sessions and use this information to suggest suitable products even when they start a new conversation. This improves their experience and moves customers further down the sales funnel.

- Enable task continuity. AI chatbots with memory keep conversations connected even when interruptions occur. By storing, recalling, and using previous exchanges, they can pick up an interaction where it left off.

- Enhance efficiency. AI chatbots use stored information to keep user interactions seamless, sparing users from repeating their issues or personal information. This reduces dissatisfaction and boosts operational efficiency.

5 Types of Chatbot Memory

Not all conversational AI assistants use the same types of memory. Below are five types of chatbot memory you should know before building a memory-based AI chatbot:

Short-Term Memory (Context Window)

The short-term memory of an AI chatbot refers to its ability to recall the text within a single conversation, as long as that text fits inside the LLM’s context window. What does this mean? Let us explain!

First, a bot’s short-term memory is session-based. It can store and remember the context within the current conversation. This allows the AI bot to understand incomplete sentences, follow-up questions, and references without users repeating each detail. When the chat ends, the memory is erased. If you start a new conversation, you have to provide all the information… again!

For example, you tell a flight bot, “I plan to travel from Chicago to Madison.” Later, in the same chat, you say, “Find me the cheapest flight on August 27.” Even though you don’t mention the departure and arrival locations, the bot still understands your incomplete sentence thanks to its short-term memory. But when you close the chat and come back tomorrow, the bot will have forgotten everything, and you’ll have to repeat every detail once again.
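To make the session-based behavior concrete, here is a minimal sketch in plain Python. The `SessionMemory` class and its method names are our own illustrative assumptions, not part of any chatbot library; a real bot would pass the result of `as_context()` to an LLM rather than just building a string.

```python
class SessionMemory:
    """Keeps the messages of one chat session and forgets them when it ends."""

    def __init__(self):
        self.messages = []  # (role, text) pairs for the current session only

    def add(self, role, text):
        self.messages.append((role, text))

    def as_context(self):
        # Everything said so far in this session, oldest first.
        return "\n".join(f"{role}: {text}" for role, text in self.messages)

    def end_session(self):
        # Short-term memory is erased when the chat is closed.
        self.messages.clear()


memory = SessionMemory()
memory.add("user", "I plan to travel from Chicago to Madison.")
memory.add("user", "Find me the cheapest flight on August 27.")
context_during_chat = memory.as_context()  # still contains the Chicago-Madison route

memory.end_session()
context_next_day = memory.as_context()  # empty, so the route must be repeated
```

While the session is open, the second request can be resolved against the route mentioned earlier. After `end_session()`, nothing is left to resolve it against.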

Second, a bot’s short-term memory is limited to the model’s context window: the amount of text (measured in tokens) the model can process at once. A token can be a word, part of a word, or a punctuation mark. In the example above, the sentence “I plan to travel from Chicago to Madison.” comes to roughly 9 tokens, though the exact count depends on the tokenizer. Each LLM has its own context window limit; for instance, GPT-4o has a maximum context window of 128K tokens. When you reach that limit, even within the same chat, the following problems may occur:

- Older parts of the chat fall out of the context window, making the chatbot forget earlier messages. This can lead to a lack of coherence in the conversation.

- When some details are lost, the chatbot may try to guess the missing context, which can produce incorrect or irrelevant responses to a user’s query. This problem is often known as “hallucination.”
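The first problem, older messages falling out of the window, can be sketched as a simple trimming routine. This is an illustration under loud assumptions: `count_tokens` just counts whitespace-separated words, whereas a real model uses its own tokenizer, and `trim_to_budget` is a hypothetical helper name of ours.

```python
def count_tokens(text):
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def trim_to_budget(messages, max_tokens):
    """Keep the most recent messages whose combined token count fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older falls out of the window
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "I plan to travel from Chicago to Madison.",  # 8 words
    "Find me the cheapest flight on August 27.",  # 8 words
    "Do you have anything before noon?",          # 6 words
]
window = trim_to_budget(history, max_tokens=15)
```

With a budget of 15 "tokens," only the last two messages fit, so the route from the first message is no longer visible to the model. That is exactly the kind of gap the bot might then try to fill by guessing.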

Long-Term Memory

The long-term memory of an AI chatbot refers to its ability to store past information and fetch it in future chat sessions. It’s also known as “persistent memory,” which lasts beyond a single conversation and remains available until it’s deleted or updated. Like human memory, the process of retaining past data or interactions for the long term covers three stages:

- Identifying key information or summarizing conversations: AI chatbots capture crucial information from user messages (e.g., names) or condense long conversations into concise summaries to save storage or boost retrieval speed.

- Indexing data: Data is organized and stored in memory stores. Such stores can be:

  - in-memory stores (e.g., Redis) for fast, temporary short-term context.

  - databases (SQL, NoSQL) for structured data.

  - vector databases (e.g., Milvus, Pinecone) for embedding conversation history and performing semantic search later.

- Retrieving and using the most relevant data: Using RAG (Retrieval-Augmented Generation), AI chatbots can retrieve relevant data from memory stores based on a user message. The retrieved context is then fed into the LLM (Large Language Model) prompt to generate a contextually aware, personalized, and coherent response.
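The three stages above can be sketched end to end in a few lines. Treat this as a toy under stated assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, the `LongTermMemory` class stands in for a vector database, and the stored facts are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    # Toy "embedding": word counts (a real system would call an embedding model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LongTermMemory:
    def __init__(self):
        self.records = []  # (text, vector) pairs; stands in for a vector database

    def store(self, text):
        # Stages 1 and 2: keep the key fact and index it by its vector.
        self.records.append((text, embed(text)))

    def retrieve(self, query, k=2):
        # Stage 3: rank stored facts by similarity to the new message.
        query_vec = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(query_vec, r[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = LongTermMemory()
memory.store("User's name is Alex")
memory.store("User prefers morning flights")
memory.store("User asked about hotels in New York")

question = "Which flights should I look at?"
relevant = memory.retrieve(question, k=1)
prompt = f"Known facts: {'; '.join(relevant)}\nUser: {question}\nBot:"
```

Only the fact about morning flights shares a word with the question, so it is the one placed into the prompt. A production system would swap the word counts for dense embeddings, which can also match paraphrases that share no words.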

Compared with short-term memory, persistent memory brings more benefits. First, it allows users to continue their tasks where they left off without repeating everything. Second, the AI chatbot can personalize responses over the long term by recalling and using a user’s profile, habits, and historical exchanges. Third, AI chatbots with long-term memory can more seamlessly handle complex, ongoing tasks that require progress over time (e.g., project planning or therapy). Finally, accumulated memory data can uncover trends among customers. This provides your business with actionable insights to improve services and enhance workflows.

Contextual Memory

The contextual memory of an AI chatbot refers to its ability to selectively store pieces of information relevant to a particular topic or conversation thread you recently talked about. It doesn’t necessarily remember everything forever. Instead, it only recalls the context and information that makes the conversation flow naturally.

For example, you ask a chatbot about “the earliest flight from Chicago to Madison on August 27.” The chatbot will remember every piece of information related to the flight, like your departure, destination, date, and budget, while you’re still in the same chat session. If you switch topics, like “find me the best hotel in New York,” the bot will set that memory aside and switch to the stored information for the new topic.

Imagine you then say, “Find me a flight on American Airlines.” The bot will use keywords in your query (“flight”) to determine which conversation thread you’re referring to and then switch back to the previous topic (flights). This capability allows a chatbot with contextual memory to jump between different conversation threads without losing track of the relevant details for each.

Contextual memory is sometimes confused with short-term memory. The key difference between them lies in how they keep tokens. Short-term memory, in particular, keeps all current tokens in the context window, regardless of your topic. Meanwhile, contextual memory retains only the necessary details for a particular topic, making your chat session more targeted and efficient.
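The thread-switching behavior in the flight and hotel example can be sketched as follows. The keyword table and the `ContextualMemory` class are illustrative assumptions of ours; a real system would more likely use an intent classifier or embeddings than a fixed keyword list.

```python
import re

# Hypothetical keyword table used to decide which thread a message belongs to.
TOPIC_KEYWORDS = {
    "flight": {"flight", "flights", "airline", "airlines"},
    "hotel": {"hotel", "hotels", "room"},
}


class ContextualMemory:
    def __init__(self):
        self.threads = {}        # topic -> messages that belong to that thread
        self.active_topic = None

    def detect_topic(self, message):
        words = set(re.findall(r"[a-z]+", message.lower()))
        for topic, keywords in TOPIC_KEYWORDS.items():
            if words & keywords:
                return topic
        return self.active_topic  # no keyword found: stay on the current thread

    def add(self, message):
        self.active_topic = self.detect_topic(message)
        self.threads.setdefault(self.active_topic, []).append(message)
        return self.threads[self.active_topic]  # only this thread's context


memory = ContextualMemory()
first = "What is the earliest flight from Chicago to Madison on August 27?"
second = "Find me the best hotel in New York."
third = "Find me a flight on American Airlines."

memory.add(first)
memory.add(second)
flight_context = memory.add(third)  # switches back to the flight thread
```

After the third message, `flight_context` holds the two flight messages and nothing about the hotel, which is the targeted context the section describes.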

Episodic Memory

The human brain is capable of recalling experiences related to a specific context, time, and place. This is often known as ‘episodic memory’. In AI, this type of memory allows chatbots to index and recall specific events (“episodes”) from historical interactions. These episodes often include the full conversation or important details of a specific event (e.g., a complaint or an appointment).

So, how does episodic memory work in AI? Each episode is stored as a package of data covering a conversation’s content, metadata (like user ID or intent), timestamps, and relevant context. When a user sends a query, the chatbot retrieves the full episode, or the parts of it related to the question, to make the conversation flow naturally. Unlike long-term memory, episodic memory organizes information narratively.

Imagine you’re talking to a chatbot about flight booking.

Day 1:

You: “I booked a flight to Madison on August 27th.”

The chatbot will save this as an episode – Flight booking: Madison, date: August 27th.

Day 10:

You: “Can you remind me when my Madison flight is?”

The chatbot will remember that specific episode and say, “Your flight to Madison is on August 27th.”
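The Day 1 and Day 10 exchange can be sketched with a small episode record. The `Episode` fields mirror the package of data described earlier (content, metadata, timestamp), but the class names, the user ID, the intent label, and the calendar year are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Episode:
    user_id: str
    intent: str
    summary: str
    recorded_on: date
    details: dict = field(default_factory=dict)


class EpisodicMemory:
    def __init__(self):
        self.episodes = []

    def record(self, episode):
        self.episodes.append(episode)

    def recall(self, user_id, intent, keyword):
        """Return the latest episode for this user and intent whose summary mentions the keyword."""
        matches = [
            e for e in self.episodes
            if e.user_id == user_id
            and e.intent == intent
            and keyword.lower() in e.summary.lower()
        ]
        return max(matches, key=lambda e: e.recorded_on, default=None)


memory = EpisodicMemory()

# Day 1: the booking is saved as one episode (the year is a made-up placeholder).
memory.record(Episode(
    user_id="user-1",
    intent="flight_booking",
    summary="Booked a flight to Madison",
    recorded_on=date(2025, 8, 1),
    details={"destination": "Madison", "flight_date": "August 27th"},
))

# Day 10: the user asks about the Madison flight and the episode is recalled.
episode = memory.recall("user-1", "flight_booking", "Madison")
reply = f"Your flight to {episode.details['destination']} is on {episode.details['flight_date']}."
```

Because the episode keeps its own timestamp and metadata, the bot can pick the right event for the right user instead of searching through loose facts.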

Neural Memory Networks

A neural memory network is an advanced architecture that combines neural networks with an external memory matrix to help AI models store and retrieve information in a more structured way. Examples include Memory-Augmented Neural Networks (MANNs) and Neural Turing Machines.

So, how does a neural memory network work? Imagine it as an intelligent assistant with a notebook in which it can write (“store”) key details and read from (“retrieve”) when needed.

- A neural controller acts as the assistant’s brain, which decides what should be written down in the notebook (“matrix”) and when to look things up. In AI, the neural controller is often made of models like Transformers or Long Short-Term Memory networks.

- An external memory matrix acts as the assistant’s notebook. It’s actually a large table with many “slots,” each of which contains a piece of key information and is well-organized for easy retrieval.

So, when the AI model learns important information (e.g., a key fact or an image detail), the neural controller will tell it to store that information in the external memory matrix. If the AI needs a specific piece of information, the neural controller will retrieve it.
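The notebook analogy can be illustrated with a tiny memory matrix that is read by similarity. This is a sketch, not a trained model: there is no learned controller here, the write slot is chosen by hand, and `MemoryMatrix`, `softmax`, and the `sharpness` parameter are illustrative assumptions. It only shows the content-based read idea, where a key is compared with every slot and the closest slots dominate the result.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def softmax(scores, sharpness=10.0):
    # Higher sharpness makes the read focus more strongly on the best-matching slot.
    exps = [math.exp(sharpness * s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class MemoryMatrix:
    def __init__(self, slots, width):
        self.rows = [[0.0] * width for _ in range(slots)]  # the "notebook"

    def write(self, slot, vector):
        # A real controller learns where and what to write; here the slot is given.
        self.rows[slot] = list(vector)

    def read(self, key):
        # Weight each slot by its similarity to the key, then blend the slots.
        weights = softmax([cosine(key, row) for row in self.rows])
        width = len(self.rows[0])
        return [sum(w * row[i] for w, row in zip(weights, self.rows)) for i in range(width)]


memory = MemoryMatrix(slots=3, width=2)
memory.write(0, [1.0, 0.0])
memory.write(1, [0.0, 1.0])
recalled = memory.read([0.9, 0.1])  # the key resembles slot 0
```

The read returns a blend of all slots, but because the key is much closer to slot 0, the result is almost exactly what was written there.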

Technologies for Building Chatbots with Memory

Typical technologies used to build an AI chatbot with long-term memory cover tools for front-end and back-end development, like Python and JavaScript, along with JavaScript frameworks (e.g., React).

Further, AI chatbot developers also leverage LangChain, an open-source framework with reusable components for building and connecting the different parts of a chatbot system. LangChain offers a variety of ready-made components, like chat models, retrievers, document loaders, embedding models, vector stores, and other toolkits (e.g., for code interpretation or web browsing).

These components also allow AI chatbots to embed external knowledge sources or queries, retrieve the most relevant info, and generate contextually aware responses. Together, these steps (embedding, retrieval, and generation) make up RAG (Retrieval-Augmented Generation), which is essential to help your chatbots store, recall, and use the necessary information when needed.

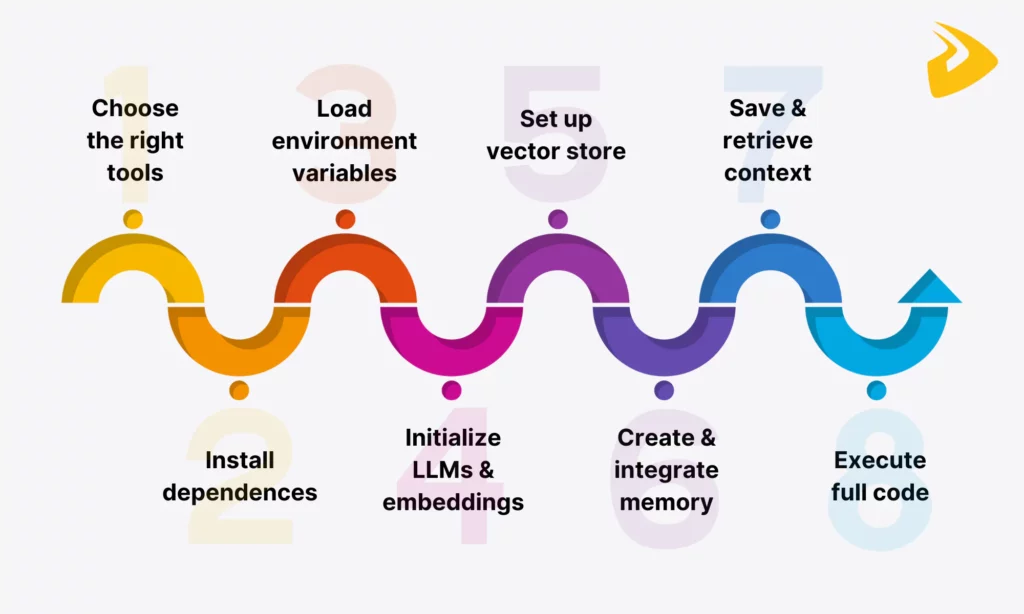

Building a Chatbot with Long-Term Memory

Once you understand which technologies are necessary to build an AI chatbot with long-term memory, it’s time to enter the real game. In this section, we’ll walk you through building such a conversational bot.

Step 1: Choosing the right tools and frameworks

Before writing any code, you have to choose the right tech stack to handle both language understanding and memory management. Here are some suggestions for AI chatbot development:

- LangChain: As we mentioned, LangChain is an open-source, yet powerful framework that offers reusable components to build LLM applications. As LangChain

- Milvus: This is a popular open-source vector database that saves conversation history as searchable vectors and enables faster semantic search. You can also choose Milvus Lite, a lightweight version that can run locally on your devices. Milvus Lite reuses the core elements of Milvus for vector indexing and query parsing, while eliminating components for high scalability in distributed systems. This capability makes it more compact and effective, especially when running in environments with limited computing resources.

- OpenAI’s GPT Models: OpenAI’s LLM versions, like GPT-4o, work with vector databases and retrievers to generate contextually relevant responses to a user’s query.

- Python: LangChain is written in Python (and JavaScript), so this programming language is a great option if you work with LangChain. Its packages and libraries support AI chatbot development. Typical examples:

  - Dotenv, a Python package, is used to load environment variables from a `.env` file and manage API keys or configuration values.

  - Pymilvus, a Python SDK, is used to interact with Milvus.

  - OpenAI API SDK is a Python client library used to connect to OpenAI’s API for sending prompts and receiving responses from LLMs.

Step 2: Install and set up dependencies

First, make sure Python 3.9+ is installed. Then, create a virtual environment and install the required packages:

```bash
# Create and activate a virtual environment
python3 -m venv chatbot_env
source chatbot_env/bin/activate      # macOS/Linux
# chatbot_env\Scripts\activate       # Windows (run this instead of the line above)

# Install dependencies (langchain itself provides langchain.memory)
pip install langchain langchain-openai langchain-community python-dotenv pymilvus
```

Also, ensure Milvus is running before you start coding. If you haven’t installed Milvus, the easiest way is to run it in Docker.

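```bash
# Pull and run Milvus in standalone mode
docker run -p 19530:19530 milvusdb/milvus:latest
```

Depending on the Milvus release, the standalone container may need extra startup options. If this command does not bring up a working server, follow the official Milvus installation guide for your version.

Step 3: Load environment variables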

We’ll keep API keys safe by storing them in a .env file:

```
# .env file
OPENAI_API_KEY=your_openai_api_key
MILVUS_HOST=localhost
MILVUS_PORT=19530
```

Then, load them in Python using dotenv:

```python
from dotenv import load_dotenv
import os

# Read the key/value pairs from .env into the process environment
load_dotenv()

OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
MILVUS_HOST = os.getenv("MILVUS_HOST")
MILVUS_PORT = os.getenv("MILVUS_PORT")
```

Step 4: Initialize the LLM and embeddings

We’ll use OpenAI’s GPT model and the OpenAIEmbeddings class from LangChain to convert text into vectors for Milvus.

```python
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Chat model that generates the bot's replies
llm = ChatOpenAI(
    model="gpt-3.5-turbo",
    api_key=OPENAI_API_KEY,
    temperature=0.7
)

# Embedding model that turns text into vectors for Milvus
embeddings = OpenAIEmbeddings(
    model="text-embedding-ada-002",
    api_key=OPENAI_API_KEY
)
```

Step 5: Set up the vector store (Milvus)

Milvus will store embeddings for persistent memory. We’ll connect it to LangChain:

```python
from langchain_community.vectorstores import Milvus

vectorstore = Milvus(
    embedding_function=embeddings,
    collection_name="chatbot_memory",
    connection_args={"host": MILVUS_HOST, "port": MILVUS_PORT}
)
```

Step 6: Create and integrate memory into the agent

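For long-term memory, we’ll use LangChain’s VectorStoreRetrieverMemory to help the chatbot store and recall context. Note that newer LangChain releases mark this class as deprecated, so you may need to pin a version that still provides `langchain.memory`.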

```python
from langchain.memory import VectorStoreRetrieverMemory

# Return the 3 most similar stored exchanges for each query
retriever = vectorstore.as_retriever(search_kwargs={"k": 3})
memory = VectorStoreRetrieverMemory(retriever=retriever)
```

Step 7: Save and retrieve context during conversations

We’ll use the memory object’s `load_memory_variables` and `save_context` methods to retrieve and save chat history on every turn.

```python
# user_input holds the user's latest message (it is read in the chat loop in Step 8)

# Retrieve relevant past context
context_text = memory.load_memory_variables({"input": user_input})["history"]

# Generate the prompt with context
full_prompt = f"Context: {context_text}\nUser: {user_input}\nBot:"
bot_response = llm.invoke(full_prompt).content

# Save the new conversation into memory
memory.save_context({"input": user_input}, {"output": bot_response})
```

Step 8: Full code walkthrough and execution

Here’s the complete example in one file:

```python
from dotenv import load_dotenv
import os
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_community.vectorstores import Milvus
from langchain.memory import VectorStoreRetrieverMemory

# Step 1: Load environment variables
load_dotenv()
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
MILVUS_HOST = os.getenv("MILVUS_HOST")
MILVUS_PORT = os.getenv("MILVUS_PORT")

# Step 2: Initialize LLM and embeddings
llm = ChatOpenAI(
    model="gpt-3.5-turbo",
    api_key=OPENAI_API_KEY,
    temperature=0.7
)
embeddings = OpenAIEmbeddings(
    model="text-embedding-ada-002",
    api_key=OPENAI_API_KEY
)

# Step 3: Connect to Milvus vector store
vectorstore = Milvus(
    embedding_function=embeddings,
    collection_name="chatbot_memory",
    connection_args={"host": MILVUS_HOST, "port": MILVUS_PORT}
)

# Step 4: Set up memory
retriever = vectorstore.as_retriever(search_kwargs={"k": 3})
memory = VectorStoreRetrieverMemory(retriever=retriever)

# Step 5: Chat loop
print("Chatbot with Long-Term Memory (type 'exit' to quit)")
while True:
    user_input = input("You: ")
    if user_input.lower() == "exit":
        break

    # Retrieve relevant past context
    context_text = memory.load_memory_variables({"input": user_input})["history"]

    # Generate response
    full_prompt = f"Context: {context_text}\nUser: {user_input}\nBot:"
    bot_response = llm.invoke(full_prompt).content
    print(f"Bot: {bot_response}")

    # Save new exchange into memory
    memory.save_context({"input": user_input}, {"output": bot_response})
```

Challenges in Implementing Chatbot Memory

AI chatbots with persistent memory offer personalized experiences and enable users to resume interrupted tasks through multi-turn conversations. However, they still present several challenges you should consider before building memory-enabled AI chatbots:

- Data Privacy and Security: Long-term memory means storing user interactions or facts for a long time. The stored data may include personal information, confidential business data, or other sensitive information. If it’s incorrectly stored, retrieved, or used, your business may face severe consequences, like data leaks, unauthorized access, and compliance violations.

  - Solutions: anonymize sensitive data, encrypt stored data, comply with data protection regulations, and limit retention time.

- Scalability: As your chatbot interacts with more users, the volume of stored data grows accordingly. This can degrade search performance in vector databases like Milvus if indexing, sharding, and storage are poorly optimized. As a result, the chatbot may respond to queries more slowly and even hit database bottlenecks.

  - Solutions: use distributed vector stores or databases, optimize indexing and storage, and summarize or prune older data.

- Cost and Complexity: An AI chatbot with long-term memory can be costly because it relies on several paid services, such as an LLM API, cloud storage, or a vector database. Infrastructure cost and system complexity both grow as the chatbot handles an increasing volume of user interactions.

  - Solutions: optimize embedding sizes, cache outputs, use batch processing, and monitor usage regularly.

- Incorrect Stored Info: If the stored information is incorrect, your AI chatbot can generate incorrect or misleading responses. These responses may contain outdated information or hallucinated facts.

  - Solutions: verify retrieved information before use, set a confidence threshold, and include a feedback or review mechanism to eliminate incorrect data.
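A few of the solutions above are easy to picture in code. The sketch below covers three of them: masking sensitive data before storage, pruning old records, and applying a confidence threshold to retrieved items. All helper names, the e-mail pattern, the dates, and the similarity scores are illustrative assumptions; real deployments need far more thorough privacy handling than a single regular expression.

```python
import re
from datetime import datetime, timedelta

# Deliberately simple pattern; it will not catch every valid e-mail address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text):
    # Privacy: mask e-mail addresses before the text is stored.
    return EMAIL.sub("[email]", text)


def prune(records, now, max_age_days):
    # Retention: drop records older than the retention window.
    cutoff = now - timedelta(days=max_age_days)
    return [r for r in records if r["stored_at"] >= cutoff]


def confident_only(hits, threshold):
    # Accuracy: ignore retrieved items whose similarity score is below the threshold.
    return [text for text, score in hits if score >= threshold]


now = datetime(2025, 1, 31)  # fixed "current" time so the example is repeatable
records = [
    {"text": redact("Contact me at alex@example.com"), "stored_at": datetime(2025, 1, 30)},
    {"text": "Old preference", "stored_at": datetime(2024, 1, 1)},
]
fresh = prune(records, now, max_age_days=90)

hits = [("User prefers morning flights", 0.82), ("Unrelated note", 0.31)]
trusted = confident_only(hits, threshold=0.5)
```

Here the stored text no longer contains the address, the year-old record is dropped, and only the high-scoring hit would be passed on to the LLM prompt.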

How Designveloper Helps Build a Custom AI Chatbot With Memory

Is your in-house development team struggling to build a conversational AI bot with persistent memory? Do you want to find a trusted, experienced partner to handle AI chatbot development effectively? If so, Designveloper is a perfect partner for your project.

Our excellent team of developers has deep technical skills in Python and JavaScript, along with JavaScript frameworks (e.g., React), to develop tailored, scalable chatbots. These conversational solutions are also integrated with crucial techniques, like RAG, Embedding, and Function Calling, to interact seamlessly with external tools and retrieve the most relevant information for contextually aware responses.

We also master emerging tools (e.g., Langflow, AutoGen, and CrewAI) to incorporate the right LLMs and deploy secure architectures to turn AI ideas into working solutions. Further, we leverage LangChain and popular vector databases (e.g., Milvus or Pinecone) to create and connect different components of an AI chatbot, allowing it to effectively index and retrieve data necessary for a user’s query.

Our mastery of these cutting-edge technologies has contributed to our success in various AI chatbot projects. For example, we have developed customer service bots that automate support ticket triage by integrating LangChain and OpenAI. We also build Rasa-powered chatbots that offer complete data privacy to our clients and integrate AI features into enterprise apps through Microsoft’s Semantic Kernel. Our solutions help clients attract more potential customers, increase customer satisfaction, and receive positive feedback.

Don’t hesitate to share your challenges with us! Let us help you bring your AI idea to life! Contact our sales reps now for a detailed consultation.