Figure 4: Results when using ellmer to query a ragnar store in the console.

The my_chat$chat() runs the chat object’s chat method and returns results to your console. If you want a web chatbot interface instead, you can run ellmer‘s live_browser() function on your chat object, which can be handy if you want to ask multiple questions: live_browser(my_chat).

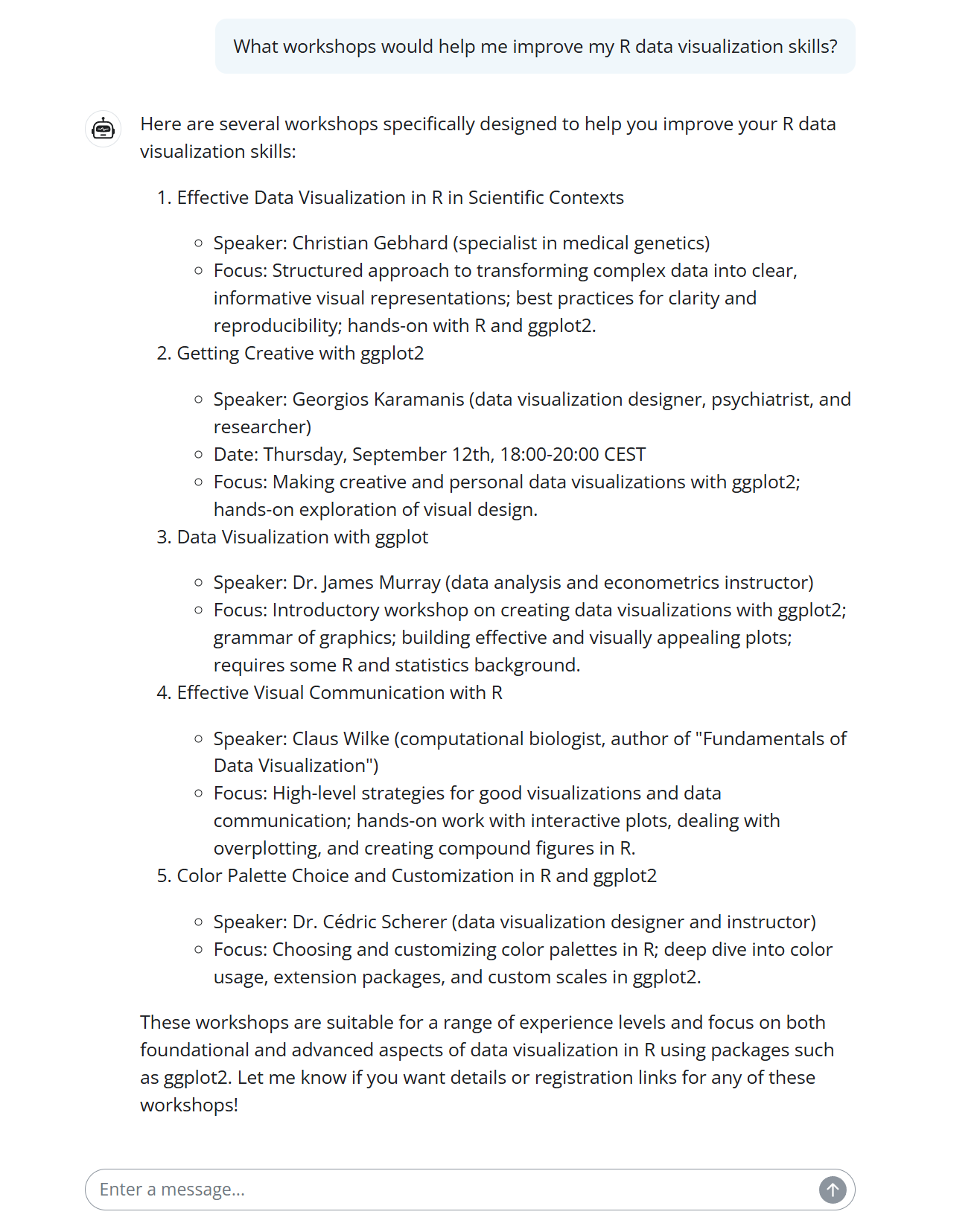

Figure 5: Results in ellmer’s built-in simple web chatbot interface.

Basic RAG worked pretty well when I asked about topics, but not for questions involving time. Asking about workshops “next month”–even when I told the LLM the current date–didn’t return the correct workshops.

That’s because this basic RAG is just looking for text that’s most similar to a question. If you ask “What R data visualization events are happening next month?”, you might end up with a workshop in three months. Basic semantic search often misses required elements, which is why we have metadata filtering.

Metadata filtering “knows” what is essential to a query–at least if you’ve set it up that way. This type of filtering lets you specify that chunks must match certain requirements, such as a date range, and then performs semantic search only on those chunks. The items that don’t match your must-haves won’t be included.

To turn basic ragnar RAG code into a RAG app with metadata filtering, you need to add metadata as separate columns in your ragnar data store and make sure an LLM knows how and when to use that information.

For this example, we’ll need to do the following:

- Get the date of each workshop and add it as a column to the original text chunks.

- Create a data store that includes a date column.

- Create a custom

ragnarretrieval tool that tells the LLM how to filter for dates if the user’s query includes a time component.

Let’s get to it!

Step 1: Add the new metadata

If you’re lucky, your data already has the metadata you want in a structured format. Alas, no such luck here, since the Workshops for Ukraine listings are HTML text. How can we get the date of each future workshop?

It’s possible to do some metadata parsing with regular expressions. But if you’re interested in using generative AI with R, it’s worth knowing how to ask LLMs to extract structured data. Let’s take a quick detour for that.

We can request structured data with ellmer‘s parallel_chat_structured() in three steps:

- Define the structure we want.

- Create prompts.

- Send those prompts to an LLM.

We can extract the workshop title with a regex—an easy task since all the titles start with ### and end with a line break:

ukraine_chunks <- ukraine_chunks |>

mutate(title = str_extract(text, "^### (.+)\n", 1))

Define the desired structure

The first thing we’ll do is define the metadata structure we want an LLM to return for each workshop item. Most important is the date, which will be flagged as not required since past workshops didn’t include them. ragnar creator Tomasz Kalinowski suggests we also include the speaker and speaker affiliation, which seems useful. We can save the resulting metadata structure as an ellmer “TypeObject” template:

type_workshop_metadata <- type_object(

date = type_string(

paste(

"Date in yyyy-mm-dd format if it's an upcoming workshop, otherwise an empty string."

)

),

speaker_name = type_string(),

speaker_affiliations = type_string(

"comma seperated listing of current and former affiliations listed in reverse chronological order"

)

)

Create prompts to request that structured data

The code below uses ellmer‘s interpolate() function to create a vector of prompts using that template, one for each text chunk:

prompts <- interpolate(

"Extract the data for the workshops mentioned in the text below.

Include the Date ONLY if it is a future workshop with a specific date (today is {{Sys.Date()}}). The Date must be in yyyy-mm-dd format.

If the year is not included in the date, start by assuming the workshop is in the next 12 months and set the year accordingly.

Next, find the day of week mentioned in the text and make sure the day-date combination exists! For example, if a workshop says 'Thursday, August 30' and you set the date to 2025-08-30, check if 2025-08-30 is on Thursday. If it isn't, set the date to null.

{{ ukraine_chunks$text }}

"

)

Send all the prompts to an LLM

This next bit of code creates a chat object and then uses parallel_chat_structured() to run all the prompts. The chat and prompts vector are required arguments. In this case, I also dialed back the default numbers of active requests and requests per minute with the max_active and rpm arguments so I didn’t hit my API limits (which often happens on my OpenAI account at the defaults):

chat <- ellmer::chat_openai(model = "gpt-4.1")

extracted <- parallel_chat_structured(

chat = chat,

prompts = prompts,

max_active = 4,

rpm = 100,

type = type_workshop_metadata

)

Finally, we add the extracted results to the ukraine_chunks data frame and save those results. That way, we won’t need to re-run all the code later if we need this data again:

ukraine_chunks <- ukraine_chunks |>

mutate(!!!extracted,

date = as.Date(date))

rio::export(ukraine_chunks, "ukraine_workshop_data_results.parquet")

If you’re unfamiliar with the splice operator (!!! in the above code), it’s unpacking individual columns in the extracted data frame and adding them as new columns to ukraine_chunks via the mutate() function.

The ukraine_chunks data frame now has the columns start, end, context, text, title, date, speaker_name, and speaker_affiliations.

I still ended up with a few old dates in my data. Since this tutorial’s main focus is RAG and not optimizing data extraction, I’ll call this good enough. As long as the LLM figured out that a workshop on “Thursday, September 12” wasn’t this year, we can delete past dates the old-fashioned way:

ukraine_chunks <- ukraine_chunks |>

mutate(date = if_else(date >= Sys.Date(), date, NA))

We’ve got the metadata we need, structured how we want it. The next step is to set up the data store.

Step 2: Set up the data store with metadata columns

We want the ragnar data store to have columns for title, date, speaker_name, and speaker_affiliations, in addition to the defaults.

To add extra columns to a version data store, you first create an empty data frame with the extra columns you want, and then use that data frame as an argument when creating the store. This process is simpler than it sounds, as you can see below:

my_extra_columns <- data.frame(

title = character(),

date = as.Date(character()),

speaker_name = character(),

speaker_affiliations = character()

)

store_file_location <- "ukraine_workshop_w_metadata.duckdb"

store <- ragnar_store_create(

store_file_location,

embed = \(x) ragnar::embed_openai(x, model = "text-embedding-3-small"),

# overwrite = TRUE,

extra_cols = my_extra_columns

)

Inserting text chunks from the metadata-augmented data frame into a ragnar data store is the same as before, using ragnar_store_insert() and ragnar_store_build_index():

ragnar_store_insert(store, ukraine_chunks)

ragnar_store_build_index(store)

If you’re trying to update existing items in a store instead of inserting new ones, you can use ragnar_store_update(). That should check the hash to see if the entry exists and whether it has been changed.

Step 3: Create a custom ragnar retrieval tool

As far as I know, you need to register a custom tool with ellmer when doing metadata filtering instead of using ragnar‘s simple ragnar_register_tool_retrieve(). You can do this by:

- Creating an R function

- Turning that function into a tool definition

- Registering the tool with a chat object’s

register_tool()method

First, you will write a conventional R function. The function below adds filtering if a start and/or end date are not NULL, and then performs chunk retrieval. It requires a store to be in your global environment—don’t use store as an argument in this function; it won’t work.

This function first sets up a filter expression, depending on whether dates are specified, and then adds the filter expression as an argument to a ragnar retrieval function. Adding filtering to ragnar_retrieve() functions is a new feature as of this writing in July 2025.

Below is the function largely suggested by Tomasz Kalinowski. Here we’re using ragnar_retrieve() to get both conventional and semantic search, instead of just VSS searching. I added “data-related” as the default query so the function can also handle time-related questions without a topic:

retrieve_workshops_filtered <- function(

query = "data-related",

start_date = NULL,

end_date = NULL,

top_k = 8

) {

# Build filter expression based on provided dates

if (!is.null(start_date) && !is.null(end_date)) {

# Both dates provided

start_date <- as.Date(start_date)

end_date <- as.Date(end_date)

filter_expr <- rlang::expr(between(

date,

!!as.Date(start_date),

!!as.Date(end_date)

))

} else if (!is.null(start_date)) {

# Only start date

filter_expr <- rlang::expr(date >= !!as.Date(start_date))

} else if (!is.null(end_date)) {

# Only end date

filter_expr <- rlang::expr(date <= !!as.Date(end_date))

} else {

# no filter

filter_expr <- NULL

}

# Perform retrieval

ragnar_retrieve(

store,

query,

top_k = top_k,

filter = !!filter_expr

) |>

select(title, date, speaker_name, speaker_affiliations, text)

}

Next, create a tool for ellmer based on that function using tool(), which needs the function name and a tool definition as arguments. The definition is important because the LLM uses it to decide whether or not to use the tool to answer a question:

workshop_retrieval_tool <- tool(

retrieve_workshops_filtered,

"Retrieve workshop information based on content query and optional date filtering. Only returns workshops that match both the content query and date constraints.",

query = type_string(

"The search query describing what kind of workshop content you're looking for (e.g., 'data visualization', 'data wrangling')"

),

start_date = type_string(

"Optional start date in YYYY-MM-DD format. Only workshops on or after this date will be returned.",

required = FALSE

),

end_date = type_string(

"Optional end date in YYYY-MM-DD format. Only workshops on or before this date will be returned.",

required = FALSE

),

top_k = type_integer(

"Number of workshops to retrieve (default: 6)",

required = FALSE

)

)

Now create an ellmer chat with a system prompt to help the LLM know when to use the tool. Then register the tool and try it out! My example is below.

my_system_prompt <- paste0(

"You are a helpful assistant who only answers questions about Workshops for Ukraine from provided context. Do not also use your own existing knowledge.",

"Use the retrieve_workshops_filtered tool to search for workshops and workshop information. ",

"When users mention time periods like 'next month', 'this month', 'upcoming', etc., ",

"convert these to specific YYYY-MM-DD date ranges and pass them to the tool. ",

"Past workshops do not have Date entries so would be NULL or NA",

"Today's date is ",

Sys.Date(),

". ",

"If no workshops match the criteria, let the user know."

)

my_chat <- chat_openai(

system_prompt = my_system_prompt,

model = "gpt-4.1",

params = params(temperature = 0.3)

)

# Register the tool

my_chat$register_tool(workshop_retrieval_tool)

# Test it out

my_chat$chat("What R-related workshops are happening next month?")

If there are indeed any R-related workshops next month, you should get the correct answer, thanks to your new advanced RAG app built entirely in R. You can also create a local chatbot interface with live_browser(my_chat).

And, once again, it’s good practice to close your connection when you’re finished with DBI::dbDisconnect(store@con).

That’s it for this demo, but there’s a lot more you can do with R and RAG. Do you want a better interface, or one you can share? This sample R Shiny web app, written primarily by Claude Opus, might give you some ideas.