Kubernetes isn’t complete without smart external traffic management. This blog dives into Ingress Controller architecture, setup best practices, security tips, scaling strategies, and real-time traffic handling with Ecosmob’s SIP-native Ingress solution!

When you think of Kubernetes architecture, you picture fast deployments, resilient services, and elastic scaling.

But none of that matters if traffic can’t enter your cluster reliably, securely, and efficiently.

This is where a solid Kubernetes Ingress Controller architecture becomes critical.

Without a strong ingress layer, your Kubernetes environment faces hidden risks like:

- Load balancer sprawl

- Downtime during traffic spikes

- Weak security at the public edge

- Poor scaling under real-world workloads

In this blog, we’ll break down what Kubernetes Ingress Controller architecture is, how it works, and how to set it up for real-world scalability. We’ll also explain why modern clusters can’t afford to ignore it anymore.


What Is Kubernetes Ingress Controller Architecture?

At its core, Kubernetes Ingress Controller architecture defines how external requests travel into your cluster and reach backend services.

The Ingress Controller acts as a smart reverse proxy: it interprets routing rules and forwards each request to the right backend.

The basic traffic flow looks like this:

- A client makes a request to your app’s DNS.

- The request hits a cloud LoadBalancer (optional, but common for production).

- The LoadBalancer sends the traffic to an Ingress Controller pod inside your cluster.

- The Ingress Controller reads Ingress Resources to find matching routing rules.

- It forwards the request to the correct Service → Pods inside your Kubernetes environment.

Instead of exposing every microservice separately, Ingress architecture centralizes external access and enforces security policies at the edge.
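To make the LoadBalancer-to-controller hop in the flow above concrete, here is a minimal sketch of a Service of type LoadBalancer sitting in front of the controller pods. The ingress-nginx Helm chart used later in this post creates a comparable Service for you; the name, namespace, and labels below are assumptions modeled on that chart's conventions, not copied from it.

```yaml
# Hypothetical sketch: a cloud LoadBalancer Service fronting the Ingress Controller pods.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller   # assumed name
  namespace: ingress-nginx         # assumed namespace
spec:
  type: LoadBalancer               # asks the cloud provider for an external load balancer
  selector:
    # Must match the labels on the controller pods (assumed values).
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/component: controller
  ports:
    - name: http
      port: 80          # port exposed on the load balancer
      targetPort: 80    # port the controller container listens on
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
```

Only this one Service needs a public address; every application behind the controller can stay an internal ClusterIP Service.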

Key Components of Kubernetes Ingress Controller Architecture

An effective ingress setup includes several critical components:

- Ingress Controller Pod: The core software (like NGINX or Traefik) that processes external requests and enforces routing policies.

- Ingress Resources: YAML manifests defining how traffic should be routed based on paths, hosts, and other conditions.

- ClusterIP Services: Kubernetes Services that expose backend application Pods internally.

- LoadBalancer: Provides a public-facing static IP address and forwards traffic into the cluster (used mostly in cloud environments).

- TLS Termination Support: Ends HTTPS traffic securely at the ingress layer to reduce backend complexity.

- Authentication and Authorization Modules: Enforce security policies like WAF rules, IP whitelisting, or OAuth-based authentication.

- Observability Layers: Metrics, logs, and tracing to monitor ingress health and performance.

Each of these components plays a crucial role in keeping external access reliable, secure, and scalable without burdening backend services.
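The ClusterIP Service is the piece the Ingress rules actually point at. This sketch defines an `api-service` (the name used by the routing example in this post); the `app: api` selector and container port 8080 are hypothetical and must match your own Deployment.

```yaml
# Hypothetical backend Service, reachable only from inside the cluster.
apiVersion: v1
kind: Service
metadata:
  name: api-service
spec:
  type: ClusterIP        # the default type; shown explicitly for clarity
  selector:
    app: api             # hypothetical label on the API Deployment's pods
  ports:
    - port: 80           # port the Ingress backend refers to
      targetPort: 8080   # hypothetical port the API container listens on
      protocol: TCP
```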

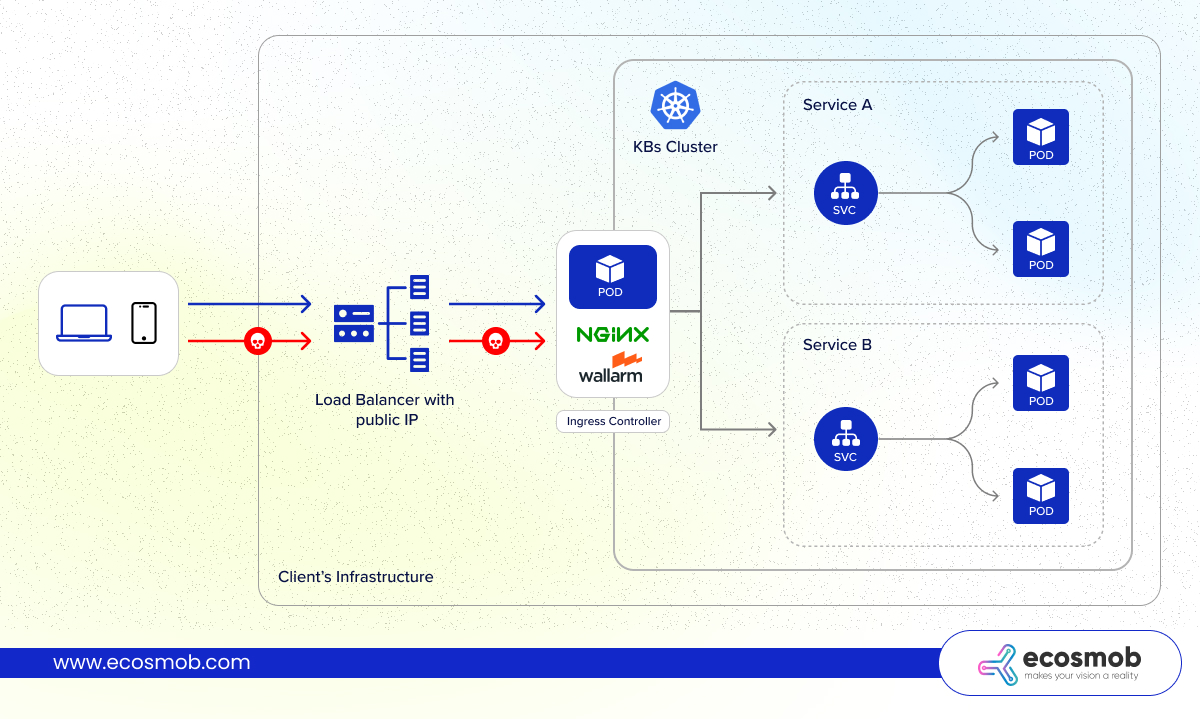

Understanding a Typical Kubernetes Ingress Architecture

Let’s walk through a typical NGINX Ingress architecture as it would appear in a Kubernetes architecture diagram.

Here’s how the traffic flows:

- Users access your application through their browser or mobile device.

- Requests first reach a Load Balancer (e.g., AWS ELB, Azure Load Balancer) with a public IP address.

- The Load Balancer forwards traffic into the cluster to one or more NGINX Ingress Controller Pods.

- The Ingress Controller reads routing rules defined in Ingress Resources.

- Based on the host, path, or headers, it routes traffic to the correct Service, which forwards it to the right Pod(s) running your applications.

This architecture ensures that no matter how many services you add inside the cluster, external access remains clean, controlled, and scalable.

Without an ingress layer (or a separate LoadBalancer or NodePort Service for each app), your services stay reachable only from inside the cluster.
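Host-based routing, mentioned in the flow above, can be sketched as a single Ingress that serves two hostnames through the same controller. The hostnames, Service names, and the `nginx` IngressClass are illustrative assumptions.

```yaml
# Hypothetical Ingress: route by Host header to two different Services.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: multi-host-ingress
spec:
  ingressClassName: nginx          # assumes the controller registered this IngressClass
  rules:
    - host: shop.example.com       # requests with Host: shop.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: shop-service     # hypothetical Service
                port:
                  number: 80
    - host: blog.example.com       # requests with Host: blog.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: blog-service     # hypothetical Service
                port:
                  number: 80
```

Adding another application then means adding a rule, not provisioning another load balancer.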

How to Set Up an Ingress Controller in Kubernetes

Here’s how to set up an NGINX Ingress Controller (one of the most popular and reliable options).

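1. Add the NGINX Ingress Helm repository

```shell
helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
helm repo update
```

2. Install the Ingress Controller

```shell
helm install ingress-nginx ingress-nginx/ingress-nginx \
  --namespace ingress-nginx \
  --create-namespace
```

3. Verify pod deployment

```shell
# The controller pod should reach STATUS "Running"
kubectl get pods -n ingress-nginx
```

4. Retrieve the external IP

```shell
# Look at the EXTERNAL-IP column of the ingress-nginx-controller Service
# (it may show <pending> while the cloud load balancer is provisioned)
kubectl get svc -n ingress-nginx
```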
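Instead of passing everything as flags, the same installation can be driven from a values file. This sketch assumes the ingress-nginx chart's `controller.replicaCount`, `controller.service.type`, and `controller.metrics.enabled` values; run `helm show values ingress-nginx/ingress-nginx` to confirm what your chart version supports. The file name `values.yaml` is arbitrary.

```yaml
# values.yaml (illustrative), applied with:
#   helm upgrade --install ingress-nginx ingress-nginx/ingress-nginx \
#     --namespace ingress-nginx --create-namespace -f values.yaml
controller:
  replicaCount: 2          # run more than one controller pod for redundancy
  service:
    type: LoadBalancer     # expose the controller through a cloud load balancer
  metrics:
    enabled: true          # expose Prometheus metrics for the observability layer
```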

Next, here’s a basic Ingress routing manifest:

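```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress
  # No rewrite-target annotation: with ingress-nginx, "rewrite-target: /" would
  # rewrite every matched path (e.g. /api/users) to just "/".
spec:
  # Selects the controller; "nginx" is the IngressClass the Helm chart creates by default.
  ingressClassName: nginx
  rules:
    - host: myapp.example.com
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 80
          - path: /web
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 80   # assumed; the original example omitted the port
```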

This manifest sends myapp.example.com/api requests to api-service and /web requests to frontend-service, all through one Load Balancer and one Ingress Controller.
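If the API backend expects paths without the /api prefix (for example /users rather than /api/users), ingress-nginx documents a capture-group rewrite for that case. This is a sketch adapting that pattern to the example above; the Ingress name is hypothetical, and whether you need the rewrite at all depends on your backend.

```yaml
# Sketch: strip the /api prefix before forwarding (ingress-nginx capture-group rewrite).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-rewrite-ingress        # hypothetical name
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 is the second capture group in the path below: everything after "/api/"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
    - host: myapp.example.com
      http:
        paths:
          # /api -> /   and   /api/users -> /users
          - path: /api(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: api-service
                port:
                  number: 80
```

Once regex matching is enabled, ingress-nginx treats the paths for that host as regular expressions, so review the other rules on the same host before adopting this.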

Ingress Controller Configuration Best Practices

Fine-tuning your Ingress Controller configuration transforms a basic setup into a production-grade system.

Here’s how to do it:

- Enable TLS/SSL: Always enforce HTTPS traffic. Tools like cert-manager can automate Let’s Encrypt certificates for dynamic SSL provisioning.

- Tune Read/Write Timeouts: Prevent hanging or slow connections that could tie up backend resources.

- Set Request Body Size Limits: Block abusive uploads (e.g., with nginx.ingress.kubernetes.io/proxy-body-size).

- Use Rate Limits and Connection Limits: Cap how many requests and concurrent connections each client can open, blunting abusive clients and simple DoS attempts.

- Enable Keep-Alive Connections: Reduces the overhead on backend pods and accelerates client communication.

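- Use Backend Health Checks: Define readiness probes on your Pods so unhealthy or crashing Pods are removed from Service endpoints and the Ingress Controller stops routing traffic to them.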

- Activate Logging Properly: Detailed access and error logs give you the visibility needed for monitoring and debugging issues.

Neglecting proper configuration leaves the ingress vulnerable to overload, security risks, and inefficient scaling, making these optimizations non-negotiable in production.
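Several of these practices map to per-Ingress annotations in ingress-nginx. This sketch assumes cert-manager is installed with a ClusterIssuer named `letsencrypt-prod` (a hypothetical name); the timeout, body-size, and rate-limit values are placeholders to tune for your traffic, not recommendations.

```yaml
# Sketch: hardening the example Ingress with ingress-nginx annotations.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress
  annotations:
    # cert-manager issues a certificate for the tls entry below using this issuer.
    cert-manager.io/cluster-issuer: letsencrypt-prod        # hypothetical issuer name
    # Redirect plain HTTP to HTTPS.
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    # Timeouts (seconds) for reading from / sending to the backend.
    nginx.ingress.kubernetes.io/proxy-read-timeout: "60"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "60"
    # Reject request bodies larger than 10 MB.
    nginx.ingress.kubernetes.io/proxy-body-size: "10m"
    # Per-client-IP limits: requests per second and concurrent connections.
    nginx.ingress.kubernetes.io/limit-rps: "10"
    nginx.ingress.kubernetes.io/limit-connections: "20"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - myapp.example.com
      secretName: myapp-example-com-tls   # created and renewed by cert-manager
  rules:
    - host: myapp.example.com
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 80
```

Keep-alive and log-format settings are controller-wide rather than per-Ingress. In ingress-nginx they live in the controller ConfigMap (for example `keep-alive` and `upstream-keepalive-connections`), which the Helm chart manages through `controller.config`.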

Security Considerations for Kubernetes Ingress Controllers

Ingress Controllers are a prime target because they sit at the edge of your network.

To secure them properly, you can:

- TLS Everywhere: Terminate TLS at the ingress point and redirect all plain HTTP requests to HTTPS; for sensitive workloads, re-encrypt traffic between the ingress and your backends as well.

- Use Strong SSL/TLS Protocols: Only allow TLS 1.2+ and disable outdated protocols like SSLv3 or TLS 1.0.

- Implement WAF Rules: Deploy a Web Application Firewall to block SQL injections, XSS attacks, and common exploits.

- Enable Rate Limiting and DDoS Protection: Throttle abusive clients before they impact backend services.

- Use IP Whitelisting: Restrict admin panels or critical routes to trusted IPs or VPN ranges.

- Implement Mutual TLS (mTLS): For sensitive apps, require client-side certificates in addition to server certificates.

- Apply Strict RBAC Policies: Lock down who can edit Ingress Resources or modify routing configurations.

Failing to secure ingress properly can expose your entire cluster to data breaches, downtime, and regulatory compliance risks.
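IP whitelisting, mTLS, and the TLS protocol policy can be sketched with ingress-nginx annotations and controller settings. The CIDR ranges, the `admin.example.com` host, `admin-service`, and the `admin/client-ca` Secret (which must hold the CA certificate under the key `ca.crt`) are all hypothetical.

```yaml
# Sketch: an admin route restricted by source IP and protected with mutual TLS.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: admin-ingress
  namespace: admin
  annotations:
    # Only allow these client ranges (hypothetical office/VPN CIDRs).
    nginx.ingress.kubernetes.io/whitelist-source-range: "10.0.0.0/8,203.0.113.0/24"
    # mTLS: require a client certificate signed by the CA stored in this Secret.
    nginx.ingress.kubernetes.io/auth-tls-secret: "admin/client-ca"
    nginx.ingress.kubernetes.io/auth-tls-verify-client: "on"
    nginx.ingress.kubernetes.io/auth-tls-verify-depth: "1"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - admin.example.com
      secretName: admin-example-com-tls   # hypothetical server certificate Secret
  rules:
    - host: admin.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: admin-service      # hypothetical Service
                port:
                  number: 80
---
# Controller-wide TLS protocol policy. With Helm, set this under controller.config
# rather than editing the ConfigMap directly (name assumed from the release in this post).
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
  ssl-protocols: "TLSv1.2 TLSv1.3"
```

Recent ingress-nginx releases already default to TLS 1.2 and 1.3; setting `ssl-protocols` explicitly documents the policy and guards against drift.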

Building Scalability Into Your Ingress Controller Setup

A scalable ingress layer ensures your Kubernetes platform can grow without performance bottlenecks.

To achieve scalability:

- Horizontal Pod Autoscaling (HPA): Automatically increase or decrease the number of Ingress Controller pods based on CPU, memory, or custom metrics.

- Multi-AZ and Multi-Region Deployments: Distribute your ingress layer across availability zones or even multiple regions for resilience.

- LoadBalancer Integration: Use cloud-native LoadBalancers that support auto-scaling and multi-zone load balancing.

- Connection Draining for Graceful Shutdowns: Ensure existing connections complete before terminating pods during scaling events.

- Global Traffic Management: Combine Ingress Controllers with Global DNS and Traffic Management services for geo-distributed applications.

With these strategies, your ingress setup remains responsive under load, easily handling sudden traffic spikes, rolling deployments, and infrastructure changes.
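A minimal HorizontalPodAutoscaler for the controller might look like the sketch below. It assumes the Deployment name produced by the Helm release in this post (`ingress-nginx-controller`), that metrics-server is running, and that the controller pods declare CPU requests, because utilization is measured against requests. The replica bounds and the 70% target are placeholders; the chart can also generate an equivalent object through its `controller.autoscaling` values.

```yaml
# Sketch: scale the Ingress Controller Deployment on average CPU utilization.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ingress-nginx-controller   # assumed Deployment name
  minReplicas: 2                     # keep at least two pods for availability
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70     # add pods when average CPU exceeds 70% of requests
```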

Standard Ingress Controllers can’t handle SIP calls because SIP isn’t HTTP: it routes on SIP URIs and headers, commonly runs over UDP as well as TCP or TLS, and negotiates separate media streams (RTP) on dynamic ports.

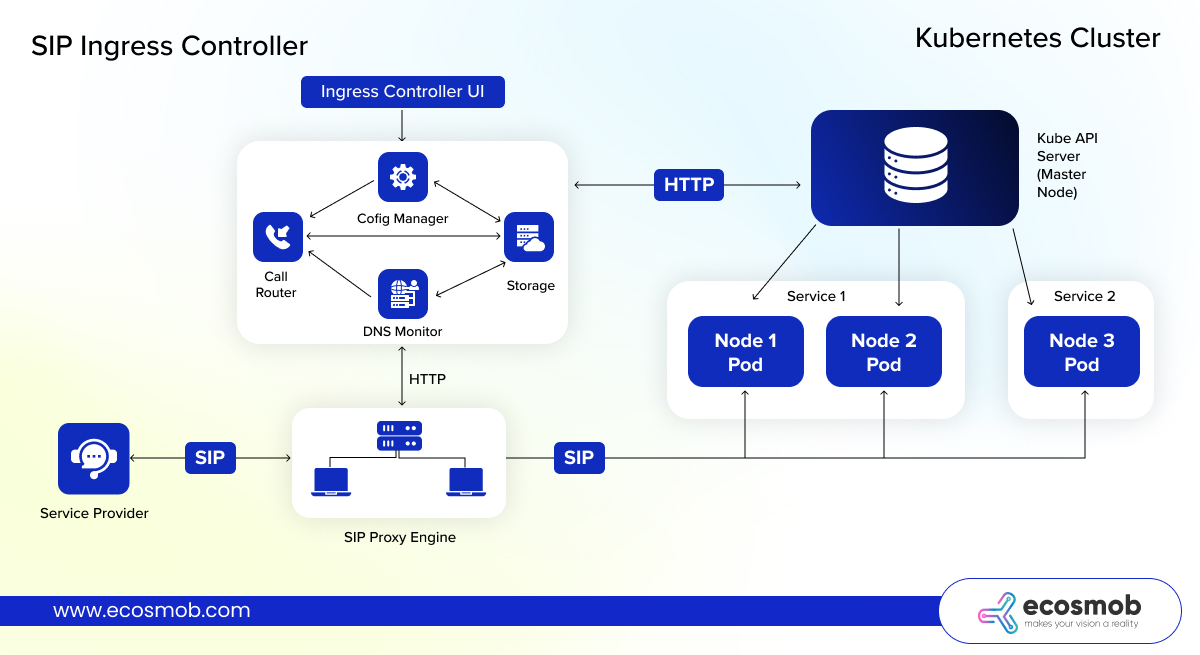

How Ecosmob’s SIP Ingress Controller Redefines Real-Time Traffic Ingress

Real-time communication traffic (like VoIP, SIP sessions, WebRTC streams) cannot be handled like simple HTTP web traffic. While standard Ingress Controllers excel at HTTP/S traffic, they fall short for SIP (Session Initiation Protocol) and real-time communication apps.

And that’s why we built one.

Ecosmob’s SIP Ingress Controller is the first Kubernetes-native solution designed for real-time VoIP workloads.

Here’s what makes it different:

- Real-Time SIP Pod Discovery: Dynamically finds and routes calls to healthy pods based on Kubernetes APIs.

- SIP Header-Based Routing: Routes based on codecs, DIDs, transport protocols (UDP, TCP, TLS) — not just paths or hosts.

- Built-In Session Persistence: Ensures calls stay stable during pod rescheduling or autoscaling.

- TLS and SRTP Support: Encrypts both signaling and media streams.

- Load Balancing and Failover: Smartly distributes traffic and reroutes calls instantly during failures.

For VoIP providers, UCaaS platforms, and WebRTC applications, Ecosmob’s SIP Ingress Controller is THE missing piece for cloud-native real-time services.


Your Kubernetes cluster is only as resilient, secure, and scalable as its ingress layer.

A poorly designed ingress means downtime, frustrated users, and spiraling cloud costs.

A smart ingress architecture means faster deployments, lower costs, stronger security, and real scale.

But you don’t have to do it all by yourself.

Building a VoIP, UCaaS, or real-time communication platform inside Kubernetes?

Need better traffic control, smarter routing, and true resilience?

Talk to our Kubernetes experts today!


FAQs

What’s the difference between Ingress and LoadBalancer services in Kubernetes?

A Service of type LoadBalancer provisions a separate external load balancer and public IP for a single Service, while an Ingress routes traffic for many Services through a single entry point using host and path rules, making it more scalable.

How do you install an NGINX Ingress Controller in Kubernetes?

You can install the NGINX Ingress Controller using Helm charts by adding the ingress-nginx repository, deploying it into a namespace, and configuring external access through a LoadBalancer.

How do Ingress Controllers scale in Kubernetes?

Ingress Controllers scale horizontally using Kubernetes Horizontal Pod Autoscaler (HPA), LoadBalancer integration across zones, and smart connection draining to handle rolling updates smoothly.

What’s different about SIP traffic compared to HTTP traffic in Kubernetes?

SIP traffic involves real-time session negotiation, dynamic port usage, and persistent connections, making it incompatible with standard HTTP-focused Ingress Controllers without customization.

Can multiple Ingress Controllers run in the same Kubernetes cluster?

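Yes. Each Ingress selects the controller that handles it through an IngressClass (spec.ingressClassName), so you can run several controllers side by side, for example one for standard HTTP(S) traffic and a SIP-aware controller for real-time traffic, and route each protocol separately.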
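As a sketch, here are two IngressClasses and an Ingress that picks one of them. The `nginx` class uses `k8s.io/ingress-nginx`, the controller value ingress-nginx registers by default; the `internal` class, its controller value, and the Ingress below are hypothetical (a second controller installation would have to be configured to claim that value).

```yaml
# Default class, handled by the public ingress-nginx installation.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
  annotations:
    # Ingresses that omit spec.ingressClassName fall back to this class.
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx
---
# Hypothetical second class for a separately installed internal controller.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: internal
spec:
  controller: example.com/internal-ingress   # hypothetical controller value
---
# Only the controller that claims the "internal" class processes this Ingress.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: reports-ingress
spec:
  ingressClassName: internal
  rules:
    - host: reports.internal.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: reports-service   # hypothetical Service
                port:
                  number: 80
```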