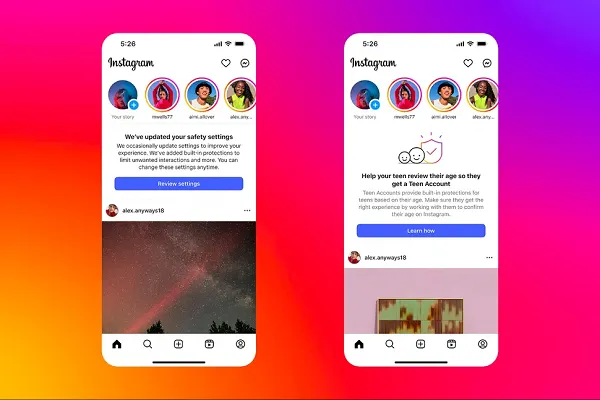

Instagram’s looking to advance its teen protection features, with improved AI detection of account holders’ ages, and an expansion of its teen accounts to users in Canada.

As reported by Android Central, Instagram’s updated age detection process will automatically limit interactions with certain accounts when it determines that a user is under 18, even if that user tries to lie about their age by listing an adult birth date.

It’s the latest deployment of Meta’s evolving age detection systems, which use various signals to estimate a user’s age, including who follows you, who you follow, and what content you interact with. Meta’s systems can also factor birthday wishes posted by other users into their assessment.

It’s not a perfect system, and Meta has acknowledged that the process will still make some errors (users will have the option to appeal and/or correct their age if they are incorrectly limited). But Meta says that its system is improving as it learns more about which platform usage signals are most likely to indicate a user’s age.

Meta will also now default Canadian teens under the age of 16 into its advanced security mode, which can only be deactivated by a parent, as it has done for U.S. teens since last September.

This is an important focus for the app, as more and more regions look to implement new restrictions on social media usage to protect young users.

Over the last year, several European nations, including France, Greece and Denmark, have put their support behind proposed laws to cut young teens off from social media apps entirely. Spain is considering a minimum access age of 16, while Australia and New Zealand are also moving to implement their own laws, and Norway is in the process of developing its own regulations.

It seems inevitable that some level of restriction is going to be imposed on teen social media use in many regions, but the challenge then is: how do you enforce it, and how can you legally hold platforms to account for meeting those requirements?

Each platform has its own system for detecting and protecting teens, and some, like these new measures from IG, are more advanced than others. But in a legal sense, enforcement requires clear standards and safety benchmarks that every such approach can be held to.

At present, there’s no universal standard for age detection, and while AI is showing some promise, various other methods are being tested, including selfie verification by third-party providers (which carries its own data exposure risks).

In Australia, for example, which is moving ahead with its own laws on teen social media access, regulators recently tested 60 different age verification approaches from a range of vendors, and found that while some options are mostly effective, there will be mistakes, especially for users within two years of the 16-year-old threshold.

So far, the Australian government hasn’t outlined specific age-checking requirements for social platforms, with the proposed laws only stating that platforms must take “all reasonable steps” to remove the accounts of users aged under 16. As it stands, there won’t be a legally enforceable accuracy standard unless the government can establish a universal checking methodology.

That’s why it’s testing so many options. But again, the challenge of enforcement remains a key impediment, and will likely weaken any legal recourse available to authorities wherever such laws are enacted.

That will remain the case unless the industry can agree on a standard approach, which is why Meta’s use of AI age detection could eventually establish a new industry benchmark.

Meta is refining its approach and driving better results, and expanding these systems to more regions is another step in the right direction.