An LLM-powered autonomous agent is an AI system that uses a large language model (LLM) as its core to solve tasks with minimal human intervention. In these agents, the LLM serves as the “brain” and is supported by modules for planning, memory, and tool use. The LLM reads an input (like a user question) and then plans a sequence of actions or sub-tasks to achieve a goal. Unlike a simple chatbot, the agent can call external tools (APIs, databases, calculators, etc.) and use stored memory to make decisions.

Many real-world tasks motivate the use of agents. Complex queries often require multiple steps and data sources. For example, a question about nutrition trends and obesity might require the agent to break down the question, search databases for statistics, and even generate charts. A plain LLM or fine-tuned model cannot do all that alone. An LLM agent can plan each step, fetch up-to-date data via tools, and combine the results into an answer. This planning-and-tool approach lets agents handle tasks too involved for a single LLM output.

Motivation for LLM-based Agents

Traditional LLMs are powerful at language, but some problems need more. Complex or dynamic tasks often go beyond what a static model can produce. By layering planning, memory, and tools on an LLM, agents can tackle multi-stage problems. For example, instead of having one model answer a broad query, an agent will decompose the query, query external sources, and iterate until it reaches a solution. This multi-step reasoning is the key motivation: it lets systems answer questions using current data and specialized capabilities that an LLM alone lacks.

Why Not Just Fine-tune?

One approach might be to fine-tune an LLM for each specific task or domain. However, fine-tuning has drawbacks. It requires training and storing separate models for every domain, which multiplies computational cost and storage. It can also make the model forget its original general knowledge. Even after fine-tuning, the model still can’t access real-time data or specialized tools (like calculators or up-to-date databases) without external help. In short, simply fine-tuning does not provide the real-time information or external capabilities needed for many tasks. Agents solve this by adapting to tasks via planning and tool use rather than by altering the model’s weights for every new job.

The Evolution of LLM-based Agents

Designing LLM agents has evolved rapidly. Early systems used a single prompt to get an answer. Then techniques like “chain-of-thought” prompting helped LLMs break tasks into logical steps within one output. Later came retrieval-augmented generation (RAG), where models fetch external data. Today’s autonomous agents go further: they repeatedly plan and act. Modern agents can generate a plan, execute actions (possibly with tools), observe results, and revise the plan as needed. They may even self-reflect, using their outputs to improve over iterations. In effect, agents today integrate multiple problem-solving steps and external resources, making them far more capable than a one-shot LLM prompt.

The Difference between an Autonomous Agent and an LLM

A large language model (LLM) by itself is essentially a text generator. It passively waits for a prompt and produces a response. It cannot act on its own or plan multiple steps. In contrast, an autonomous agent is a system that can carry out a task from start to finish by itself. It can perceive inputs, set goals, plan actions, use tools, and take actions in sequence. For example, IBM explains that agents use LLMs to understand inputs step-by-step and then decide when to call external tools. A standalone LLM, however, would only output the next word of text and have no mechanism to use tools or remember context across multiple steps. In short, an LLM is like a brilliant adviser that answers one question at a time, while an agent is a self-guided executor that can decompose goals and interact with the environment.

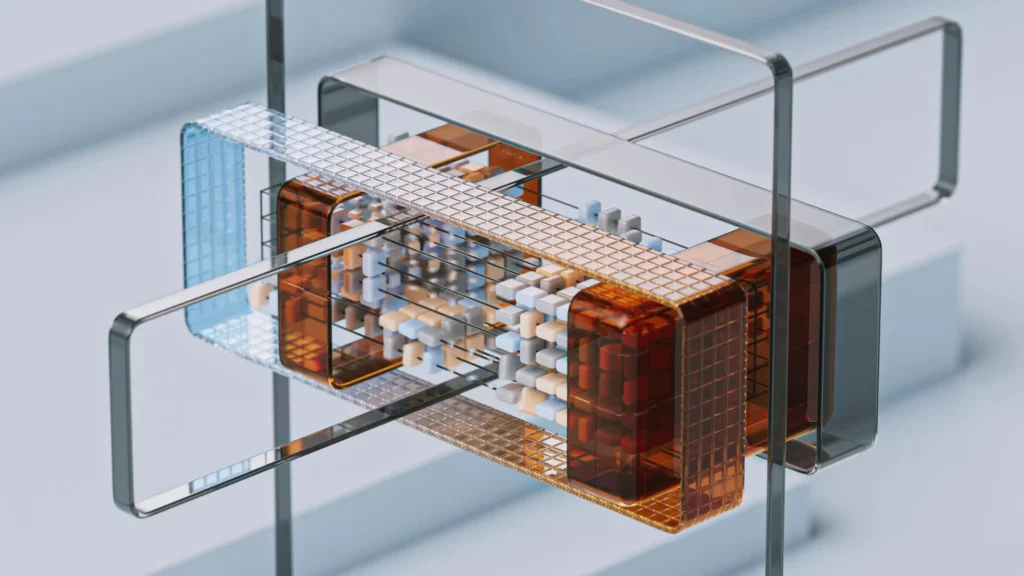

Architecture of LLM-powered Autonomous Agent Systems

LLM agent architectures generally consist of several layers working together. At the center is the agent core (the LLM), which interprets prompts. It is surrounded by support modules: a planning module to break tasks into steps, a memory module to store context and past results, and tool interfaces that let the agent query databases or call APIs. A user’s query enters the system at the input layer, then the LLM core generates a plan and actions, which may trigger external tools or memory lookups. Finally, the LLM assembles the results into an answer and outputs it.

Key Architectural Layers

- LLM Core (Agent Brain): A large language model that coordinates reasoning. The agent’s prompt typically includes its goals and the tools it can use. The LLM’s output drives the plan and action selection.

- Planning Module: Breaks a complex task into subgoals or steps. It may use prompts or internal logic (like chain-of-thought) to list what needs to be done.

- Memory Module: Keeps track of information over time. Short-term memory holds recent context from the conversation (in the LLM’s context window), and long-term memory uses an external vector store to retain facts or past interactions. Memory lets the agent recall earlier steps or user preferences.

- Tools/External Interfaces: Well-defined APIs or executables (search engines, calculators, databases, etc.) that the agent can invoke. For example, the agent might use a search API or a code interpreter to perform tasks beyond text generation.

- Autonomy Framework: A control layer that manages how the agent runs itself. It might schedule tasks, monitor progress, and handle multi-agent coordination if needed.

- Ethical and Safety Constraints: Rules and filters applied to ensure responsible behavior. For instance, agents include checks to avoid harmful outputs or privacy violations.

Data Flow from Task Input to Evaluated Output

- Input: The agent receives a user request or task.

- Goal Analysis and Planning: The LLM core analyzes the request and creates a plan of sub-tasks.

- Action Loop: For each planned step, the agent decides on an action. This might involve calling an external tool or generating text.

- Tool Execution: The chosen tool or function is executed (e.g., a web search, database query, or code run).

- Observation: The agent receives the result or observation from that tool/action.

- Memory Update: Relevant results or facts are stored in memory for later use.

- Iteration: The agent may refine its remaining plan based on new observations. It can loop back to planning if needed.

- Final Output: Once all steps are completed, the LLM generates a coherent final answer or report using the accumulated information.

This flow allows the agent to combine language reasoning with real-time data and actions, producing an output that has been evaluated step-by-step.
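The flow above can be sketched in Python. This is a minimal illustration under stated assumptions, not a reference implementation: `make_plan`, the `TOOLS` registry, and both stub tools are hypothetical stand-ins for an LLM call and real integrations.

```python
from typing import Callable, Dict, List, Tuple

def make_plan(task: str) -> List[Tuple[str, str]]:
    """Hypothetical stand-in for the LLM core: returns (tool_name, tool_input) steps."""
    return [("search", task), ("calculate", "2 + 3")]

# Hypothetical tools; each maps an input string to an observation string.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"stub result for '{query}'",
    "calculate": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}

def run_agent(task: str, max_steps: int = 10) -> str:
    plan = make_plan(task)                     # Goal analysis and planning
    memory: List[str] = []                     # Stores observations
    steps_taken = 0
    while plan and steps_taken < max_steps:    # Action loop with a step limit
        tool_name, tool_input = plan.pop(0)
        tool = TOOLS.get(tool_name)
        if tool is None:
            observation = f"unknown tool: {tool_name}"
        else:
            observation = tool(tool_input)     # Tool execution
        memory.append(f"{tool_name}: {observation}")  # Memory update
        steps_taken += 1
        # Iteration: a real agent could ask the LLM to revise `plan` here.
    # Final output: a real agent would ask the LLM to write the answer.
    return f"Task: {task}\n" + "\n".join(memory)
```

The step limit is a design choice worth keeping even in small prototypes, since an agent that can revise its own plan can otherwise loop indefinitely.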

Pseudocode of a Minimal Autonomous Agent

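    function run_agent(task):
        # Ask the LLM to break the task into steps
        plan = LLM.generate_plan(task)
        memory = []
        for step in plan:
            # Pick a tool for the step, run it, and store the result
            tool = select_tool_for(step)
            result = tool.execute(step)
            memory.append(result)
        # Combine the task, plan, and stored results into the final answer
        final_answer = LLM.generate_answer(task, plan, memory)
        return final_answer

Core Components of LLM-powered Autonomous Agents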

Planning

Planning lets the agent decompose tasks and sequence actions. One common technique is Chain-of-Thought (CoT) prompting, where the model is explicitly asked to “think step by step.” This helps the agent break a problem into simpler steps within its reasoning. Tree of Thoughts goes further by exploring multiple reasoning branches in parallel and selecting the best path. Another approach is LLM+P, where the agent uses a classical planner: the LLM translates the task into a planning problem (e.g. in PDDL), invokes an external planner to get a plan, and then translates that back into natural language steps.
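To make the branching idea concrete, here is a small sketch of a Tree-of-Thoughts-style search on a toy arithmetic puzzle. It is only an analogy: in a real system the LLM proposes the candidate thoughts and evaluates them, whereas here the fixed `STEPS` list and the distance-to-target score are hypothetical stand-ins.

```python
from typing import Callable, List, Optional, Tuple

State = int
Step = Tuple[str, Callable[[State], State]]

# Hypothetical "thoughts": the moves available at every node of the tree.
STEPS: List[Step] = [("+3", lambda x: x + 3), ("*2", lambda x: x * 2)]

def tree_search(start: State, target: State,
                beam_width: int = 2, max_depth: int = 5) -> Optional[List[str]]:
    """Breadth-first search that keeps only the best `beam_width` branches per level."""
    frontier: List[Tuple[State, List[str]]] = [(start, [])]
    for _ in range(max_depth):
        candidates: List[Tuple[State, List[str]]] = []
        for state, path in frontier:
            for label, apply in STEPS:
                new_state = apply(state)
                new_path = path + [label]
                if new_state == target:
                    return new_path
                candidates.append((new_state, new_path))
        # Evaluate branches (stand-in for an LLM scoring step) and prune.
        candidates.sort(key=lambda c: abs(target - c[0]))
        frontier = candidates[:beam_width]
    return None

print(tree_search(1, 14))  # ['+3', '+3', '*2']
```

Compared with a single chain of thought, the search keeps several partial solutions alive, so one weak intermediate step does not sink the whole attempt.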

Agents also use self-reflection methods. For example, the ReAct framework interleaves “Thought” and “Action” steps, allowing the agent to act on the environment and then reflect on the observation. Reflexion adds another layer: the agent uses feedback or learned heuristics to revise failed attempts. These planning and reflection techniques enable the agent to adaptively improve its strategy during execution.
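A ReAct-style loop can be sketched as follows. The "Thought / Action / Observation" text format and the `name[argument]` action syntax are assumptions made for this example, and `scripted_llm` is a hypothetical stand-in that returns canned replies instead of calling a model.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")

# Hypothetical tool: a tiny lookup table instead of a real search API.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda q: {"capital of France": "Paris"}.get(q, "no result"),
}

def scripted_llm(transcript: str) -> str:
    """Stand-in for a model call: act first, then answer from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: lookup[capital of France]"
    last_obs = transcript.rsplit("Observation: ", 1)[1]
    return f"Thought: The observation answers the question.\nAction: finish[{last_obs}]"

def react(question: str, llm: Callable[[str], str], max_turns: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_turns):
        reply = llm(transcript)                 # Thought + Action
        transcript += "\n" + reply
        match = ACTION_RE.search(reply)
        if match is None:
            return "no action found"
        name, arg = match.group(1), match.group(2)
        if name == "finish":                    # The model decided it is done
            return arg
        tool = TOOLS.get(name)
        observation = tool(arg) if tool else "unknown tool"
        transcript += f"\nObservation: {observation}"   # Fed back to the model
    return "turn limit reached"
```

The key point is that each observation is appended to the transcript, so the next model call can reason over what the previous action actually returned.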

Tool Use

Equipping an agent with external tools dramatically expands its capabilities. On its own, an LLM is unreliable at exact arithmetic and cannot fetch live data, but it can call a calculator API, a search engine, a knowledge graph, or other services. Various frameworks illustrate this: MRKL (Modular Reasoning, Knowledge and Language) is a neuro-symbolic system where the LLM routes queries to expert modules (e.g. math or weather) as needed. TALM and Toolformer are approaches that fine-tune LLMs to learn when to call APIs during generation. In practice, products like ChatGPT use plugin systems or function-calling interfaces so that the LLM can invoke real APIs (e.g. for flight booking, news search, or database queries) at runtime. In short, tools let the agent act on tasks that a bare LLM cannot solve alone.
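As a rough illustration of function calling, the sketch below dispatches a JSON tool request to a registered Python function. The JSON shape (`name` plus `arguments`) is an assumption for this example rather than any vendor's exact format, and both tools are hypothetical.

```python
import json
from typing import Any, Callable, Dict

TOOL_REGISTRY: Dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOL_REGISTRY[fn.__name__] = fn
    return fn

@tool
def add(a: float, b: float) -> float:
    return a + b

@tool
def get_weather(city: str) -> str:
    # Hypothetical stub; a real tool would call a weather API here.
    return f"(stub) no live data for {city}"

def dispatch(tool_call_json: str) -> Any:
    """Parse a model-produced tool call and run the matching function."""
    call = json.loads(tool_call_json)
    fn = TOOL_REGISTRY.get(call.get("name"))
    if fn is None:
        return {"error": f"unknown tool {call.get('name')!r}"}
    try:
        return fn(**call.get("arguments", {}))
    except TypeError as exc:  # Wrong or missing arguments from the model
        return {"error": str(exc)}
```

Returning errors as data rather than raising lets the agent show the failure to the LLM, which can then correct its call on the next turn.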

Memory

Agents draw inspiration from human memory systems. In humans, sensory memory captures immediate inputs, short-term memory holds information briefly, and long-term memory retains knowledge over time. In an LLM agent, sensory memory is analogous to the input prompt it just received. Short-term memory corresponds to what fits in the model’s context window (recent dialog or plan steps). Long-term memory is implemented by external storage: for example, the agent writes key facts into a database or vector store and later retrieves them.

Efficient retrieval is crucial. Agents typically encode memories as embeddings in a vector database and use maximum inner product search (MIPS) to quickly find relevant items. Libraries such as FAISS and index structures such as HNSW support fast approximate nearest-neighbor lookup, so the agent can recall past information or similar examples with low latency. Together, these memory mechanisms allow agents to remember past interactions and information beyond the LLM’s fixed context.
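The retrieval step can be illustrated with a toy long-term memory in plain Python. Two simplifications are assumed here: `embed` uses word counts instead of a learned embedding model, and `search` scans every item exhaustively, which is the part an approximate index would replace at scale.

```python
from collections import Counter
from typing import Dict, List, Tuple

def embed(text: str) -> Dict[str, float]:
    """Toy embedding: word counts. A real agent would use an embedding model."""
    return dict(Counter(text.lower().split()))

def inner_product(a: Dict[str, float], b: Dict[str, float]) -> float:
    return sum(value * b.get(key, 0.0) for key, value in a.items())

class VectorMemory:
    def __init__(self) -> None:
        self._items: List[Tuple[str, Dict[str, float]]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored texts with the highest inner product to the query."""
        q = embed(query)
        scored = sorted(self._items,
                        key=lambda item: inner_product(q, item[1]),
                        reverse=True)
        return [text for text, _ in scored[:k]]

mem = VectorMemory()
mem.add("user prefers vegetarian recipes")
mem.add("order 1042 shipped on Monday")
print(mem.search("which recipes does the user like"))
# ['user prefers vegetarian recipes']
```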

Agents also need an autonomy framework and safety constraints. The autonomy framework is the control layer that ties planning, memory, and tool use together. Ethical and safety constraints are built in (for example, filters or guidelines) to ensure the agent’s actions remain responsible and secure. These components help the agent manage its own workflow and avoid unsafe outcomes.

Top Use Cases of LLM-powered Autonomous Agents

Customer Support and Virtual Assistants

LLM agents shine in customer service roles. They can automatically handle routine inquiries, resolve common problems, and escalate complex issues to human staff. For instance, a retail or banking agent might greet a customer, answer FAQs, check order or account status, and even process standard requests, all via chat or voice. These agents can provide 24/7 personalized support through chatbots or digital assistants, reducing wait times and freeing human agents for harder cases.

Business Operations and Workflow Automation

In enterprises, agents streamline back-office workflows. They can schedule meetings, organize emails, generate reports, and track project progress. For example, an agent might gather sales data, compute key metrics, draft a summary report, and email it to the team. By connecting to calendars, CRM systems, and databases, agents automate repetitive tasks like updating spreadsheets or drafting routine documents. This boosts productivity by letting staff focus on strategy rather than clerical work.

Market Research and Data Analysis

Agents are adept at sifting through large datasets and extracting insights. In marketing and finance, an agent can monitor news, analyze social media sentiment, and compile statistical trends. For example, a market-research agent might scan competitor websites, collect sales figures, and forecast future demand, then summarize findings for a marketing team. By automating data gathering and summarization, agents help businesses make data-driven decisions more quickly.

Content Creation and Editing

Content teams use LLM agents to accelerate writing tasks. Agents can draft blog posts, press releases, or social media updates on given topics. They can also edit and proofread text, ensuring it follows a style guide. For instance, an agent might generate a first draft of a product description and then refine it for tone and clarity. By taking over the initial writing and routine editing, agents help human writers produce content faster without losing quality.

Healthcare Assistance

In healthcare, autonomous agents can improve both patient and provider workflows. They can answer patient questions (e.g. symptoms, medication info), schedule appointments, and triage queries. Agents can also assist clinicians by summarizing medical research, transcribing patient notes, or extracting key information from records. For example, an agent might review recent lab results and highlight anomalies for a doctor. By providing such support, agents can help medical staff focus more on patient care.

Education and Training

Educational agents deliver personalized learning experiences. A student can ask an agent to explain a concept or solve a problem, and the agent will adapt explanations to the student’s level. Teachers can use agents to generate quizzes, create lesson plans, or analyze student performance data. For instance, an agent might design practice exercises tailored to a student’s weak points. By automating these tasks, agents enable more interactive and individualized education.

Software Development Assistance

In software engineering, agents serve as coding assistants. Developers use them to generate code snippets from descriptions, find bugs, or document code. An agent might parse a natural-language request like “implement quicksort in Python,” write the code, run tests, and then explain the solution. Agents can also review pull requests or suggest improvements. By automating repetitive coding tasks and error checks, they accelerate development and improve code quality.

Domain-specific Research Agents (ChemCrow, Generative Agents)

Some agents are built for specialized research domains. ChemCrow is a chemistry-focused agent that pairs an LLM with 13 expert tools (e.g. molecular calculators) to plan chemical syntheses and support drug discovery. In experiments, ChemCrow solved complex organic chemistry problems more accurately than GPT-4 alone. Another example is Generative Agents (Park et al. 2023), where each agent is a virtual character driven by an LLM with memory and planning. These agents live in a simulated world, interact socially, and develop emergent behaviors like relationships and event planning. Such projects show how agents with memory and tools can perform advanced scientific or social simulations in specific domains.

Challenges in Building and Deploying LLM-powered Autonomous Agents

Understanding Task Scope and Complexity

Defining the agent’s role clearly is hard but critical. LLMs are flexible, but an agent will struggle if it’s given too vague or too broad a task. As one report notes, “overloading an agent with too many functions can lead to performance degradation or unexpected errors”. Agents work best with well-scoped tasks. If an agent is asked to handle an ill-defined project, it may make inconsistent decisions. Careful design is needed to align the agent’s capabilities with the task requirements.

Reliability and Accuracy of Natural Language Interfaces

LLM agents can produce “hallucinations” – outputs that sound plausible but are incorrect. This is a key challenge: when an agent explains its reasoning in natural language, errors may slip in. Ensuring reliability means adding verification or human-in-the-loop checks. For example, agents often use RAG or external data to validate claims. Developers must rigorously test agents and incorporate fact-checking to catch mistakes. Even then, debugging agent behavior can be tricky because its reasoning is opaque in text.

Memory and Context Management

Agents must balance keeping context and avoiding overload. They need to store enough history to handle long tasks, but too much memory can confuse the model. An agent might drop irrelevant details or summarize old information to keep memory manageable. Handling context across sessions or lengthy dialogs is especially hard. Poor memory management can make the agent lose track of user intent or repeat itself. Designing efficient memory schemes is essential for consistency.
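One simple way to keep context manageable is to retain only the most recent messages that fit a budget. The sketch below assumes a crude word-count proxy for tokens and inserts a placeholder where a real agent might store an LLM-written summary; both choices are illustrative. Note that the placeholder line itself is not counted against the budget.

```python
from typing import List

def count_tokens(text: str) -> int:
    """Crude proxy: whitespace-separated words. Real systems use the model's tokenizer."""
    return len(text.split())

def trim_history(messages: List[str], budget: int) -> List[str]:
    """Keep the newest messages that fit in `budget`; note how many were dropped."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):      # Walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()                          # Restore chronological order
    dropped = len(messages) - len(kept)
    if dropped:
        # A real agent might ask the LLM to summarize the dropped messages here.
        kept.insert(0, f"[{dropped} earlier messages omitted]")
    return kept
```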

Ethics and Safety

Deploying agents raises ethical concerns. If an agent’s training data is biased, it might produce biased decisions. Without safeguards, an agent could inadvertently share private data or suggest harmful actions. For example, an unchecked agent could reveal personal information from its context. To address this, teams add guardrails: filter outputs, enforce privacy rules, and encode ethical policies into the agent’s framework. Ensuring that the agent behaves safely and fairly is a major ongoing challenge.
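A guardrail can be as simple as a filter applied to every response before it leaves the agent. The sketch below is deliberately minimal: the blocked-term list and the email pattern are hypothetical policy choices for illustration, and production systems typically layer several such checks.

```python
import re

# Simplified email pattern for illustration; not a full address validator.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Hypothetical policy list of terms that should never appear in a response.
BLOCKED_TERMS = {"password", "ssn"}

def apply_guardrails(text: str) -> str:
    """Withhold responses that mention blocked terms; redact email addresses."""
    lowered = text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "[response withheld by policy]"
    return EMAIL_RE.sub("[redacted email]", text)
```

Running the filter on the final output, rather than only on tool results, covers the case where sensitive data reaches the response through the model's context.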

Integration with External Systems

The power of agents comes from linking to other software, but integrating different systems is complex. Each external API or database has its own format and security requirements. Ensuring seamless, secure connections between the agent and tools requires engineering effort. Agents must handle errors like timeouts or data mismatches gracefully. Achieving smooth interoperability and maintaining security (e.g. proper authorization) adds substantial complexity to deployment.
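Handling timeouts gracefully often comes down to retrying with a growing delay. The helper below is a sketch under assumptions: the name `call_with_retry`, the default attempt count, and the doubling delay are illustrative choices, and the `sleep` parameter exists only so the delay can be replaced in tests.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def call_with_retry(fn: Callable[[], T], attempts: int = 3,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fn`, retrying on timeouts or connection errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except (TimeoutError, ConnectionError):
            if attempt == attempts - 1:
                raise                          # Out of retries: surface the error
            sleep(base_delay * 2 ** attempt)   # Wait 0.5s, 1s, 2s, ...
    raise RuntimeError("unreachable")
```

Only transient error types are retried here. Errors such as failed authorization should propagate immediately, since repeating the call will not fix them.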

Performance and Scalability

LLM agents can be resource-intensive. Running an agent that uses a large model plus multiple API calls can be slow and costly. Scaling up for many users or real-time interactions is challenging. Enterprises must balance model size, response time, and cost. Techniques like model distillation or caching intermediate results can help, but performance remains a key barrier to large-scale use. Optimizing compute and ensuring responsive performance is an active area of development.
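Caching intermediate results can be sketched with the standard library's `functools.lru_cache`. The function `cached_tool_call` is a hypothetical placeholder for a slow API or model call. Cached values can go stale, so this approach suits lookups that do not need live data.

```python
from functools import lru_cache

@lru_cache(maxsize=256)
def cached_tool_call(tool_name: str, query: str) -> str:
    # Hypothetical slow call; a real agent would hit an API or a model here.
    return f"{tool_name} result for {query}"

cached_tool_call("search", "quarterly sales")   # Computed on the first call
cached_tool_call("search", "quarterly sales")   # Served from the cache
print(cached_tool_call.cache_info().hits)       # 1
```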

Strengthening LLM-Powered Autonomous Agents with TAG

Table-Augmented Generation (TAG) is a technique to enhance agents by giving them access to structured data. With TAG, an agent can query multiple database tables in real time and include that information in its reasoning. For example, a customer service agent might use TAG to pull the latest account and order data from a database when answering a query. This means the agent’s decisions are grounded in live, up-to-date data across systems. By fusing information from multiple tables into the prompt, TAG helps agents respond more accurately and confidently, especially for tasks like complex data lookup or multi-system coordination.
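The idea of fusing rows from several tables into a prompt can be sketched with Python's built-in `sqlite3` module. Everything specific here is assumed for illustration: the `customers` and `orders` schemas, the sample rows, and the prompt layout do not come from any particular TAG product.

```python
import sqlite3

def demo_db() -> sqlite3.Connection:
    """Build a small in-memory database with two hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, tier TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT);
        INSERT INTO customers VALUES (1, 'Ada', 'gold');
        INSERT INTO orders VALUES (10, 1, 'shipped'), (11, 1, 'processing');
    """)
    return conn

def build_prompt(conn: sqlite3.Connection, customer_id: int, question: str) -> str:
    """Query both tables at request time and place the rows in the prompt."""
    customer = conn.execute(
        "SELECT name, tier FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()
    if customer is None:
        raise LookupError(f"no customer with id {customer_id}")
    orders = conn.execute(
        "SELECT id, status FROM orders WHERE customer_id = ? ORDER BY id",
        (customer_id,),
    ).fetchall()
    lines = [f"Customer: {customer[0]} (tier: {customer[1]})"]
    lines += [f"- order {oid}: {status}" for oid, status in orders]
    lines.append(f"Question: {question}")
    return "\n".join(lines)
```

Because the queries run when the question arrives, the prompt reflects the current state of the tables rather than whatever the model saw during training.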

Platform and Case Studies

ChemCrow: Domain-specific Scientific Agent

ChemCrow (Bran et al. 2023) is an LLM-based agent designed for organic chemistry. It equips an LLM with 13 expert chemistry tools (such as reaction predictors and databases) to design synthetic pathways. Users give ChemCrow a goal like “synthesize compound X,” and the agent generates a plan using its toolkit. In evaluations, expert chemists rated ChemCrow’s solutions as much more chemically correct than those of a plain GPT-4 model. This shows that a specialized agent with domain tools can outperform general LLMs on complex scientific tasks.

Generative Agents: Simulating Human Behavior

Generative Agents (Park et al. 2023) is a research project where each agent is an autonomous LLM-driven character in a virtual environment. There are 25 agents (“people”) that observe the world, remember experiences, and plan actions. Each agent has a memory stream (an external diary of events), a retrieval model to recall relevant memories, and a reflection mechanism to draw higher-level conclusions from memories. The result is a mini “sandbox town” where agents exhibit human-like behaviors: they remember conversations, form relationships, and coordinate events (like hosting a party) over time. This case study highlights how adding memory and planning to LLMs can create realistic, autonomous characters.

Vendor and Platform Examples (e.g., K2view)

Several commercial platforms now support building LLM agents. For instance, K2view GenAI Data Fusion is a product that integrates LLM agents with enterprise data sources. It dynamically assembles prompts by pulling real-time data (customer information, transactions, etc.) from various databases, enabling context-aware responses. Other vendors like Kubiya and IBM offer orchestration tools to manage agents and workflows. These platforms let organizations deploy agents for customer service, data analysis, or IT tasks, combining LLMs with their own data and rules. For example, Kubiya’s platform has been used to create specialized automation agents in logistics and HR, and IBM’s Watsonx Agents provide templates for automating common enterprise processes. These examples illustrate how the agent framework is moving from research into real-world solutions.

Conclusion

At Designveloper, we see LLM-powered autonomous agents not just as a technological trend but as a cornerstone of the future of digital transformation. These systems are changing how businesses operate, innovate, and deliver value.

As a leading web and software development company in Vietnam, we’ve already helped companies across the globe adopt cutting-edge AI solutions. With more than 200 completed projects spanning industries such as healthcare, finance, and education, we know what it takes to bring complex AI-powered systems into production. Our work on platforms like Lumin PDF, used by over 70 million users worldwide, proves our ability to deliver scalable and reliable solutions that stand the test of real-world demands.

We understand that building LLM-powered autonomous agents requires more than theory. It demands a deep integration of planning, memory, tool use, and safety into enterprise-ready systems. Our expertise in AI, SaaS development, and custom software solutions means we can guide businesses from idea to deployment. Whether it’s designing AI-powered assistants, workflow automation tools, or domain-specific agents, we tailor each solution to fit our clients’ unique challenges.